Note

In this article I have discussed the various types of activation functions and the kinds of problems you might encounter while using each of them.

I would suggest beginning with a ReLU function and exploring other functions as you move further. You can also design your own activation functions to give your network a non-linear component.

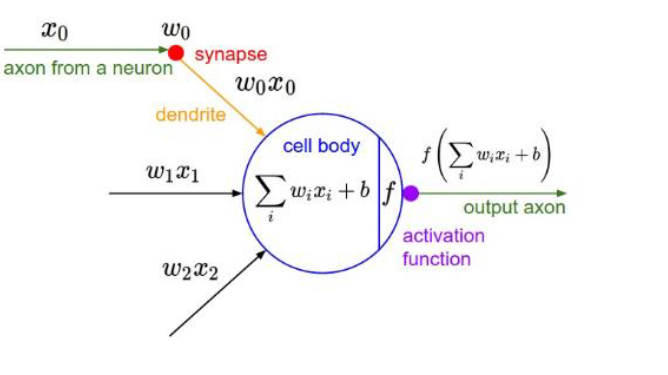

Recall that the inputs x0, x1, x2, x3, …, xn are multiplied by the weights w0, w1, w2, w3, …, wn and summed together with a bias term to form the input to the activation function.

The weight **w** sets how much strength we want to give to an incoming input, and we can think of **b** as an offset value: x*w has to reach that offset before it has an effect.
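The weighted sum described above can be sketched in a few lines of plain Python. This is only an illustration for a single neuron; the function name `weighted_sum` and the sample values are assumptions made for the example, not something taken from a particular library.

```python
def weighted_sum(inputs, weights, bias):
    """Return z = x0*w0 + x1*w1 + ... + xn*wn + b for one neuron."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    # Multiply each input by its weight, add the products, then add the bias.
    return sum(x * w for x, w in zip(inputs, weights)) + bias


# Hypothetical sample values, chosen only for illustration.
x = [1.0, 2.0, 3.0]
w = [0.5, -1.0, 2.0]
b = 0.5

z = weighted_sum(x, w, b)
print(z)  # 0.5 - 2.0 + 6.0 + 0.5 = 5.0
```

A larger weight makes its input count for more in z, while the bias shifts z up or down regardless of the inputs.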

So far we have looked at the inputs. So what is an activation function?

An activation function is used to set the boundaries for the overall output value. For example, let **z = X*w + b** be the output of the previous layer. It is then sent to the activation function, which limits its value to between 0 and 1 (in a binary classification problem).
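As a rough sketch of what "limiting the value between 0 and 1" looks like, here is the sigmoid function, one common choice for binary classification, next to ReLU for comparison. The helper names `sigmoid` and `relu` are written out here for illustration and assume plain Python with only the standard `math` module.

```python
import math


def sigmoid(z):
    """Map any real z to a value between 0 and 1."""
    # Branch on the sign of z so math.exp never receives a large positive
    # argument, which would overflow.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def relu(z):
    """Return z when it is positive, otherwise 0 (not bounded above)."""
    return max(0.0, z)


for z in (-5.0, 0.0, 5.0):
    print(z, sigmoid(z), relu(z))
```

Note that sigmoid squashes every input into the 0 to 1 range, whereas ReLU only clips negative values to 0 and leaves positive values unchanged.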

Finally, the output from the activation function moves to the next hidden layer and the same process is repeated. This forward movement of information is known as forward propagation.

What if the generated output is far from the actual value? Using the output from forward propagation, the error is calculated. Based on this error value, the weights and biases of the neurons are updated. This process is known as back-propagation.
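To make the forward and backward passes concrete, here is a minimal sketch for a single neuron. It assumes a sigmoid activation, a squared-error loss, and plain gradient descent with a fixed learning rate; none of these choices are prescribed by the text above, and the name `train_step` and the sample data are hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_step(x, w, b, target, lr=0.1):
    """Run one forward pass and one weight update for a single neuron."""
    # Forward propagation: weighted sum, then activation.
    z = sum(xi * wi for xi, wi in zip(x, w)) + b
    a = sigmoid(z)

    # Error between the prediction and the actual value
    # (assumed loss: 0.5 * (a - target)^2).
    error = a - target
    loss = 0.5 * error ** 2

    # Back-propagation via the chain rule: dL/dz = (a - target) * a * (1 - a).
    dz = error * a * (1.0 - a)

    # Gradient descent: move each parameter against its gradient.
    new_w = [wi - lr * dz * xi for xi, wi in zip(x, w)]
    new_b = b - lr * dz
    return new_w, new_b, loss


# Hypothetical training example: one input vector with target output 1.0.
w, b = [0.0, 0.0], 0.0
losses = []
for _ in range(100):
    w, b, loss = train_step([1.0, 2.0], w, b, target=1.0, lr=0.5)
    losses.append(loss)

print(losses[0], losses[-1])  # the loss shrinks as the weights are updated
```

A real network repeats the same idea layer by layer, passing the error backwards so that every weight and bias receives its own gradient.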
