The Central Limit Theorem plays a significant part in statistical inference. It describes how much an increase in sample size reduces sampling error, which in turn tells us the precision, or margin of error, of estimates (for example, percentages) computed from samples.
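To make the link between sample size and sampling error concrete, here is the standard textbook form of that relationship. The symbols are not from the text above and are introduced here as assumptions: $\sigma$ is the population standard deviation, $n$ the sample size, $\hat{p}$ a sample proportion, and $z$ the critical value for the chosen confidence level (about 1.96 for 95%).

$$
\mathrm{SE}(\bar{x}) = \frac{\sigma}{\sqrt{n}}, \qquad \text{margin of error for a proportion} \approx z \sqrt{\frac{\hat{p}\,(1-\hat{p})}{n}}
$$

For example, with $\hat{p} = 0.5$ and $z = 1.96$, a sample of $n = 100$ gives a margin of error of about $1.96 \times 0.05 = 0.098$ (roughly 10 percentage points), while $n = 400$ gives about $1.96 \times 0.025 = 0.049$. Quadrupling the sample size only halves the error.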

Statistical inference rests on the idea that results from a sample can be generalized to the population. How can we be confident that relationships seen in a sample are not simply due to chance?

Significance tests are intended to offer an objective measure to inform decisions about the validity of that generalization. For instance, one may find a negative relationship between education and income in a sample. However, additional information is needed to show that the result is not simply due to chance, but is statistically significant.
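As a rough illustration of what a significance test does, the sketch below runs a permutation test on a correlation. This is only one of many possible tests and is not necessarily the one the discussion above has in mind. The data are synthetic and generated purely for illustration, and the helper names `pearson_r` and `permutation_p_value` are hypothetical.

```python
import random
import statistics

def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    mean_x, mean_y = statistics.fmean(xs), statistics.fmean(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    norm_x = sum((x - mean_x) ** 2 for x in xs) ** 0.5
    norm_y = sum((y - mean_y) ** 2 for y in ys) ** 0.5
    return cov / (norm_x * norm_y)

def permutation_p_value(xs, ys, num_permutations, rng):
    """Two-sided p-value: how often does shuffling ys give a correlation
    at least as strong as the observed one?"""
    observed = abs(pearson_r(xs, ys))
    shuffled = list(ys)
    extreme = 0
    for _ in range(num_permutations):
        rng.shuffle(shuffled)  # break any real link between xs and ys
        if abs(pearson_r(xs, shuffled)) >= observed:
            extreme += 1
    # Add-one correction so the estimate is never exactly zero.
    return (extreme + 1) / (num_permutations + 1)

rng = random.Random(0)
# Synthetic data (an assumption for illustration): ys falls as xs rises, plus noise.
xs = [rng.gauss(0, 1) for _ in range(40)]
ys = [-0.8 * x + rng.gauss(0, 0.5) for x in xs]

r = pearson_r(xs, ys)
p_value = permutation_p_value(xs, ys, 2000, rng)
print(f"r = {r:.2f}, permutation p-value = {p_value:.4f}")
```

A small p-value means that a relationship this strong rarely appears when the pairing between the two variables is random, which is the sense in which a result is called statistically significant.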

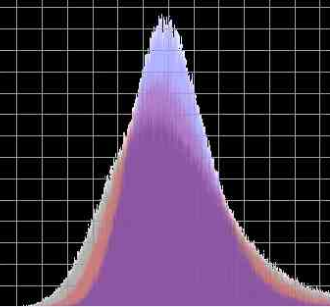

The Central Limit Theorem (CLT) is a mainstay of statistics and probability. The theorem states that as the sample size grows, the distribution of the sample mean across many independent samples approaches a Gaussian distribution, provided the population has finite variance.

We can think of running a trial and getting an outcome, or observation. We can repeat the trial and get another independent observation. Taken together, many observations make up a sample.

If we calculate the mean of a sample, it will approximate the mean of the population distribution. However, like any estimate, it will not be exact and will contain some error. If we draw many independent samples and compute their means, the distribution of those means will be approximately Gaussian.
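The idea of drawing many independent samples and looking at their means can be sketched with a short simulation. The population here is assumed to be an exponential distribution with mean 1, chosen only because it is clearly not Gaussian. The sample sizes, the number of samples, and the helper name `sample_means` are likewise illustrative assumptions.

```python
import random
import statistics

def sample_means(sample_size, num_samples, rng):
    """Draw num_samples independent samples and return the mean of each."""
    return [
        statistics.fmean(rng.expovariate(1.0) for _ in range(sample_size))
        for _ in range(num_samples)
    ]

rng = random.Random(42)
means_small = sample_means(5, 2000, rng)    # small samples
means_large = sample_means(50, 2000, rng)   # larger samples

# The means cluster around the population mean (1.0), and their spread
# shrinks roughly like sigma / sqrt(n) as the sample size n grows.
spread_small = statistics.stdev(means_small)
spread_large = statistics.stdev(means_large)
print(f"n=5:  mean of means = {statistics.fmean(means_small):.3f}, spread = {spread_small:.3f}")
print(f"n=50: mean of means = {statistics.fmean(means_large):.3f}, spread = {spread_large:.3f}")
```

Plotting a histogram of `means_large` should show a roughly bell-shaped curve, even though the individual observations come from a strongly skewed distribution.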
