1. Introduction

Most of the time, the cause of poor performance in a machine learning (ML) model is either overfitting or underfitting. A good model should be able to generalize and overcome both the overfitting and the underfitting problems. But what is overfitting? What is underfitting? And what does it mean for a model to generalize the learned function/rule?

Read on and you will be able to answer all these questions.

2. What is Generalization in Machine Learning

Generalization of an ML model refers to how well the rules/patterns/functions learned by the model apply to specific examples that the model did not see during training. These held-out examples are usually called the unseen set or the test set.

The goal of a good ML model is to generalize well from the training data to any data drawn from the problem’s domain. This allows the model to make predictions on data it has NEVER seen before (i.e. to make predictions on future data).
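To make "unseen data" concrete, here is a minimal Python sketch, assuming a synthetic dataset (y = 2x + 1 plus Gaussian noise) and hand-rolled helpers `train_test_split` and `mse` that are hypothetical illustrations, not scikit-learn's functions of a similar name. It holds out a quarter of the samples, fits a straight line on the rest, and reports the error on both parts:

```python
import random


def train_test_split(xs, ys, test_fraction=0.25, seed=0):
    """Hypothetical helper: shuffle paired samples and hold out a fraction as an unseen test set."""
    indices = list(range(len(xs)))
    random.Random(seed).shuffle(indices)
    n_test = int(len(xs) * test_fraction)
    test_idx, train_idx = indices[:n_test], indices[n_test:]
    return ([xs[i] for i in train_idx], [ys[i] for i in train_idx],
            [xs[i] for i in test_idx], [ys[i] for i in test_idx])


def mse(y_true, y_pred):
    """Mean squared error between two equal-length sequences."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


# Hypothetical data: the true rule is y = 2x + 1; the Gaussian term is noise.
rng = random.Random(42)
xs = [rng.uniform(0, 10) for _ in range(200)]
ys = [2 * x + 1 + rng.gauss(0, 1) for x in xs]

x_train, y_train, x_test, y_test = train_test_split(xs, ys)

# Fit a straight line on the training split only (closed-form least squares).
mx = sum(x_train) / len(x_train)
my = sum(y_train) / len(y_train)
slope = (sum((x - mx) * (y - my) for x, y in zip(x_train, y_train))
         / sum((x - mx) ** 2 for x in x_train))
intercept = my - slope * mx


def predict(x):
    return slope * x + intercept


train_error = mse(y_train, [predict(x) for x in x_train])
test_error = mse(y_test, [predict(x) for x in x_test])  # error on samples the model never saw
print(f"train MSE={train_error:.3f}  test MSE={test_error:.3f}")
```

If the model generalizes, the test error lands close to the training error (here both should sit near the noise variance of 1 in expectation); a large gap between the two is the warning sign described in the next sections.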

3. What is Overfitting in Machine Learning

Overfitting means that our ML model is modeling (has learned) the training data too well.

Formally, overfitting refers to the situation where a model learns not only the underlying patterns in the training data but also the noise in it, to the extent that this negatively impacts the model’s performance on new unseen data.

In other words, the noise (i.e. random fluctuations) in the training set is learned by the model as rules/patterns. However, these noisy learned representations do not apply to new unseen data, and thus the model’s performance (measured, e.g., by accuracy, MSE or MAE) degrades.

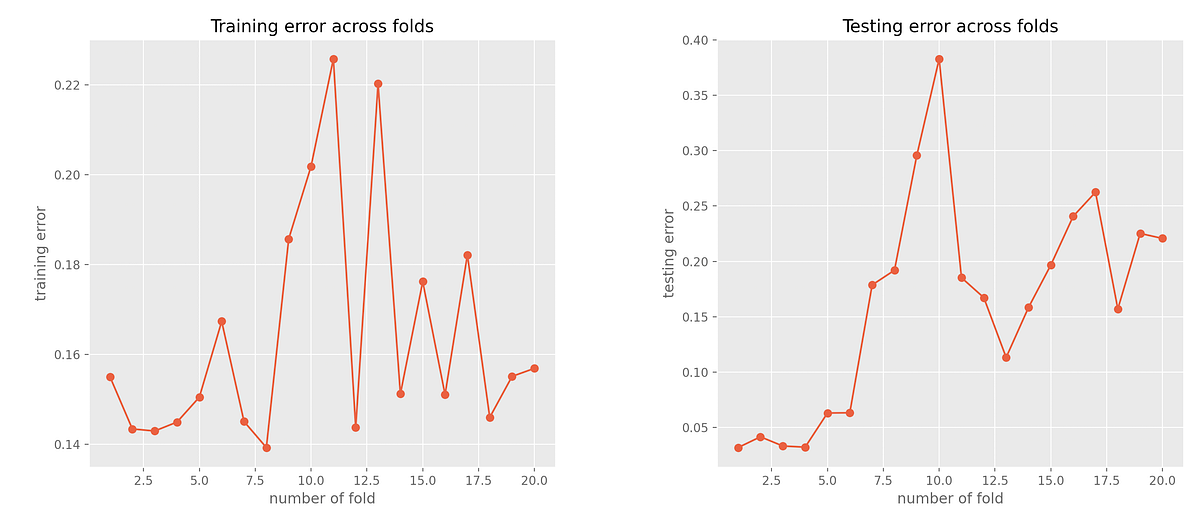

A textbook case of overfitting is when the model’s error on the training set (i.e. during training) is very low, but its error on the test set (i.e. on unseen samples) is large!
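The overfitting pattern can be reproduced in a few lines. The sketch below swaps in 1-nearest-neighbour regression (not a method from this article), chosen because it memorizes every training label, noise included; the data (sin(x) plus Gaussian noise with standard deviation 0.3) and all function names are hypothetical:

```python
import math
import random


def nearest_neighbor_predict(x_train, y_train, x):
    """1-nearest-neighbour regression: return the label of the closest training point."""
    closest = min(range(len(x_train)), key=lambda i: abs(x_train[i] - x))
    return y_train[closest]


def mse(y_true, y_pred):
    """Mean squared error between two equal-length sequences."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


# Hypothetical data: the true rule is sin(x); the Gaussian term is the noise.
rng = random.Random(0)


def sample(n):
    xs = [rng.uniform(0, 2 * math.pi) for _ in range(n)]
    ys = [math.sin(x) + rng.gauss(0, 0.3) for x in xs]
    return xs, ys


x_train, y_train = sample(100)
x_test, y_test = sample(100)

# Each training point is its own nearest neighbour, so its noisy label is reproduced exactly.
train_error = mse(y_train, [nearest_neighbor_predict(x_train, y_train, x) for x in x_train])
# Unseen points inherit the noise of whichever training point happens to be closest.
test_error = mse(y_test, [nearest_neighbor_predict(x_train, y_train, x) for x in x_test])
print(f"train MSE={train_error:.3f}  test MSE={test_error:.3f}")
```

The training error is exactly zero, while the test error is clearly positive: the model has learned the noise as if it were part of the rule. For comparison, a model that had learned only sin(x) would score about 0.09 (the noise variance) on both sets in expectation.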

4. What is Underfitting in Machine Learning

Underfitting means that our ML model can neither model the training data nor generalize to new unseen data.

A model that underfits the data will have poor performance even on the training data. For example, a linear model used to capture non-linear trends in the data will underfit it, because a straight line cannot bend to follow a curved relationship.
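The linear-model example can be sketched as follows, assuming synthetic data from the parabola y = x² with small Gaussian noise; `fit_line` and `mse` are hypothetical helpers implementing ordinary least squares and mean squared error. Only the training error is computed, since underfitting already shows up there:

```python
import random


def fit_line(xs, ys):
    """Ordinary least squares for y ≈ a + b*x (closed form); returns (a, b)."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    b = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    return my - b * mx, b


def mse(y_true, y_pred):
    """Mean squared error between two equal-length sequences."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


# Hypothetical non-linear data: y = x^2 plus a little noise (std 0.1).
rng = random.Random(1)
xs = [rng.uniform(-3, 3) for _ in range(200)]
ys = [x ** 2 + rng.gauss(0, 0.1) for x in xs]

# A straight line in x cannot follow the parabola: high error even on the training data.
a, b = fit_line(xs, ys)
linear_error = mse(ys, [a + b * x for x in xs])

# Same least-squares routine, but on the feature x^2: the model now has enough capacity.
a2, b2 = fit_line([x ** 2 for x in xs], ys)
quadratic_error = mse(ys, [a2 + b2 * x ** 2 for x in xs])

print(f"linear train MSE={linear_error:.3f}  quadratic-feature train MSE={quadratic_error:.3f}")
```

The straight line's training error sits far above the noise variance (0.01), which is the underfitting signature. Giving the same routine the feature x² instead of x brings the error down to roughly the noise level, suggesting the problem was the model's limited capacity rather than the data.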

A textbook case of underfitting is when the model’s error on both the training and test sets (i.e. during training and testing) is very high.
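The two textbook cases can be condensed into a rule of thumb. The sketch below is a hypothetical helper, `diagnose`, whose `acceptable_error` threshold is an assumption you would have to choose per problem and per metric:

```python
def diagnose(train_error: float, test_error: float, acceptable_error: float) -> str:
    """Rule-of-thumb reading of train/test error.

    acceptable_error is a hypothetical, problem-specific threshold (same units as the errors).
    """
    if train_error > acceptable_error:
        # Poor even on the data the model was trained on.
        return "underfitting"
    if test_error > acceptable_error:
        # Low training error that does not carry over to unseen data.
        return "overfitting"
    return "generalizes"


print(diagnose(0.01, 0.50, acceptable_error=0.1))
print(diagnose(0.80, 0.90, acceptable_error=0.1))
print(diagnose(0.05, 0.07, acceptable_error=0.1))
```

In practice the threshold is often set relative to a baseline (for example, the error of always predicting the mean), but that choice is left open here.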
