This post deals with a very common question in Machine Learning, i.e. “How do I get the final model given training data so that it can perform/generalize well on the test set?” or “How do I get the predictive model using k-fold Cross-validation?”. The common answers to these common questions are Cross-validation and/or Ensemble Methods. But again, I rarely found any post combining all the details which tell step by step procedure to perform the same. Here, I have attempted to combine all the details available from various posts/blogs to the simplest possible way to reduce the troubles.

So, from the above story, one can conclude as follows:

Step1: Perform k-fold cross-validation on training data to estimate which method (model) is better.

Step2: Perform k-fold cross-validation on training data to estimate which value of hyper-parameter is better.

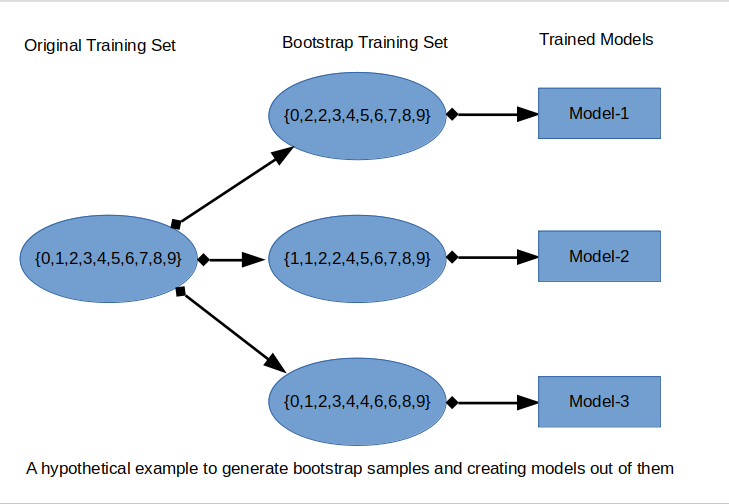

Step3: Apply ensemble methods on entire training data using the method (model) and hyper-parameters obtained by cross-validation to get the final predictive model.

Let’s discuss each step in detail one be one:

Step1:

Let’s start with a definition of Cross-validation:

“Cross-validation is a technique for evaluating ML models by training several ML models on subsets of the available input data and evaluating them on the complementary subset of the data. Use cross-validation to detect overfitting, i.e., failing to generalize a pattern.”[1]

Now, let me point out a general mistake people do while reading the definition. In the definition “ML models” refer to a particular method ( Linear Discriminant Analysis (LDA) or Support Vector Machine or CNN or LSTM) but not the particular instances of the method as different models. So we might say ‘we have a SVM model’ but we should not call two different sets of the trained coefficients as different models. At least not in the context of model selection[2].

Now, Let us assume that we have two models, say a SVM and a FNN. Our task is to define which model is better? We can do k-fold cross-validation and see which one proves overall better at predicting the validation set points. But once we have used cross-validation to select the better performing model, we train that model (whether it be the SVM or the FNN) on entire training data (how do we train will be discussed later). We do not use the actual model instances we trained during cross-validation for our final predictive model.

So, when we do k-fold cross validation, essentially we are measuring the capacity of our model and how well our model is able to get trained by some data and then make predictions on the data it has not seen before. To know more about various types of cross-validation and their advantage, please read this article.

#bagging #ensemble-method #crossvalidation #bootstrapping #machine-learning #bootstrap