Let’s understand why explainable AI is getting so much attention these days. Consider an example: a consumer, Mr. X, goes to a bank for a personal loan. The bank collects his demographic details, his credit bureau details, and his bank statements for the last 6 months. It then runs this data through its production machine learning model to check whether he is likely to default on the loan.

The complex ML model deployed in production says that this person has a 55% chance of defaulting on his loan, and the bank subsequently rejects Mr. X’s personal loan application.

Now Mr. X is angry and puzzled about the rejection, so he goes to the bank manager and asks why his personal loan application was rejected. The manager looks at the application and is puzzled too: it looks good enough to grant a loan, so why did the model predict otherwise? This confusion makes the manager doubt every loan that the machine learning model previously rejected. Even though the model’s accuracy is more than 98%, it still fails to gain trust.

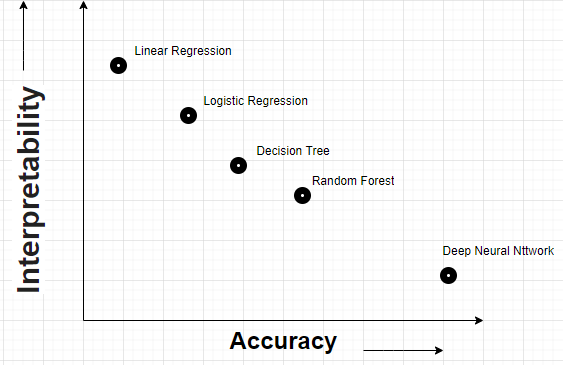

Every data scientist wants to deploy to production the model with the highest prediction accuracy. The graph shows the trade-off between a model’s interpretability and its accuracy.

Interpretability vs. Accuracy of the Model

Notice that as the accuracy of the model increases, its interpretability decreases significantly, and that keeps complex models from being used in production.

This is where Explainable AI comes to the rescue. An Explainable AI system does not only predict the outcome; it also explains the process and the features that led to that conclusion. Isn’t it great that the model explains itself?

ML and AI applications have reached almost every industry, such as Banking & Finance, Healthcare, Manufacturing, E-commerce, etc. But people are still afraid to use complex models in their field, because they think complex machine learning models are black boxes whose output they will not be able to explain to the business and stakeholders. I hope by now you have understood why Explainable AI is required for better and more efficient use of machine learning and deep learning models.

Now, let’s understand what Explainable AI is and how it works.

Explainable AI is a set of tools and methods in Artificial Intelligence (AI) that explain how a model reached a particular output for a given data point.

Consider the example above, where Mr. X’s loan was rejected and the bank manager could not figure out why. Here, an explainable AI tool can report the important features, and how much weight the model gave each of them, in reaching this output. Now that the manager has this report:

- He has more confidence in the model and its output.

- He can use more complex models, because he is able to explain their output to the business and stakeholders.

- Mr. X gets an explanation from the bank for his loan rejection. He knows exactly what needs to improve in order to get a loan from the bank.
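
To make the idea of such a report concrete, here is a minimal sketch in Python. It assumes a toy logistic model with three made-up features; the feature names, weights, and Mr. X’s values are all hypothetical and chosen only for illustration, not taken from any real bank model. For a linear model like this, each feature’s contribution to the score is simply its weight times its value, so the explanation can be read off directly. Complex models need dedicated explanation tools to produce a comparable breakdown.

```python
import math

# Hypothetical weights of a toy logistic default-risk model.
# All names and numbers here are illustrative assumptions.
WEIGHTS = {
    "credit_utilization": 2.0,    # share of the credit limit in use (0..1)
    "missed_payments_6m": 0.8,    # missed payments in the last 6 months
    "income_to_emi_ratio": -0.5,  # monthly income divided by monthly instalment
}
BIAS = -0.6


def explain_prediction(applicant):
    """Return the default probability and each feature's contribution to the log-odds."""
    contributions = {name: WEIGHTS[name] * applicant[name] for name in WEIGHTS}
    log_odds = BIAS + sum(contributions.values())
    probability = 1.0 / (1.0 + math.exp(-log_odds))
    # Put the features that moved the score the most (in either direction) first.
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return probability, ranked


# Hypothetical applicant values for Mr. X.
mr_x = {"credit_utilization": 0.9, "missed_payments_6m": 1, "income_to_emi_ratio": 3.6}
probability, ranked = explain_prediction(mr_x)

print(f"Predicted default probability: {probability:.0%}")
for name, value in ranked:
    direction = "raises" if value > 0 else "lowers"
    print(f"{name}: {direction} risk (contribution {value:+.2f})")
```

With these assumed numbers the score comes out at roughly 55%, and the breakdown shows that high credit utilization and a missed payment push the risk up while the income-to-instalment ratio pulls it down. That is the kind of report the manager can show to Mr. X.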
