Natural Language Processing (NLP) is commonly used in text classification tasks such as spam detection and sentiment analysis, text generation, language translations and document classification. Text data can be considered either in sequence of character, sequence of words or sequence of sentences. Most commonly, text data are considered as sequence of words for most problems. In this article we will delve into, pre-processing using simple example text data. However, the steps discussed here are applicable to any NLP tasks. Particularly, we’ll use TensorFlow2 Keras for text pre-processing which include:

- Tokenization

- Sequencing

- Padding

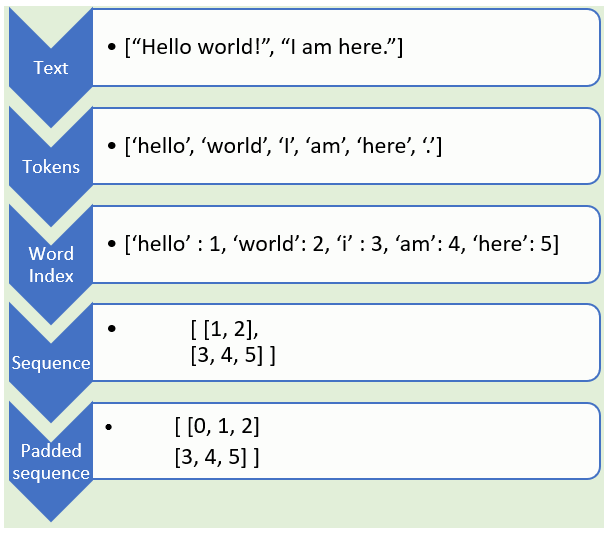

The figure below depicts the process of text pre-processing along with example outputs.

Step by step text pre-processing example starting from raw sentence to padded sequence

First, let’s import the required libraries. (A complete Jupyter notebook is available in my GitHub page).

import tensorflow as tf

from tensorflow.keras.preprocessing.text import Tokenizer

from tensorflow.keras.preprocessing.sequence import pad_sequences

Tokenizer is an API available in TensorFlow Keras which is used to tokenize sentences. We have defined our text data as sentences (each separated by a comma) and with an array of strings. There are 4 sentences including 1 with a maximum length of 5. Our text data also includes punctuations as shown below.

sentences = ["I want to go out.",

" I like to play.",

" No eating - ",

"No play!",

]

sentences

['I want to go out.', ' I like to play.', ' No eating - ', 'No play!']

Tokenization

As deep learning models do not understand text, we need to convert text into numerical representation. For this purpose, a first step is Tokenization. The Tokenizer API from TensorFlow Keras splits sentences into words and encodes these into integers. Below are hyperparameters used within Tokenizer API:

- num_words: Limits maximum number of most popular words to keep while training.

- filters: If not provided, by default filters out all punctuation terms (!”#$%&()*+,-./:;<=>?@[]^_’{|}~\t\n).

- lower=1. This is a default setting which converts all words to lower case

- oov_tok : When its used, out of vocabulary token will be added to word index in the corpus which is used to build the model. This is used to replace out of vocabulary words (words that are not in our corpus) during text_to_sequence calls (see below).

- word_index: Convert all words to integer index. Full list of words are available as key value property: key = word and value = token for that word

#nlp #data-science #tensorflow #deep learning