We live in a world where we are constantly in contact with Artificial Intelligence, often without even being aware of it.

It may not seem that way, given the image Hollywood has put into our minds of what Artificial Intelligence is (killer robots, omniscient software, etc.), but it’s really a lot simpler than that. John McCarthy (2007) defined Artificial Intelligence as the science and engineering of making intelligent [having the computational ability to achieve goals in the world] machines.

Right now, the main way in which these machines “learn” is through rote learning (trial and error) and drawing inferences. It is widely believed that “AI [artificial intelligence] will drive the human race” (Prime Minister Narendra Modi). There is no conclusive evidence for or against that claim, but it is widely accepted that A.I. does and will have an extreme influence on day-to-day life. For it to be integrated into society, laws would have to be made and reformed to provide a smooth transition. This requires not only a deep understanding of what A.I. is and how it works, but also a discussion of regulation: what changes need to be made, and how to maintain safety while maximizing effectiveness. That’s where usefulness comes into play: will the adoption of this technology really make as much of a difference as people say?

Prominent figures such as Elon Musk and Stephen Hawking have both warned about the technology and strongly urged that regulation be put in place before it’s “too late”. The public’s main concern is safety, which ties directly into questions of ethics. How will humans program ethics (something we as humans are still trying to understand ourselves) into a machine? How will this change how we look at crime? The typical way of judging crime and punishment (determining liability in front of a jury of peers) would have to be restructured.

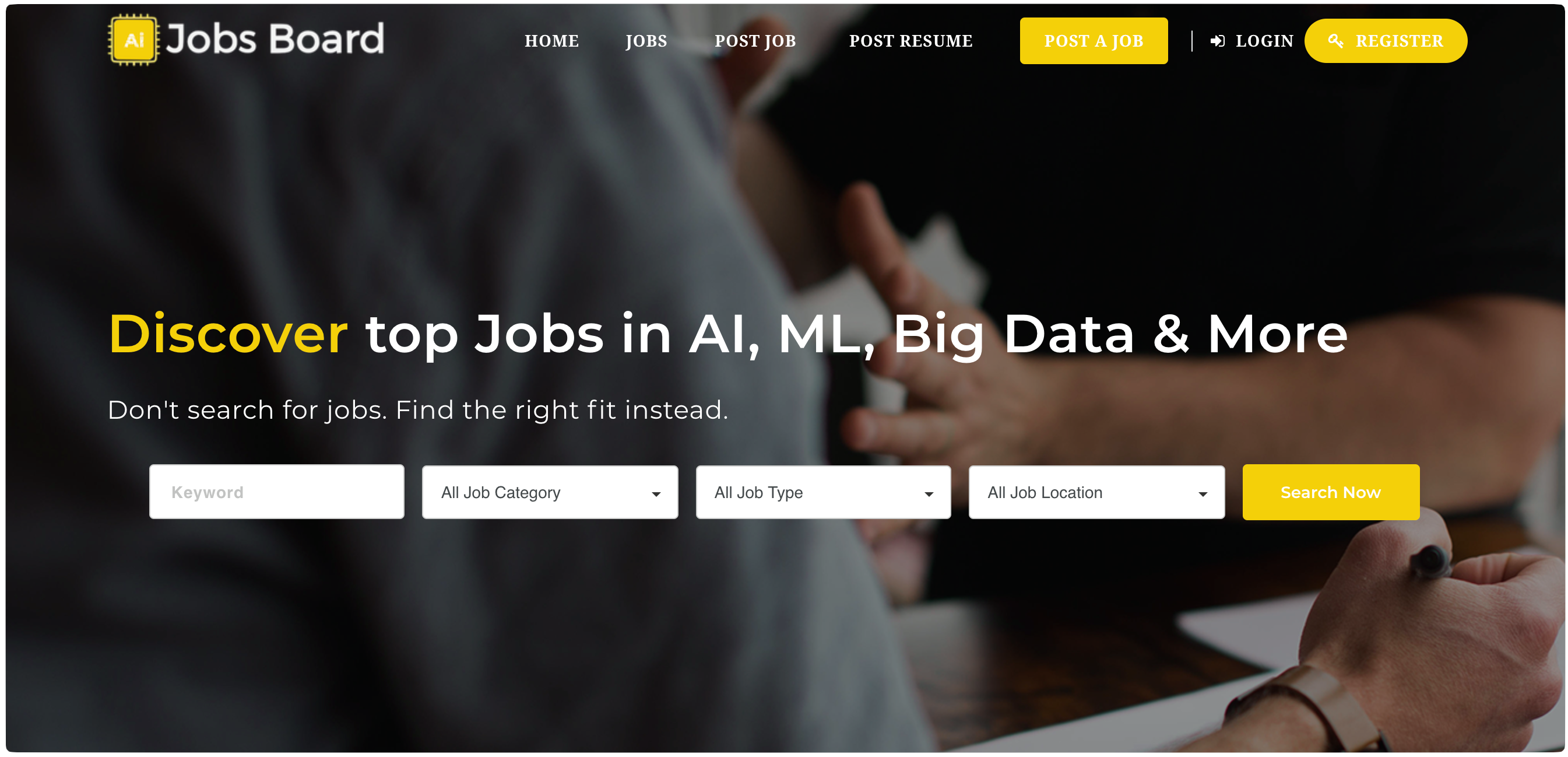

Jobs in AI

The question of how to regulate artificially intelligent machines is not something that only engineering and programming students talk about in debates and papers. The White House’s National Science and Technology Council Committee released a 48-page treatise, Preparing for the Future of Artificial Intelligence, examining the potential impact of AI on society and offering policy recommendations to handle it. The United States’ main short-term concern with AI is preventing an AI weapons arms race around the world. The United States government and several foreign governments are currently working on robotic law enforcers and even AI soldiers.

But the thing is, the matter of crime involving AI is still evolving. Traditionally, liability for interactions with intelligent machines and robots has fallen on the owner. However, considering the level of intellect in these machines, many believe that a Declaration of Intent needs to be made for them. The main objection to this is that Declarations of Intent require a certain level of cognition and a sense of judgement, something that many believe these machines do not currently have. Jeremy Elman states:

“After considering the ability of the court system, the most likely reality is that the world will need to adopt a standard for AI where the manufacturers and developers agree to abide by general ethical guidelines, such as through a technical standard mandated by treaty or international regulation”.

Technologists often go back to Asimov’s Three Laws of Robotics as a starting point for programming a certain level of rules and regulations directly into these machines. The laws are: a robot may not harm a human or, through inaction, allow a human to come to harm; a robot must obey the orders of a human except where they conflict with the First Law; and a robot must protect its own existence except where doing so conflicts with the first two laws.
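To make the ordering of the three laws concrete, here is a toy Python sketch. It is only an illustration, not how any real robot is programmed: the `Action` class, its fields, and the `choose` function are all hypothetical names invented for this example, and it assumes someone has already labeled each possible action with the laws it would break. That labeling is exactly the hard part the ethics questions above are about.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    """A hypothetical candidate action, pre-labeled with the laws it would break."""
    name: str
    harms_human: bool = False        # First Law: injures a human
    allows_human_harm: bool = False  # First Law: lets a human come to harm through inaction
    disobeys_order: bool = False     # Second Law: ignores a human's order
    endangers_self: bool = False     # Third Law: puts the robot itself at risk


def violations(action: Action) -> tuple:
    """Return (breaks First Law, breaks Second Law, breaks Third Law)."""
    return (
        action.harms_human or action.allows_human_harm,
        action.disobeys_order,
        action.endangers_self,
    )


def choose(actions: list) -> Action:
    """Pick the action that breaks the least important laws.

    Python compares tuples left to right and treats False as smaller than
    True, so breaking the First Law always outweighs breaking the Second,
    which in turn outweighs breaking the Third.
    """
    return min(actions, key=violations)


# A robot has been ordered to stay put, but a person nearby is in danger.
stay = Action("stay put", allows_human_harm=True)
rescue = Action("rescue the person", disobeys_order=True, endangers_self=True)
print(choose([stay, rescue]).name)
```

In this example the robot disobeys the order and risks itself, because staying put would break the First Law, which outranks the other two.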

#ai #legality #technology #machine-learning #artificial-intelligence #deep-learning