PyTorch’s AutoGrad

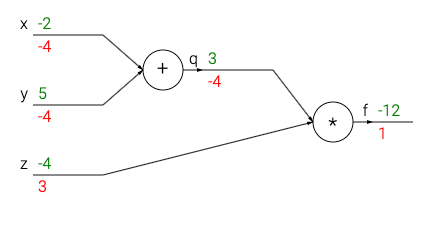

PyTorch’s AutoGrad is a powerful feature that automatically computes the derivative of one variable with respect to another. This comes in handy when calculating gradients for the gradient descent algorithm.

How to use this feature

First, let’s import the necessary libraries and set up a small example.

#importing the libraries

import torch

import numpy as np

import matplotlib.pyplot as plt

import random

x = torch.tensor(5.) #some data

w = torch.tensor(4.,requires_grad=True) #weight ( slope )

b = torch.tensor(2.,requires_grad=True) #bias (intercept)

y = x*w + b #equation of a line

y.backward() #computes the gradient of y with respect to every tensor that has requires_grad=True

print(w.grad,b.grad) #prints the derivatives of y with respect to w and b

Output:

tensor(5.) tensor(1.)
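These values can be checked by hand: since y = x*w + b, the derivative of y with respect to w is x (which is 5), and the derivative with respect to b is 1. As a rough sanity check, the sketch below compares AutoGrad's result with a central finite-difference estimate. The helper name `line`, the step size `eps`, and the use of float64 are illustrative assumptions, not part of the original example.

```python
import torch

def line(x, w, b):
    # Same line equation as in the example above (hypothetical helper name).
    return x * w + b

# float64 is an assumption made here to keep the finite-difference estimate accurate.
x = torch.tensor(5., dtype=torch.float64)
w = torch.tensor(4., dtype=torch.float64, requires_grad=True)
b = torch.tensor(2., dtype=torch.float64, requires_grad=True)

y = line(x, w, b)
y.backward()  # fills w.grad and b.grad

eps = 1e-6  # illustrative step size
with torch.no_grad():  # no graph is needed for the numerical estimate
    numeric_dw = (line(x, w + eps, b) - line(x, w - eps, b)) / (2 * eps)
    numeric_db = (line(x, w, b + eps) - line(x, w, b - eps)) / (2 * eps)

print(w.grad, numeric_dw)  # AutoGrad vs. numerical estimate of dy/dw
print(b.grad, numeric_db)  # AutoGrad vs. numerical estimate of dy/db
```

The two numbers on each line should agree up to a small rounding error.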

This is the basic idea behind PyTorch’s AutoGrad.

Calling **backward()** on a tensor (here, y) computes its gradient with respect to every tensor created with requires_grad=True,

and the .grad attribute of each of those tensors (w.grad, b.grad) stores the resulting derivative.
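To connect this to gradient descent, here is a minimal sketch of how the values in .grad might be used to update w and b. The target value `target`, the learning rate `lr`, the squared-error loss, and the number of steps are all assumptions chosen for illustration. Note that PyTorch accumulates gradients across backward() calls, so the sketch resets them after each update.

```python
import torch

x = torch.tensor(5.)                       # same data point as above
target = torch.tensor(30.)                 # hypothetical target value for y
w = torch.tensor(4., requires_grad=True)   # weight (slope)
b = torch.tensor(2., requires_grad=True)   # bias (intercept)
lr = 0.01                                  # hypothetical learning rate

losses = []
for step in range(20):
    y = x * w + b                  # forward pass
    loss = (y - target) ** 2       # squared error (an assumed loss)
    loss.backward()                # fills w.grad and b.grad

    with torch.no_grad():          # the update itself must not be tracked
        w -= lr * w.grad
        b -= lr * b.grad

    # Gradients accumulate by default, so clear them before the next step.
    w.grad.zero_()
    b.grad.zero_()
    losses.append(loss.item())

print(losses[0], losses[-1])       # the loss should shrink over the steps
```

In practice the manual update and reset are usually handled by an optimizer from torch.optim, but writing them out shows exactly where .grad is read.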

