Summary: key concepts of popular language model capabilities

We are all aware of the current revolution in the field of Artificial Intelligence (AI), and Natural Language Processing (NLP) is one of its major contributors.

For NLP tasks, where we build techniques for human-computer interaction, we first develop a language-specific understanding in the machine so that it can extract context from the training data. This mirrors the first step in parenting: babies first learn the language, and only then do we gradually give them more complex tasks.

In the conventional world, we need to nurture each baby individually. On the other hand, take any subject such as Physics: many people have contributed to it so far, and we have an established ecosystem of books and universities to pass the accumulated knowledge on to the next person. Conventional NLP language models were like the first case: everyone had to develop their own language understanding using some technique, and no one could leverage others' work. The computer vision branch of AI had already achieved this kind of reuse with its ImageNet object dataset. This concept is called Transfer Learning. According to Wikipedia:

Transfer learning (TL) is a research problem in machine learning (ML) that focuses on storing knowledge gained while solving one problem and applying it to a different but related problem. For example, knowledge gained while learning to recognize cars could apply when trying to recognize trucks.

To cut down on this repetitive, time-intensive, costly, and compute-intensive work, many major companies were working on language models whose language understanding others could leverage. BERT from Google was the major defining moment that changed much of the industry. Before that, the popular models were ELMo and GPT.

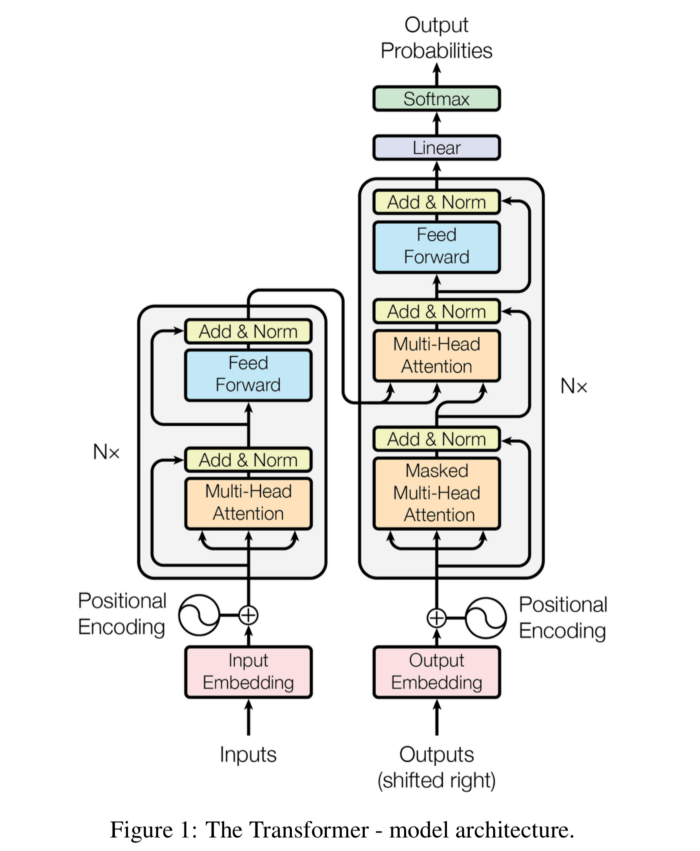

Before going into these language models, we must understand a few key concepts.

**Embedding** Most of our algorithms can't understand natural language directly, so we need to provide a numerical representation of the text. An embedding does exactly that, and different embedding methods produce different numerical representations of the same text. It can be a simple count-based embedding such as TF-IDF, or it can be prediction based or context based. Here we focus only on context-based embeddings.
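To make the idea of a numerical representation concrete, here is a minimal sketch of the simplest case mentioned above, a count-based TF-IDF embedding. It is an illustration only: the function name `tf_idf`, the whitespace tokenizer, and the unsmoothed `log(N / df)` weighting are assumptions made for this example, and real libraries usually apply smoothing and normalization on top of this.

```python
import math
from collections import Counter


def tf_idf(docs):
    """Return (vocab, vectors) for a list of text documents.

    Hypothetical helper for illustration: lowercase whitespace tokens,
    term frequency = count / document length, idf = log(N / df).
    """
    tokenized = [doc.lower().split() for doc in docs]
    n_docs = len(tokenized)

    # Document frequency: in how many documents does each term appear?
    df = Counter()
    for tokens in tokenized:
        df.update(set(tokens))

    vocab = sorted(df)
    vectors = []
    for tokens in tokenized:
        counts = Counter(tokens)
        total = len(tokens) or 1  # guard against empty documents
        vectors.append(
            [(counts[t] / total) * math.log(n_docs / df[t]) for t in vocab]
        )
    return vocab, vectors


docs = ["the cat sat", "the dog barked"]
vocab, vectors = tf_idf(docs)
print(vocab)  # ['barked', 'cat', 'dog', 'sat', 'the']
print(vectors[0])
```

Each document becomes one vector with one position per vocabulary word. Note that "the" gets a weight of zero because it appears in every document, and that a word always gets the same weight scheme regardless of the words around it. That fixed, context-free behaviour is the limitation that context-based embeddings address.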

**Number of Parameters of a Neural Network** All of these language models report this number as a measure of their capacity, and a model with more parameters is generally assumed to be more accurate. The parameters are typically the weights of the connections, which are learned during the training stage.
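As a rough illustration of where a parameter count comes from, the sketch below counts the learnable values in a small fully connected network. The function name `count_parameters` and the layer sizes are hypothetical, and the sketch assumes every layer is dense with one bias per output unit. Language models such as BERT use other layer types as well, so this only shows the principle.

```python
def count_parameters(layer_sizes):
    """Count weights and biases in a fully connected network.

    layer_sizes lists the number of units per layer, input first.
    Assumption for this sketch: dense layers with one bias per output unit.
    """
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        weights = n_in * n_out  # one weight per connection
        biases = n_out          # one bias per output unit
        total += weights + biases
    return total


# 4 inputs -> 8 hidden units -> 2 outputs
print(count_parameters([4, 8, 2]))  # 58
```

Here the first layer contributes 4 × 8 + 8 = 40 parameters and the second 8 × 2 + 2 = 18, for 58 in total. The same bookkeeping, applied to much larger and more varied layers, yields the millions or billions of parameters quoted for language models.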

#machine-learning #deep-learning #transformers #artificial-intelligence #nlp