What are overfitting and underfitting? They come up frequently when you train and test a machine learning or deep learning model. If you want to build a well-generalized model, it should neither overfit nor underfit.

What is a well-generalized model? It is a model that has trained well and also performs well on unseen data (testing data). A well-generalized model therefore has both low training error and low testing error. By contrast, an overfitting model has low training error but high testing error, and an underfitting model has high error on both.
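As a rough illustration of what "low training error and low testing error" looks like, here is a minimal sketch in plain Python. The data (a made-up rule `y = 2x + 1` with a little noise), the 80/20 split, and the helper name `mean_squared_error` are all assumptions chosen for the example, not part of any particular library.

```python
import random

random.seed(0)  # fixed seed so the run is repeatable

# Hypothetical data: y = 2x + 1 plus a little Gaussian noise.
xs = [random.uniform(0, 10) for _ in range(100)]
ys = [2 * x + 1 + random.gauss(0, 0.5) for x in xs]

# Hold out the last 20 points as unseen (testing) data.
train_x, test_x = xs[:80], xs[80:]
train_y, test_y = ys[:80], ys[80:]

# Fit a straight line y = a*x + b by ordinary least squares (closed form).
mean_x = sum(train_x) / len(train_x)
mean_y = sum(train_y) / len(train_y)
sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(train_x, train_y))
sxx = sum((x - mean_x) ** 2 for x in train_x)
a = sxy / sxx
b = mean_y - a * mean_x


def mean_squared_error(x_values, y_values):
    """Average squared gap between the line's prediction and the true value."""
    errors = [(a * x + b - y) ** 2 for x, y in zip(x_values, y_values)]
    return sum(errors) / len(errors)


train_error = mean_squared_error(train_x, train_y)
test_error = mean_squared_error(test_x, test_y)
print(f"training error: {train_error:.3f}")
print(f"testing error:  {test_error:.3f}")
```

Because the straight line matches the shape of the data, both numbers should come out small and close to each other, which is the behaviour expected from a well-generalized model.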

Before talking about overfitting and underfitting, there are some basic terms that need to be understood to get a clear idea of the whole topic:

- Signal = This refers to the data that helps a model identify general patterns.

- Noise = This refers to data that reflects special cases of particular objects in the dataset rather than a general pattern.

As an example, suppose we want to predict whether an animal is a bird. Having feathers is a common feature of birds, so it is a signal. Having a high body weight is not a common feature of birds (the ostrich is an exception), so a model would have to pay special attention to that feature because it does not follow the common feature set. That kind of data is called noise.

- Bias = This refers to the error that comes from a model being too simple to capture the pattern. A model with high bias does not perform well, and its predicted values differ greatly from the true values.

- Variance = This refers to the variability of the model's output when the training data changes. High variance shows up as a model that performs well on training data but poorly on testing data.
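Bias and variance can be estimated by training the same kind of model on many different training sets and looking at its predictions at one fixed point. The sketch below does this in plain Python under several assumptions of my own: the true pattern is `sin(x)`, the noise level is 0.3, and the two toy models (`constant_model` and `nearest_neighbour_model`) are hypothetical stand-ins for a too-simple and a too-flexible model.

```python
import math
import random
import statistics

random.seed(1)  # fixed seed so the run is repeatable


def true_value(x):
    """The underlying pattern (assumed for this example)."""
    return math.sin(x)


def sample_training_set(n=50, noise=0.3):
    """Draw a fresh noisy training set from the true pattern."""
    xs = [random.uniform(0, 2 * math.pi) for _ in range(n)]
    ys = [true_value(x) + random.gauss(0, noise) for x in xs]
    return xs, ys


def constant_model(xs, ys, x0):
    """Too simple: always predicts the average training target."""
    return sum(ys) / len(ys)


def nearest_neighbour_model(xs, ys, x0):
    """Too flexible: copies the target of the closest training point, noise included."""
    nearest = min(range(len(xs)), key=lambda i: abs(xs[i] - x0))
    return ys[nearest]


x0 = math.pi / 2  # the point where we compare predictions
models = {"constant": constant_model, "nearest": nearest_neighbour_model}
predictions = {name: [] for name in models}

# Retrain both models on 300 independent training sets.
for _ in range(300):
    xs, ys = sample_training_set()
    for name, model in models.items():
        predictions[name].append(model(xs, ys, x0))

results = {}
for name, preds in predictions.items():
    bias = statistics.mean(preds) - true_value(x0)  # systematic gap from the truth
    variance = statistics.pvariance(preds)          # spread across training sets
    results[name] = (bias, variance)
    print(f"{name:>8}: bias = {bias:+.3f}, variance = {variance:.3f}")
```

In this setup the constant model should show a large bias with little variance, while the nearest-neighbour model should show almost no bias but a noticeably larger variance.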

Overfitting

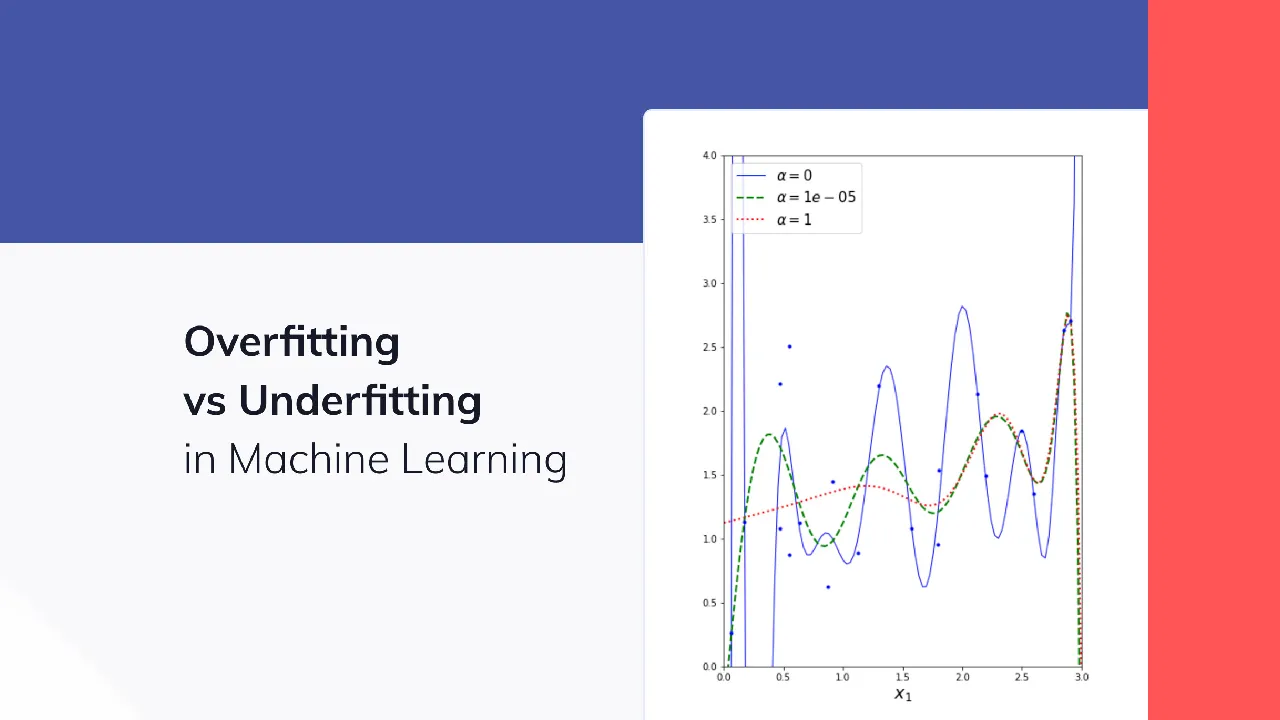

Overfitting mainly happens when the model's complexity is higher than the data's complexity. The model has captured the common patterns, but it has also captured the noise. It is as if the model fits every data point exactly, including the inaccurate data points in the dataset.
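To make this concrete, here is a minimal sketch in plain Python. Everything in it is an assumption made up for illustration: the rule `y = 2x + 1`, the single inaccurate training point, and the helper names `fit_line`, `fit_interpolant`, and `mse`. The complex model is a degree-9 polynomial forced through all ten training points, and the simple model is a straight line.

```python
def fit_line(xs, ys):
    """Simple model: least-squares straight line y = a*x + b."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    a = sxy / sxx
    b = mean_y - a * mean_x
    return lambda x: a * x + b


def fit_interpolant(xs, ys):
    """Complex model: Lagrange polynomial that passes through every training point."""
    def predict(x):
        total = 0.0
        for i, (xi, yi) in enumerate(zip(xs, ys)):
            term = yi
            for k, xk in enumerate(xs):
                if k != i:
                    term *= (x - xk) / (xi - xk)
            total += term
        return total
    return predict


def mse(model, xs, ys):
    """Mean squared error of a model on the given points."""
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Training data follows y = 2x + 1, except for one inaccurate point at x = 4.
train_x = [float(i) for i in range(10)]
train_y = [2 * x + 1 for x in train_x]
train_y[4] += 3.0

# Unseen data: points halfway between the training points, with correct values.
test_x = [x + 0.5 for x in train_x[:-1]]
test_y = [2 * x + 1 for x in test_x]

line = fit_line(train_x, train_y)
interpolant = fit_interpolant(train_x, train_y)

line_train, line_test = mse(line, train_x, train_y), mse(line, test_x, test_y)
interp_train, interp_test = mse(interpolant, train_x, train_y), mse(interpolant, test_x, test_y)

print(f"straight line: train = {line_train:.3f}, test = {line_test:.3f}")
print(f"degree-9 poly: train = {interp_train:.3f}, test = {interp_test:.3f}")
```

On this toy data the polynomial's training error is zero because it reproduces the inaccurate point exactly, while its error on the unseen points is far larger than the straight line's.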
