Graph Neural Networks (GNNs) have caught my attention lately. I have encountered several Machine Learning/Deep Learning problems that led me to papers and articles about GNNs. While trying to implement GNNs using Keras, I came across Spektral, a Python library for Graph Neural Networks based on Keras and TensorFlow 2, developed by Daniele Grattarola.

This library really sped up my understanding of GNNs, which is why I want to share some of my findings with everyone! In this article I will mainly touch on some basic theory, how to translate graphs into features that can be used by neural networks, and some other applications of GNNs.

Basic Graph Theory

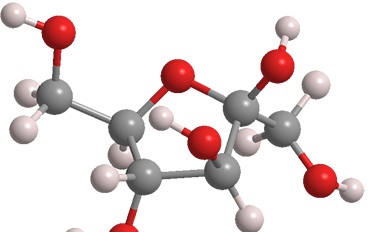

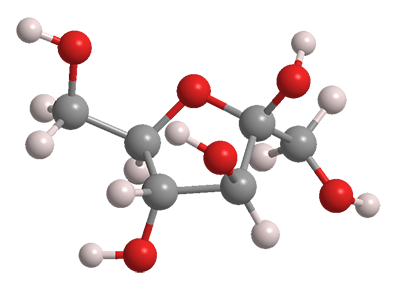

Let’s start from the beginning: basic graph theory. Nowadays, a lot of information is represented as graphs. Examples include Google’s Knowledge Graph, which helps with Search Engine Optimization (SEO), chemical molecular structures, document citation networks (document A cites document B), and social media networks (who is connected to whom?). A graph consists of two main elements: nodes (also called vertices or points) and edges (also called links or lines), where each edge connects a pair of nodes.

Now comes the next question: which parts of the data are nodes, and which are edges? There is no strict answer to this, as we have to define the nodes and edges ourselves. For example, in a chemical molecule that consists of multiple atoms, the atoms can be defined as nodes and the bonds between atoms can be defined as edges.
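To make the molecule example concrete, here is a minimal sketch in plain Python of a water molecule (H2O) stored as a graph. The integer node ids and the `neighbors` helper are assumptions made up for illustration. They do not come from Spektral or any other library.

```python
# Toy example: a water molecule (H2O) as a graph.
# Nodes are atoms, keyed by arbitrary integer ids chosen for illustration.
nodes = {0: "O", 1: "H", 2: "H"}

# Edges are bonds, stored as pairs of node ids (one O-H bond per pair).
edges = [(0, 1), (0, 2)]


def neighbors(node_id, edges):
    """Return the ids of all nodes that share an edge with node_id."""
    result = []
    for a, b in edges:
        if a == node_id:
            result.append(b)
        elif b == node_id:
            result.append(a)
    return result


for node_id, atom in nodes.items():
    bonded = [nodes[n] for n in neighbors(node_id, edges)]
    print(f"{atom} (node {node_id}) is bonded to {bonded}")
```

The same two containers, a collection of nodes and a list of node pairs, are enough to describe any of the graphs mentioned above. Only the meaning we assign to a node and an edge changes.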

Another example is the document citation network in the CORA dataset. Each node represents an individual document, and each edge represents a citation between two documents.

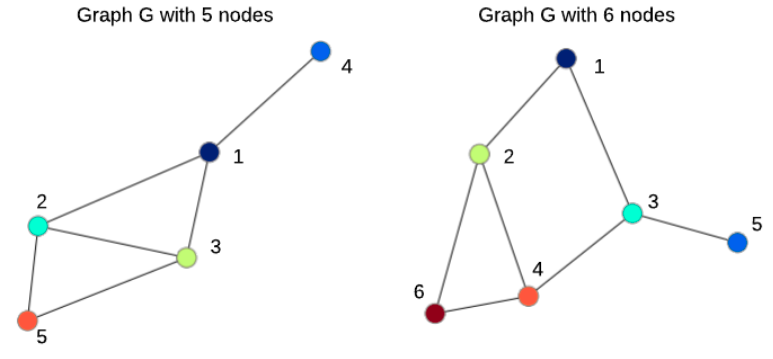

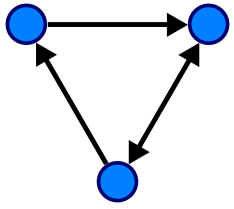

How the edges link the nodes lets us distinguish a directed graph from an undirected one. Simply put, in a directed graph each edge has a direction and can only be followed from its source node to its target node, not the other way around. In an undirected graph, the edges have no direction and can be followed either way.
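The difference can be sketched in a few lines of Python. The `build_adjacency` function and the node names `"A"`, `"B"`, `"C"` are hypothetical, chosen only for this illustration. The sketch stores, for every node, the set of nodes that can be reached over a single edge.

```python
from collections import defaultdict


def build_adjacency(edges, directed):
    """Map each node to the set of nodes reachable over one edge."""
    adjacency = defaultdict(set)
    for source, target in edges:
        adjacency[source].add(target)
        if not directed:
            # An undirected edge can also be followed from target to source.
            adjacency[target].add(source)
    return adjacency


edges = [("A", "B"), ("B", "C")]
directed_graph = build_adjacency(edges, directed=True)
undirected_graph = build_adjacency(edges, directed=False)

print("B" in directed_graph["A"])    # True: the edge A -> B exists
print("A" in directed_graph["B"])    # False: there is no edge B -> A
print("A" in undirected_graph["B"])  # True: the edge works both ways
```

The same edge list produces two different graphs. Only the interpretation of each pair changes.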

Undirected Graph with (left) 5 nodes and (right) 6 nodes, via source

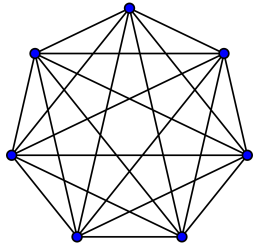

Besides that, there is also a special type of graph in which each node is connected to every other node. This is called a complete graph.
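As a rough sketch, assuming an undirected graph without self-loops, a complete graph on n nodes has one edge per unordered pair of nodes, which gives n(n-1)/2 edges. The helper names `complete_graph_edges` and `is_complete` below are made up for this example.

```python
from itertools import combinations


def complete_graph_edges(nodes):
    """Return every unordered pair of distinct nodes as an edge."""
    return list(combinations(nodes, 2))


def is_complete(nodes, edges):
    """Check that every pair of distinct nodes is joined by an edge."""
    present = {frozenset(edge) for edge in edges}
    return all(frozenset(pair) in present for pair in combinations(nodes, 2))


nodes = ["A", "B", "C", "D"]
edges = complete_graph_edges(nodes)

print(len(edges))                      # 6, i.e. 4 * 3 / 2
print(is_complete(nodes, edges))       # True
print(is_complete(nodes, edges[:-1]))  # False: one pair is no longer connected
```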

Now that we have a brief understanding of graph theory, it is time to see how a graph can be turned into features to train our very own neural network.

Translating Graph into Features for Neural Networks

Every time I googled graphs, I would get images of what graphs look like, just like the images above. But that never answered the biggest question I had: how can those graphs be shaped into features that can be fed into a neural network?
