Tips and tricks to debug your neural network

View interactive report here. All the code is available here.

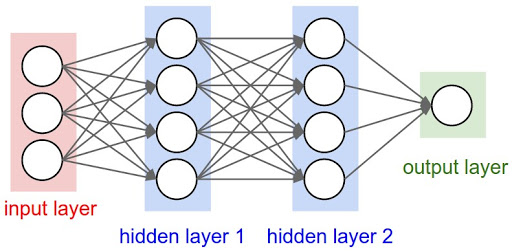

In this post, we’ll see what makes a neural network underperform and how we can debug it by visualizing the gradients and other parameters associated with model training. We’ll also discuss the problem of vanishing and exploding gradients and methods to overcome them. Finally, we’ll see why proper weight initialization is useful, how to do it correctly, and dive into how regularization methods like dropout and batch normalization affect model performance.

Where do neural network bugs come from?

As shown in this piece, neural network bugs are really hard to catch because:

1. The code never crashes, raises an exception, or even slows down.

2. The network still trains and the loss will still go down.

3. The values converge after a few hours, but to really poor results.

I highly recommend reading A Recipe for Training Neural Networks by Andrej Karpathy if you’d like to dive deeper into this topic.

So how can we debug our neural networks better?

There is no decisive set of steps to be followed while debugging neural networks. But here is a list of concepts that, if implemented properly, can help debug your neural networks.

Model Inputs

1. Decisions about data: We must understand the nuances of the data: the type of data, the way it is stored, class balances for targets and features, value scale consistency of data, etc.

2. Data Preprocessing: We must think about data preprocessing and try to incorporate domain knowledge into it. There are usually two occasions when data preprocessing is used:

- Data cleaning: The objective task can be achieved more easily if some parts of the data, known as artifacts, are removed.

- Data augmentation: When we have limited training data, we transform each data sample in numerous ways to be used for training the model (for example, scaling, shifting, or rotating images).

This post does not focus on the issues caused by bad data preprocessing.

3. Overfitting on a small dataset: If we have a small dataset of 50–60 data samples, the model will overfit quickly, i.e., the loss will be zero in 2–5 epochs. This makes a useful sanity check; when running it, be sure to remove any regularization from the model. If your model is not overfitting, it might be because your model is not architected correctly or your choice of loss is incorrect. Maybe your output layer is activated with sigmoid while you were trying to do multi-class classification. These errors can be easy to miss. Check out my notebook demonstrating this [here](https://github.com/ayulockin/debugNNwithWandB/blob/master/MNIST_pytorch_ wandb Overfit Small.ipynb).

So how can one avoid such errors? Keep reading.
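The small-dataset overfitting check from item 3 might look roughly like the PyTorch sketch below. It is not the code from the linked notebook: the random stand-in data, the tiny `nn.Sequential` model, the `overfit_small_batch` helper, and the step count and learning rate are all assumptions made for illustration. The model has no dropout, batch normalization, or weight decay, and its raw logits go into `nn.CrossEntropyLoss`, which is the usual pairing for multi-class classification.

```python
import torch
from torch import nn


def overfit_small_batch(model, inputs, targets, steps=500, lr=1e-2):
    """Train on one tiny fixed batch and return the loss recorded at each step."""
    model.train()
    loss_fn = nn.CrossEntropyLoss()  # expects raw logits, not sigmoid/softmax outputs
    optimizer = torch.optim.Adam(model.parameters(), lr=lr)  # weight decay left at 0
    history = []
    for _ in range(steps):
        optimizer.zero_grad()
        loss = loss_fn(model(inputs), targets)
        loss.backward()
        optimizer.step()
        history.append(loss.item())
    return history


torch.manual_seed(0)

# Hypothetical stand-in data: 50 samples, 20 features, 10 classes, random labels.
inputs = torch.randn(50, 20)
targets = torch.randint(0, 10, (50,))

# Hypothetical model with every form of regularization removed.
model = nn.Sequential(nn.Linear(20, 128), nn.ReLU(), nn.Linear(128, 10))

history = overfit_small_batch(model, inputs, targets)

model.eval()
with torch.no_grad():
    accuracy = (model(inputs).argmax(dim=1) == targets).float().mean().item()

print(f"first loss: {history[0]:.3f}  final loss: {history[-1]:.4f}  accuracy: {accuracy:.2f}")
```

If the final loss stays well above zero or the accuracy on these few samples does not approach 1.0, that points to the kinds of mistakes mentioned above, such as a mismatched output activation or loss. This sketch takes full-batch steps on synthetic data, so the number of steps it needs is not comparable to the 2–5 epochs quoted for a real dataset.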
