English is one of the languages with plenty of training data for translation, while some languages may not have enough data to train a machine translation model. Sennrich et al. used the back-translation method to generate more training data and improve translation model performance.

Suppose we want to train a model for translating English (source language) → Cantonese (target language), and there is not enough parallel training data for Cantonese. Back-translation takes sentences in the target language, translates them into the source language, and pairs each machine-generated source sentence with its original target sentence. These synthetic pairs are then mixed with the original parallel data to train the model, so the amount of training data from the source language to the target language increases.
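To make the idea concrete, here is a minimal sketch of how the synthetic pairs could be assembled. The function names and the toy Cantonese → English lookup table are my own placeholders, not part of any library; in practice `target_to_source` would be a trained translation model.

```python
from typing import Callable, List, Tuple

Pair = Tuple[str, str]  # (source sentence, target sentence)


def back_translate(
    target_sentences: List[str],
    target_to_source: Callable[[str], str],
) -> List[Pair]:
    """Pair each real target sentence with a machine-generated source sentence."""
    return [(target_to_source(t), t) for t in target_sentences]


def build_training_set(
    parallel_pairs: List[Pair],
    monolingual_target: List[str],
    target_to_source: Callable[[str], str],
) -> List[Pair]:
    """Mix the original parallel data with the back-translated pairs."""
    return list(parallel_pairs) + back_translate(monolingual_target, target_to_source)


# Hypothetical stand-in for a trained Cantonese -> English model.
TOY_YUE_TO_EN = {"早晨": "good morning", "多謝": "thank you"}


def toy_target_to_source(sentence: str) -> str:
    return TOY_YUE_TO_EN.get(sentence, "<unk>")


parallel = [("hello", "你好")]   # the small real parallel corpus
monolingual = ["早晨", "多謝"]   # target-language text without translations
training = build_training_set(parallel, monolingual, toy_target_to_source)
```

Note that the target side of every synthetic pair is real text written by humans; only the source side is machine-generated.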

The previous story introduced back-translation as a way to generate synthetic data for NLP tasks, so that we have more data for model training, especially for low-resource NLP tasks and languages.

This story covers how the Facebook AI Research (FAIR) team trains a translation model and how we can leverage the pre-trained model to generate more training data for our own models. By leveraging subword models, large-scale back-translation, and model ensembling, Ng et al. (2019) won at WMT19. They worked on two language pairs and four language directions: English ←→ German (EN ←→ DE) and English ←→ Russian (EN ←→ RU). They demonstrated how to use back-translation to boost model performance. After that, I will show how we can write a few lines of code to generate synthetic data with back-translation. Here are some details about data processing, data augmentation, and the translation model.

Data Processing

Subword

In the early days of NLP, word-level and character-level tokens were used to train models. In state-of-the-art NLP models, subword tokens (in between the word and character level) are the standard choice in the tokenization stage. For example, a model may use “trans” and “lation” to represent “translation” because those fragments occur frequently. You may have a look at 3 different subword algorithms from here. Ng et al. pick byte pair encoding (BPE) with 32K and 24K split operations for EN ←→ DE and EN ←→ RU tokenization respectively.
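To show what a "split operation" (one merge step) means in BPE, here is a toy sketch of the merge-learning loop in the spirit of Sennrich et al. It is not the tokenizer Ng et al. used; the function names and the four-word vocabulary are illustrative assumptions. Each word is stored as space-separated symbols with an end-of-word marker `</w>`.

```python
from collections import Counter
from typing import Dict, List, Tuple


def get_pair_counts(vocab: Dict[str, int]) -> Counter:
    """Count adjacent symbol pairs, weighted by word frequency."""
    pairs = Counter()
    for word, freq in vocab.items():
        symbols = word.split()
        for a, b in zip(symbols, symbols[1:]):
            pairs[(a, b)] += freq
    return pairs


def merge_pair(pair: Tuple[str, str], vocab: Dict[str, int]) -> Dict[str, int]:
    """Replace every occurrence of the pair with one merged symbol."""
    merged_vocab = {}
    for word, freq in vocab.items():
        symbols = word.split()
        out, i = [], 0
        while i < len(symbols):
            if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
                out.append(symbols[i] + symbols[i + 1])
                i += 2
            else:
                out.append(symbols[i])
                i += 1
        merged_vocab[" ".join(out)] = freq
    return merged_vocab


def learn_bpe(vocab: Dict[str, int], num_merges: int):
    """Greedily merge the most frequent pair, num_merges times."""
    merges: List[Tuple[str, str]] = []
    for _ in range(num_merges):
        pairs = get_pair_counts(vocab)
        if not pairs:
            break
        best = max(pairs, key=pairs.get)
        merges.append(best)
        vocab = merge_pair(best, vocab)
    return merges, vocab


# Toy word-frequency table (made up for illustration).
toy_vocab = {
    "l o w </w>": 5,
    "l o w e r </w>": 2,
    "n e w e s t </w>": 6,
    "w i d e s t </w>": 3,
}
merges, final_vocab = learn_bpe(toy_vocab, num_merges=3)
```

With only three merges, the frequent ending of "newest" and "widest" already collapses into a single subword, while rare character sequences stay split. A real setup runs tens of thousands of merges over a full corpus.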

Data Filtering

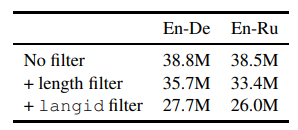

To make sure that only sentence pairs in the correct languages are kept, Ng et al. use langid (Lui and Baldwin, 2012) to filter out invalid data. langid is a language identification tool that tells you which language a piece of text is written in.
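A language filter of this kind can be sketched in a few lines. The helper `filter_by_language` and the toy identifier below are my own illustrative names, and the Cyrillic check is a crude stand-in for a real language identifier, used only to keep the snippet dependency-free.

```python
from typing import Callable, List, Tuple

Pair = Tuple[str, str]  # (source sentence, target sentence)


def filter_by_language(
    pairs: List[Pair],
    src_lang: str,
    tgt_lang: str,
    identify: Callable[[str], str],
) -> List[Pair]:
    """Keep only pairs whose two sides are identified as the expected languages."""
    return [
        (src, tgt)
        for src, tgt in pairs
        if identify(src) == src_lang and identify(tgt) == tgt_lang
    ]


def toy_identify(text: str) -> str:
    """Hypothetical identifier: any Cyrillic character means Russian, else English."""
    return "ru" if any("\u0400" <= ch <= "\u04ff" for ch in text) else "en"


pairs = [
    ("Good morning", "Доброе утро"),
    ("Good night", "Good night"),  # target side was never translated
]
kept = filter_by_language(pairs, "en", "ru", toy_identify)
```

If langid is installed, the real tool can be plugged in with `identify=lambda text: langid.classify(text)[0]`, since `langid.classify` returns a (language code, score) tuple.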

Sentence pairs are excluded from model training if a sentence contains more than 250 tokens or if the length ratio between source and target exceeds 1.5. I suspect that such pairs would introduce too much noise to the model.
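The length rule is simple enough to write down directly. In this sketch I assume whitespace tokenization and a symmetric ratio (longer side divided by shorter side); the function name and both assumptions are mine, and the original pipeline may count tokens differently.

```python
def keep_by_length(src: str, tgt: str, max_tokens: int = 250, max_ratio: float = 1.5) -> bool:
    """Return True if the pair passes the length and length-ratio checks."""
    src_len, tgt_len = len(src.split()), len(tgt.split())
    if src_len == 0 or tgt_len == 0:
        return False  # an empty side cannot form a usable pair
    if src_len > max_tokens or tgt_len > max_tokens:
        return False
    # Assumption: the ratio is symmetric, longer side over shorter side.
    ratio = max(src_len, tgt_len) / min(src_len, tgt_len)
    return ratio <= max_ratio


balanced = keep_by_length("a b c", "x y")     # ratio is exactly 1.5
lopsided = keep_by_length("a b c d", "x y")   # ratio is 2.0
too_long = keep_by_length(" ".join(["w"] * 251), " ".join(["w"] * 251))
```

A pair sitting exactly at the threshold is kept here because the rule only drops ratios that exceed 1.5.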

The data size for different filtering methods (Ng et al., 2019)

The third filtering method targets monolingual data. To keep high-quality monolingual data, Ng et al. adopt the Moore-Lewis method (Moore and Lewis, 2010) for removing noisy data from the larger corpus. In short, Moore and Lewis score each sentence by the difference in cross-entropy between a language model trained on the in-domain (source) data and a language model trained on the larger corpus. After the high-quality sentences are selected, the back-translation model translates them to generate synthetic training pairs for the translation model.
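Here is a toy sketch of the cross-entropy difference score. To keep it short I use add-one smoothed unigram language models, which is my simplification; real setups use much stronger language models. All function names and the tiny corpora are made up for illustration. A lower score means the sentence looks more like the in-domain data.

```python
import math
from collections import Counter
from typing import Callable, List, Set


def train_unigram(sentences: List[str], vocab: Set[str]) -> Callable[[str], float]:
    """Return a log-probability function for an add-one smoothed unigram LM."""
    counts = Counter(tok for s in sentences for tok in s.split())
    total = sum(counts.values()) + len(vocab) + 1  # +1 reserves mass for unseen tokens

    def logprob(token: str) -> float:
        return math.log((counts[token] + 1) / total)

    return logprob


def cross_entropy(sentence: str, logprob: Callable[[str], float]) -> float:
    """Average negative log-probability per token (sentence must be non-empty)."""
    tokens = sentence.split()
    if not tokens:
        raise ValueError("sentence must contain at least one token")
    return -sum(logprob(t) for t in tokens) / len(tokens)


def moore_lewis_score(sentence: str, in_lm, general_lm) -> float:
    """Cross-entropy under the in-domain LM minus cross-entropy under the general LM."""
    return cross_entropy(sentence, in_lm) - cross_entropy(sentence, general_lm)


in_domain = ["the minister met the president", "the parliament passed the law"]
general = ["buy cheap watches online now", "click here to win a prize", "the weather is nice"]
vocab = {tok for s in in_domain + general for tok in s.split()}

in_lm = train_unigram(in_domain, vocab)
general_lm = train_unigram(general, vocab)

news_like = moore_lewis_score("the president passed the law", in_lm, general_lm)
spam_like = moore_lewis_score("click here to buy watches", in_lm, general_lm)
```

Sorting the monolingual corpus by this score and keeping the lowest-scoring sentences (below a threshold you choose) gives the filtered data that is then back-translated.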
