This report has been prepared by Somayeh Gholami and Mehran Kazeminia.

Currently, machine learning techniques can only use patterns they have already seen. That is, certain patterns are first set for the machines, which are then exposed to the relevant data so that they can learn new skills. But could machines in the future, like humans, answer reasoning questions they have never seen before? Could they learn complex and abstract tasks from just a few examples? This was exactly the theme of the Abstraction and Reasoning Challenge, which ended recently and is one of Kaggle’s most controversial challenges. Participants were given three months to develop artificial intelligence that can solve reasoning questions it has not seen before. Introducing the contest, Kaggle wrote:

“It provides a glimpse of a future where AI could quickly learn to solve new problems on its own. The Kaggle Abstraction and Reasoning Challenge invites you to try your hand at bringing this future into the present!”

The reasoning questions in this challenge resembled human intelligence tests and ranged from simple to medium and, occasionally, rather difficult. An ordinary person could answer all of them within a reasonable time, and none were extremely complex. The challenge was how to teach machines reasoning concepts such as color change, resizing, and reordering, so that they could pass a human intelligence test they had never seen before.
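To give a feel for the kinds of concepts mentioned above, here is a minimal Python sketch. It assumes a puzzle can be represented as a small grid of integer color codes; the function names (`recolor`, `upscale`, `reverse_rows`) and the toy grid are hypothetical illustrations, not part of the competition's code or any participant's solution.

```python
# Assumption: a grid is a list of rows, each cell an integer color code.

def recolor(grid, mapping):
    """Color change: replace each cell according to a color mapping."""
    return [[mapping.get(cell, cell) for cell in row] for row in grid]

def upscale(grid, factor):
    """Resize: repeat every cell `factor` times horizontally and vertically."""
    return [
        [cell for cell in row for _ in range(factor)]
        for row in grid
        for _ in range(factor)
    ]

def reverse_rows(grid):
    """Change the order: flip the grid top to bottom."""
    return grid[::-1]

# Hypothetical toy grid (0 = background, 1 and 2 = two colors).
example = [[1, 0],
           [0, 2]]

print(recolor(example, {1: 3}))
print(upscale(example, 2))
print(reverse_rows(example))
```

Each of these rules is trivial once it is named. The hard part of the challenge is that the machine is never told which rule applies and has to infer it from a handful of input and output examples.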

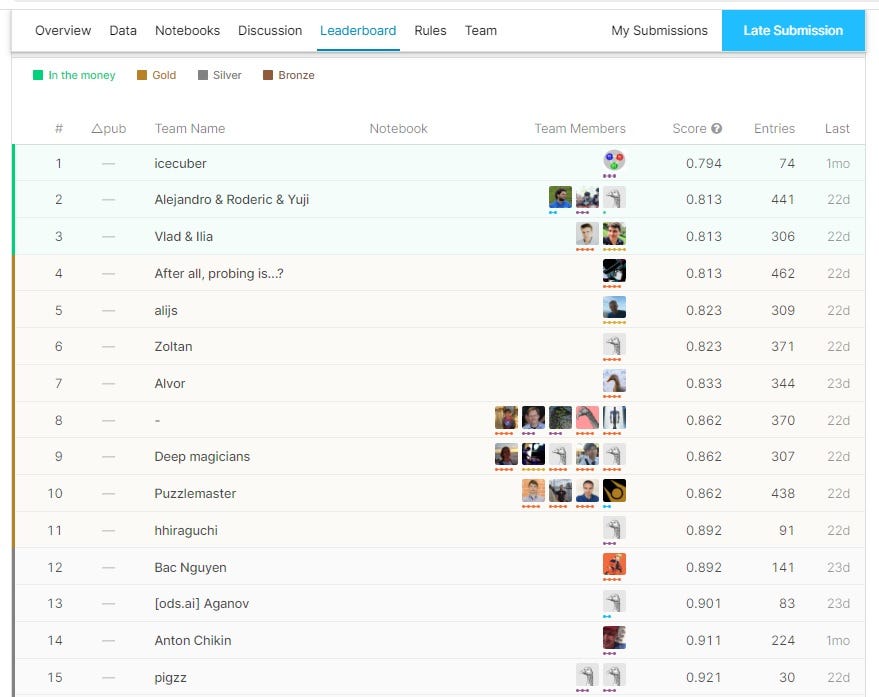

The total prize for this competition was twenty thousand dollars, divided among the top three teams. But as expected, even the results of the teams at the top of the list were not promising. The challenge involved nearly a thousand participants, half of whom did not answer any of the questions correctly. Scores measured error, so lower was better: a team whose algorithm did not work at all received a score of one, while a team that answered a few questions correctly received, for example, a score of 0.98. Only twelve teams managed to score below 0.90. The following is the final table of scores for the top thirty teams.
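As a brief aside before the table, the scoring described above can be read as an error rate. The sketch below is a simplified assumption: it treats the score as the fraction of questions answered incorrectly, with one guess per question. The official metric may differ in detail, and the function name `error_score` and the sample data are hypothetical.

```python
def error_score(predictions, answers):
    """Fraction of questions answered incorrectly (lower is better).

    Simplifying assumption: exactly one prediction per question.
    """
    if len(predictions) != len(answers):
        raise ValueError("predictions and answers must have the same length")
    wrong = sum(1 for p, a in zip(predictions, answers) if p != a)
    return wrong / len(answers)

# Hypothetical data: 100 questions, identified here only by placeholder answers.
answers = list(range(100))

# An algorithm that solves nothing scores 1.0.
nothing_solved = [-1] * 100
print(error_score(nothing_solved, answers))

# An algorithm that solves 2 of the 100 questions scores 0.98.
two_solved = answers[:2] + [-1] * 98
print(error_score(two_solved, answers))
```

Under this reading, a score below 0.90 means solving more than one question in ten.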

#reasoning #machine-learning #abstraction #measure-of-intelligence #kaggle-competition