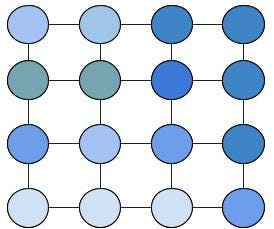

This post summarizes graph convolutional neural networks (GCNNs) as presented in the paper “From Graph Filters to Graph Neural Networks”. A graph is a structure that links entities, called nodes, through relationships, called edges. Graphs are everywhere: from the bonds between atoms to friendships on Facebook, all of these scenarios can be represented as a graph. In that sense, graphs are pervasive models of structure. So far, however, we are mostly good at running convolutional neural networks (CNNs) on regularly structured graphs such as images.

Fig 1. An image as a structured graph

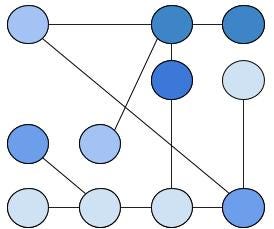

The graphs we actually want to run neural networks on, however, look more like this.

Fig 2. An unstructured graph. Real ones are more complex.

Graph neural networks are the tool of choice for machine learning on graphs. To build a layered graph (convolutional) neural network, we first need to generalize convolution to graphs.

Convolutions on Graphs

We already know how to convolve signals in time and in space (images). Both can be viewed as structured graphs: a signal in time can be visualized as a chain of nodes, like a linked list, while an image is a grid of nodes, as shown in Fig 1. Let me define the convolution in matrix form for the list-like graph. It is equal to:

$$y = h(0)\,S^0 x + h(1)\,S^1 x + h(2)\,S^2 x + \dots = \sum_{i \ge 0} h(i)\,S^i x$$

Convolution output

where S¹ is a shift by one, S² is a shift by two, and so on; h(i) is the i-th filter coefficient and x is our signal. Notice that in matrix form the convolution is just a weighted sum of shifted copies of the signal, with each shift applied as a matrix product. The same observation holds for image convolution (yes, it is the same as in the link given). Convolution on a graph can be written in exactly the same form, with S now being the graph's adjacency matrix.
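To make the idea concrete, here is a minimal NumPy sketch of that weighted sum of shifts. It is an illustration under my own assumptions, not code from the paper: the helper name `graph_filter`, the cyclic (wrap-around) shift used for the time signal, the filter taps `h`, and the small 4-node graph are all made up for the example.

```python
import numpy as np

def graph_filter(S, h, x):
    """Compute sum_i h[i] * (S^i @ x) by shifting the signal repeatedly."""
    y = np.zeros_like(x, dtype=float)
    shifted = x.astype(float)        # S^0 x, i.e. the unshifted signal
    for coeff in h:
        y += coeff * shifted         # add the current shift, weighted by h(i)
        shifted = S @ shifted        # move on to the next shift: S^(i+1) x
    return y

h = [0.5, 0.3, 0.2]                  # hypothetical filter coefficients h(0), h(1), h(2)

# Case 1: a time signal. S is a cyclic shift, so (S x)[k] = x[k-1] (wrapping around).
n = 5
S_cycle = np.roll(np.eye(n), 1, axis=0)
x_time = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
y_time = graph_filter(S_cycle, h, x_time)

# Case 2: an arbitrary graph. S is the adjacency matrix of a small undirected graph.
A = np.array([
    [0, 1, 1, 0],
    [1, 0, 1, 0],
    [1, 1, 0, 1],
    [0, 0, 1, 0],
], dtype=float)
x_graph = np.array([1.0, 0.0, 0.0, 0.0])   # signal that is non-zero only at node 0
y_graph = graph_filter(A, h, x_graph)
```

With the cyclic shift, `y_time` matches an ordinary circular convolution of `x_time` with `h`. Swapping in the adjacency matrix changes nothing in the function; only the meaning of "shift" changes, from "move one step in time" to "collect values from neighbouring nodes".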
