In my previous article, I discussed Gradient Boosting for Regression and walked through a working Python example. In this article, I would like to discuss how Gradient Boosting works for Classification. If you have not read that article, that’s all right, because I will recap the key ideas here anyway. So, let’s get started!

Ensemble Methods

Often you have a few good predictors and would like to use them all, instead of painfully choosing one because it scores 0.0001 higher in accuracy. In comes _Ensemble Learning_. In Ensemble Learning, instead of relying on a single predictor, multiple predictors are trained on the data and their predictions are aggregated, which usually gives a better score than any single model. A Random Forest, for instance, is an ensemble of Decision Trees, typically trained via bagging (or sometimes pasting).
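To make "aggregating their predictions" concrete, here is a minimal sketch of hard (majority) voting over three classifiers. The labels and predictions are made-up toy values rather than output from real models, and `majority_vote` and `accuracy` are hypothetical helpers written for illustration, not a library API.

```python
from collections import Counter
from typing import List, Sequence


def majority_vote(predictions: Sequence[Sequence[int]]) -> List[int]:
    """Hard voting: for each sample, return the label most models predicted."""
    # zip(*predictions) regroups the votes per sample instead of per model
    return [Counter(votes).most_common(1)[0][0] for votes in zip(*predictions)]


def accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Fraction of samples whose predicted label matches the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


# Hypothetical toy labels and predictions from three models;
# each model makes exactly one mistake, on a different sample.
y_true = [0, 1, 1, 0, 1]
model_preds = [
    [1, 1, 1, 0, 1],  # model A: wrong on sample 0
    [0, 0, 1, 0, 1],  # model B: wrong on sample 1
    [0, 1, 1, 1, 1],  # model C: wrong on sample 3
]

for name, preds in zip("ABC", model_preds):
    print(f"model {name}: accuracy = {accuracy(y_true, preds):.1f}")

ensemble = majority_vote(model_preds)
print("ensemble:", ensemble, f"accuracy = {accuracy(y_true, ensemble):.1f}")
```

Each model alone is right on 4 of 5 samples, while the vote is right on all 5. This only works because the models make their mistakes on different samples; if they all erred on the same sample, voting could not fix it.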

You can think of Ensemble Methods as an orchestra: instead of just one person playing an instrument, multiple people play different instruments, and by combining all of them, the music generally sounds better than it would if it were played by a single person.

While Gradient Boosting is an Ensemble Learning method, it is more specifically a _Boosting_ technique. So, what’s Boosting?

Boosting

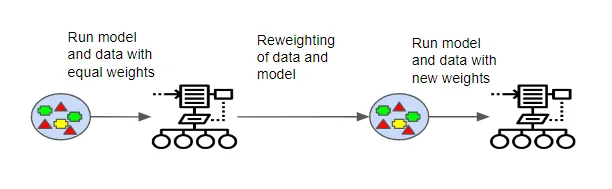

_Boosting_ is a special type of Ensemble Learning technique that combines several _weak learners_ (predictors with poor accuracy) into a strong learner (a model with high accuracy). It works by training the predictors sequentially, with each new model paying attention to the mistakes of its predecessor.
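The sequential idea can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: squared error as the loss, one-split regression stumps as the weak learners, and made-up toy data. Each new stump is trained on the residuals (the remaining mistakes) of the ensemble built so far, which is the regression flavor of gradient boosting; AdaBoost instead reweights the samples its predecessors got wrong. The names `fit_stump`, `mse` and `boost` are hypothetical helpers, not a library API.

```python
from typing import Callable, List, Optional, Sequence, Tuple

Model = Callable[[float], float]


def fit_stump(xs: Sequence[float], targets: Sequence[float]) -> Model:
    """Fit a one-split regression stump (a weak learner) by least squares.

    Assumes xs contains at least two distinct values.
    """
    best_err = float("inf")
    best: Optional[Tuple[float, float, float]] = None
    # Try each distinct x value (except the largest) as the split point.
    for threshold in sorted(set(xs))[:-1]:
        left = [t for x, t in zip(xs, targets) if x <= threshold]
        right = [t for x, t in zip(xs, targets) if x > threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        err = sum((t - left_mean) ** 2 for t in left) + sum(
            (t - right_mean) ** 2 for t in right
        )
        if err < best_err:
            best_err, best = err, (threshold, left_mean, right_mean)
    assert best is not None, "need at least two distinct x values"
    threshold, left_mean, right_mean = best
    # The stump predicts the mean target on each side of the split.
    return lambda x: left_mean if x <= threshold else right_mean


def mse(model: Model, xs: Sequence[float], ys: Sequence[float]) -> float:
    """Mean squared error of a model on the given samples."""
    return sum((y - model(x)) ** 2 for x, y in zip(xs, ys)) / len(xs)


def boost(
    xs: Sequence[float], ys: Sequence[float], n_rounds: int
) -> Tuple[Model, List[float]]:
    """Train stumps sequentially, each one on the current ensemble's mistakes."""
    stumps: List[Model] = []

    def ensemble(x: float) -> float:
        # The ensemble's prediction is the sum of all stumps fitted so far
        # (zero before the first round).
        return sum(stump(x) for stump in stumps)

    history = [mse(ensemble, xs, ys)]
    for _ in range(n_rounds):
        # Residuals: how far the current ensemble is from each target.
        residuals = [y - ensemble(x) for x, y in zip(xs, ys)]
        stumps.append(fit_stump(xs, residuals))
        history.append(mse(ensemble, xs, ys))
    return ensemble, history


# Hypothetical toy data: a noisy, roughly increasing trend.
xs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
ys = [1.0, 1.2, 2.9, 3.1, 5.2, 4.8]

model, history = boost(xs, ys, n_rounds=3)
for round_, err in enumerate(history):
    print(f"after {round_} stump(s): training MSE = {err:.3f}")
```

Because every stump is fitted to what the previous stumps still get wrong, the training error can only go down (or stay the same) from one round to the next. Practical gradient boosting implementations usually also start from a constant prediction (such as the mean target) and scale each new learner by a learning rate; both are left out here to keep the sketch short.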

The two most popular boosting methods are:

- Adaptive Boosting (you can read my article about it here)

- Gradient Boosting

We will be discussing Gradient Boosting.
