This post is the second of three sequential articles on the steps to build a sentiment classifier. Following our exploratory text analysis in the first post, it’s time to preprocess our text data. Simply put, preprocessing text data means applying a series of operations that convert the text into tabular numeric data. In this post, we will look at three ways of varying complexity to preprocess text into a _tf-idf_ matrix in preparation for a model. If you are unsure what tf-idf is, this post explains it with a simple example.
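
To make "text into tabular numeric data" concrete, here is a minimal, standard-library-only sketch of a tf-idf matrix. It assumes the plain textbook weighting (tf = raw term count, idf = ln(N / df)) and a deliberately crude tokenizer; the function names `tokenize` and `tfidf_matrix` and the three sample reviews are hypothetical illustrations, not the preprocessing used later in this series.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphabetic tokens."""
    return re.findall(r"[a-z]+", text.lower())


def tfidf_matrix(documents):
    """Return (vocabulary, rows) with one row of tf-idf weights per document.

    tf  = raw count of the term in the document
    idf = ln(N / df), where N is the number of documents and
          df is the number of documents containing the term
    """
    tokenized = [tokenize(doc) for doc in documents]
    vocabulary = sorted({token for tokens in tokenized for token in tokens})
    n_docs = len(documents)
    # Document frequency: count each term at most once per document.
    df = Counter(token for tokens in tokenized for token in set(tokens))
    rows = []
    for tokens in tokenized:
        counts = Counter(tokens)
        rows.append([counts[term] * math.log(n_docs / df[term]) for term in vocabulary])
    return vocabulary, rows


reviews = ["I love this movie", "I hate this movie", "What a great movie"]
vocabulary, matrix = tfidf_matrix(reviews)
print(vocabulary)
# ['a', 'great', 'hate', 'i', 'love', 'movie', 'this', 'what']
print([round(weight, 3) for weight in matrix[0]])
# [0.0, 0.0, 0.0, 0.405, 1.099, 0.0, 0.405, 0.0]
```

Each review becomes one row and each vocabulary term one column. Because "movie" appears in every review, its idf is ln(3/3) = 0 and it carries no weight, which is exactly how tf-idf down-weights terms that do not help tell documents apart. Note that scikit-learn's `TfidfVectorizer` uses a smoothed idf and L2-normalises each row by default, so its numbers will differ from this sketch.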

Photo by Domenico Loia on Unsplash

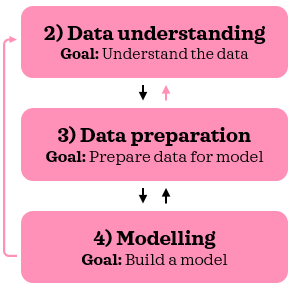

Before we dive in, let’s quickly step back and look at the bigger picture. The CRISP-DM methodology outlines the process flow for a successful data science project. _Preprocessing data_ is one of the key tasks in the **data preparation** stage.

Extract from CRISP-DM process flow

0. Python setup

This post assumes that the reader (👀 yes, you!) has access to and is familiar with Python, including installing packages, defining functions and other basic tasks. If you are new to Python, this is a good place to get started.

I have tested the scripts in Python 3.7.1 in Jupyter Notebook.

Let’s make sure you have the following libraries installed before we start:

◼️ Data manipulation/analysis: numpy, pandas

◼️ Data partitioning: sklearn (_scikit-learn_ when installing)

◼️ Text preprocessing/analysis: nltk

◼️ Spelling checker: spellchecker (_pyspellchecker_ when installing)
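
One way to confirm these libraries are available before starting is `importlib.util.find_spec`, which looks a module up without importing it. This is a small sketch of that idea; the helper name `missing_modules` is our own, and it checks the import names (`sklearn`, `spellchecker`), not the pip package names.

```python
import importlib.util

# Import names of the libraries listed above. Two differ from their pip names:
# sklearn is installed as "scikit-learn", spellchecker as "pyspellchecker".
REQUIRED_MODULES = ["numpy", "pandas", "sklearn", "nltk", "spellchecker"]


def missing_modules(modules=REQUIRED_MODULES):
    """Return the modules from `modules` that cannot be found in this environment."""
    return [name for name in modules if importlib.util.find_spec(name) is None]


missing = missing_modules()
if missing:
    print("Please install:", ", ".join(missing))
else:
    print("All required libraries are installed.")
```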

Once you have _nltk_ installed, please make sure you have downloaded the ‘stopwords’ and ‘wordnet’ corpora from _nltk_.
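
A minimal sketch of that download step is shown below. Wrapping `nltk.download` in a function (the name `download_nltk_corpora` is hypothetical) is our own choice: it keeps `import nltk` inside the function so the definition still loads before _nltk_ is installed, and it reports whether each download succeeded.

```python
def download_nltk_corpora(corpora=("stopwords", "wordnet")):
    """Download the given nltk corpora and return {name: success_flag}."""
    import nltk  # installed with `pip install nltk`

    # quiet=True suppresses the progress messages; nltk.download returns
    # True on success, including when the corpus is already up to date.
    return {name: nltk.download(name, quiet=True) for name in corpora}
```

Calling `download_nltk_corpora()` once per environment should be enough, since the data is saved to nltk's default data directory and reused afterwards.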
