In this tutorial, we will look at how to scrape dynamic web pages. Unlike static web pages, dynamic web pages use JavaScript to load their data, often from a database, so the information they display can change frequently.

If you try to scrape a dynamic web page with rvest, the approach we used for static web pages, you may not be able to obtain information that is generated by JavaScript, because rvest only downloads and parses the page's HTML and does not run its scripts. To overcome this, we can use an additional library, RSelenium, which controls a real web browser.
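To see the problem concretely, here is a small self-contained sketch, assuming rvest 1.0 or later is installed (for `html_element()`). The HTML string is a made-up page whose `#price` element is filled in by a script. Because rvest parses the HTML without executing JavaScript, the element comes back empty.

```r
library(rvest)

# A made-up page: the <div> is empty in the raw HTML, and only a browser
# running the <script> would fill it with "42"
html <- '<html><body>
  <div id="price"></div>
  <script>document.getElementById("price").textContent = "42";</script>
</body></html>'

# read_html() treats a string containing markup as literal HTML
page <- read_html(html)

# rvest does not execute JavaScript, so the div is still empty
price <- page %>% html_element("#price") %>% html_text()
print(price)
```

The printed value is an empty string rather than "42", which is exactly the gap RSelenium fills by letting a browser run the script first.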

To begin, install and load the RSelenium package together with the other libraries we previously used for static web pages.
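A minimal setup sketch follows. It assumes the "other libraries" from the static-page tutorial are rvest and tidyverse; adjust the `pkgs` vector if you used different ones. It installs only the packages that are missing, then loads them.

```r
# Packages for this tutorial; rvest and tidyverse are an assumption about
# which libraries the static-page tutorial used
pkgs <- c("RSelenium", "rvest", "tidyverse")

# Install only the packages that are not already available
missing_pkgs <- pkgs[!vapply(pkgs, requireNamespace, logical(1), quietly = TRUE)]
if (length(missing_pkgs) > 0) install.packages(missing_pkgs)

library(RSelenium)
library(rvest)
library(tidyverse)
```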


The RSelenium library lets us open a browser and send it commands to obtain the desired information. In this example, I will be using the Google Chrome browser.

We first start a Selenium server and browser with the **rsDriver()** function, setting the browser type and the port number we want the server to run on.
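Here is a sketch of a full session, assuming Chrome and a Java runtime are installed so that rsDriver() can start Selenium. The port 4545L is an arbitrary free port, the URL is a placeholder for the page you want to scrape, and the fixed 3-second pause is a crude assumption about how long the page's JavaScript needs to finish.

```r
library(RSelenium)
library(rvest)

# Start a Selenium server plus a Chrome session on an arbitrary free port
rD <- rsDriver(browser = "chrome", port = 4545L, verbose = FALSE)
remDr <- rD$client  # the remoteDriver object we send commands to

# Placeholder URL: replace it with the dynamic page you want to scrape
remDr$navigate("https://www.example.com")

# Crude wait so JavaScript can render the page (3 s is an assumption)
Sys.sleep(3)

# Grab the HTML *after* JavaScript has run and hand it to rvest
page_source <- remDr$getPageSource()[[1]]
page <- read_html(page_source)
page_title <- page %>% html_element("title") %>% html_text()
print(page_title)

# Clean up: close the browser and stop the Selenium server
remDr$close()
rD$server$stop()
```

Passing the browser's page source to read_html() means the rvest selectors from the static-page tutorial can be reused unchanged on the rendered page.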

For more information, see the RSelenium reference manual on CRAN: https://cran.r-project.org/web/packages/RSelenium/RSelenium.pdf.

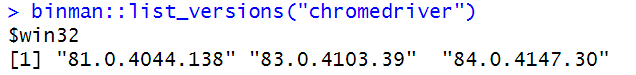

At times, the Selenium server and browser may fail to start because the ChromeDriver version does not match the installed version of Google Chrome. A good way to find a workable version is to first list the ChromeDriver versions available locally with the binman::list_versions("chromedriver") function, then pass a matching version to the chromever argument of rsDriver().
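The sketch below assumes rsDriver() has already run at least once, so binman has downloaded some ChromeDriver versions. It also assumes the first platform entry returned by list_versions() is the relevant one, and `chrome_major` is a hypothetical placeholder for the major version of your installed Chrome (shown at chrome://version).

```r
library(RSelenium)

# ChromeDriver versions binman has downloaded, grouped by platform
versions <- binman::list_versions("chromedriver")
print(versions)

# Hypothetical placeholder: replace "85" with your Chrome's major version
chrome_major <- "85"

# Keep the versions (first platform entry, an assumption) whose major
# number matches Chrome's
candidates <- grep(paste0("^", chrome_major, "\\."), versions[[1]], value = TRUE)
print(candidates)

if (length(candidates) > 0) {
  # Start the driver with an explicit ChromeDriver version
  rD <- rsDriver(browser = "chrome", chromever = candidates[1],
                 port = 4546L, verbose = FALSE)
  rD$client$close()
  rD$server$stop()
}
```

If `candidates` is empty, none of the downloaded drivers matches your Chrome, and a matching ChromeDriver has to be obtained first.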
