Data is the lifeblood of a business. It comes in varying shapes and sizes, which makes finding the means to process and consume it a constant challenge; until it is processed, it holds little value. This article looks at how to leverage Apache Spark’s parallel analytics capabilities to iteratively cleanse and transform schema-drifted CSV files into queryable relational data that can be stored in a data warehouse. We will work in a Spark environment and write code in PySpark to achieve our transformation goal.
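To make "schema drift" concrete before we start: it means that files from the same feed do not share the same columns, because columns were added, dropped, or reordered over time. The following is a minimal sketch in plain Python (standard library only, not Spark) that aligns two drifted CSV batches onto one uniform set of columns. The sample data, column names, and the helper names `read_rows` and `align_schemas` are hypothetical and serve only as illustration.

```python
import csv
import io

# Hypothetical sample data: the second file gained a "discount" column
# and lost "region", which is what schema drift looks like in practice.
JAN_CSV = "order_id,region,amount\n1,EU,10.5\n2,US,7.0\n"
FEB_CSV = "order_id,amount,discount\n3,12.0,0.1\n"


def read_rows(text):
    """Parse CSV text into a list of dicts keyed by that file's own header."""
    return list(csv.DictReader(io.StringIO(text)))


def align_schemas(*batches):
    """Merge batches with differing columns into one uniform schema.

    Columns are kept in first-seen order; values missing from a batch
    are filled with None.
    """
    columns = []
    for batch in batches:
        for row in batch:
            for name in row:
                if name not in columns:
                    columns.append(name)
    merged = [
        {name: row.get(name) for name in columns}
        for batch in batches
        for row in batch
    ]
    return columns, merged


columns, rows = align_schemas(read_rows(JAN_CSV), read_rows(FEB_CSV))
print(columns)
for row in rows:
    print(row)
```

In PySpark, a comparable alignment is commonly done with `DataFrame.unionByName(other, allowMissingColumns=True)` (available from Spark 3.1), which fills columns missing from either side with nulls. Whether that fits a given pipeline depends on how the files have drifted.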

Disclaimer and Terms of use

Please read our terms of use before proceeding with this article.

Caution

Microsoft Azure is a paid service, and following this article can cause financial liability to you or your organization.

Prerequisites

1. An active Microsoft Azure subscription

2. Azure Data Lake Storage Gen2 storage with CSV files

3. Azure Databricks Workspace (Premium Pricing Tier)

4. Azure Synapse Analytics data warehouse

If you don’t have the prerequisites set up yet, refer to our previous articles to get started.

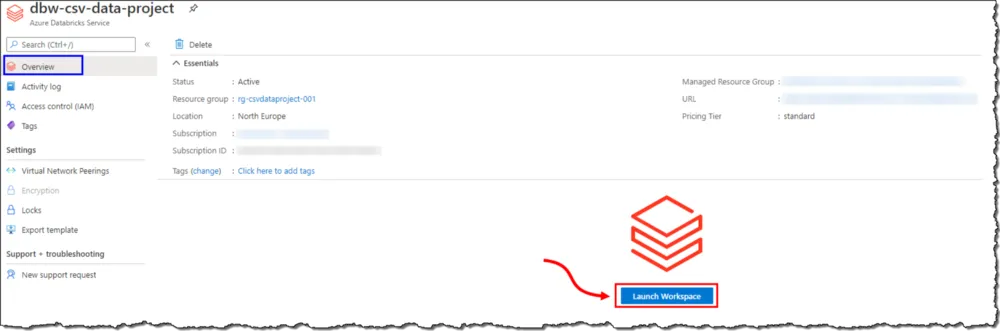

Sign in to the Azure Portal, locate and open your Azure Databricks instance, and click ‘Launch Workspace.’ The Databricks workspace will open in a new browser tab; wait for Azure AD SSO to sign you in automatically.
