It is almost trite at this point to espouse the potential of machine learning in the medical field. There is a plethora of examples to support this claim. One is Microsoft's use of medical imaging data to help clinicians and radiologists make accurate cancer diagnoses. At the same time, the development of sophisticated AI algorithms has drastically improved the accuracy of such diagnoses. These applications of medical data are undoubtedly impressive, and there is every reason to be excited about their benefits.

However, many of these cutting-edge algorithms are black boxes whose decisions can be difficult, if not impossible, to interpret. A deep neural network is one example: a single decision is made only after the input data passes through a network that may contain millions of neurons. Because such black-box models do not let clinicians check a diagnosis against their own knowledge and experience, model-based diagnoses become less trustworthy.

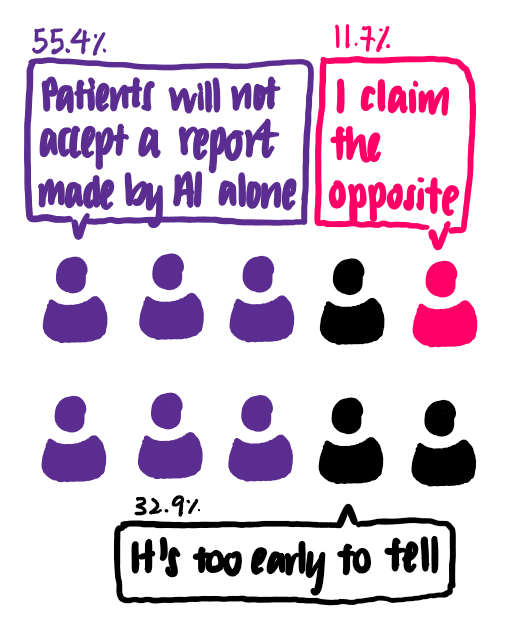

In fact, a recent survey of radiologists in Europe paints a realistic picture of how black-box models are received in radiology. The survey shows that more than half of the respondents (55.4%) think that patients will not accept a purely AI-based application without the supervision of a physician. [1]
