This lesson covers how to prepare data and turn it into trained machine learning models. It also introduces ensemble learning and automated machine learning.

Data Import & Transformation

Data Wrangling:

Cleaning, restructuring, and enriching data to transform it into a format suitable for training machine learning models. This is an iterative process.

Steps:

- Discovery & Exploration of data

- Transformation of raw data

- Publish data

Managing Data:

Datastores:

A layer of abstraction. A datastore holds all the information needed to connect to a particular storage service.

Datasets:

Resources for exploring, transforming, and managing data. A dataset is a reference to a point in storage.

Data Access Workflow:

- Create a datastore

- Create a dataset

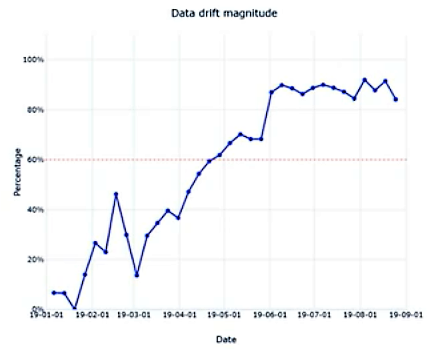

- Create a dataset monitor (critical to detecting issues in data, e.g. Data Drift)

Introducing Features

- Feature Selection

- Dimensionality Reduction

Feature Engineering

- Core techniques

- Feature engineering can be applied in various places: 1) datastores 2) a Python library 3) during model training

- Used more often in classical machine learning

Feature Engineering Tasks:

- Aggregation: sum, mean, count, median, etc. (mathematical formulas)

- Part-of: extract a part of a certain data structure, e.g. a part of date like hour

- Binning: group entities into bins and apply aggregations on them

- Flagging: deriving Boolean conditions

- Frequency-based: calculate the various frequencies of occurrence of data

- **Embedding or Feature Learning:** a relatively low-dimensional space into which you can translate high-dimensional vectors

- Deriving by Example: aim to learn values of new features using examples of existing features
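Several of the tasks above can be sketched with pandas. This is a minimal illustration, not the course's own code: the `trips` table, its column names, the rush-hour definition, and the fare bin edges are all made up for the example.

```python
import pandas as pd

# Hypothetical toy data: one row per taxi trip.
trips = pd.DataFrame({
    "pickup_time": pd.to_datetime([
        "2021-03-01 08:15", "2021-03-01 17:40",
        "2021-03-02 08:05", "2021-03-02 23:30",
    ]),
    "zone": ["A", "B", "A", "C"],
    "fare": [12.0, 30.0, 9.0, 55.0],
})

# Part-of: extract the hour from the timestamp.
trips["pickup_hour"] = trips["pickup_time"].dt.hour

# Flagging: derive a Boolean condition (rush-hour hours are an assumption).
trips["is_rush_hour"] = trips["pickup_hour"].isin([7, 8, 9, 16, 17, 18])

# Binning: group fares into labelled ranges (bin edges are an assumption).
trips["fare_bin"] = pd.cut(
    trips["fare"], bins=[0, 10, 40, 100], labels=["low", "mid", "high"]
)

# Aggregation: mean fare per zone, broadcast back onto each row.
trips["zone_mean_fare"] = trips.groupby("zone")["fare"].transform("mean")

# Frequency-based: how many times each zone occurs in the data.
trips["zone_count"] = trips["zone"].map(trips["zone"].value_counts())

print(trips)
```

Each new column is an engineered feature that a model can use alongside, or instead of, the raw columns.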

Feature Selection

Given an initial dataset, create a number of new features, then identify the useful ones and filter out the rest.

Reasons for Feature Selection:

1. Eliminate redundant, irrelevant, or highly correlated features

2. Dimensionality reduction
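As a rough sketch of the first reason, the snippet below drops one feature from each highly correlated pair using NumPy. The helper `drop_highly_correlated`, the 0.95 threshold, and the synthetic height/weight data are assumptions made for illustration. It is one simple greedy strategy, not a prescribed method.

```python
import numpy as np

def drop_highly_correlated(X, names, threshold=0.95):
    """Greedily keep a column only if its absolute Pearson correlation
    with every already-kept column is at or below the threshold."""
    corr = np.abs(np.corrcoef(X, rowvar=False))
    kept = []
    for j in range(X.shape[1]):
        if all(corr[j, k] <= threshold for k in kept):
            kept.append(j)
    return [names[j] for j in kept]

# Synthetic data: height in inches is redundant with height in centimetres.
rng = np.random.default_rng(0)
height_cm = rng.normal(170, 10, size=200)
height_in = height_cm / 2.54
weight_kg = rng.normal(70, 12, size=200)

X = np.column_stack([height_cm, height_in, weight_kg])
selected = drop_highly_correlated(X, ["height_cm", "height_in", "weight_kg"])
print(selected)
```

Which member of a correlated pair survives depends on column order here. In practice you would usually keep the feature that is easier to interpret or cheaper to collect.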

Dimensionality Reduction Algorithms:

- **Principal Component Analysis (PCA):** A linear technique that takes a more statistical approach.

- **t-Distributed Stochastic Neighbor Embedding (t-SNE):** A probabilistic approach to reducing dimensionality. The target number of dimensions with t-SNE is usually 2–3. It is widely used for visualizing data.

- **Feature Embedding:** Train a separate machine learning model to encode a large number of features into a smaller number of features, also called super-features.

All the above are also considered to be Feature Learning techniques.
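To make the PCA entry concrete, here is a minimal sketch that implements PCA with NumPy's singular value decomposition. The function name `pca` and the synthetic five-feature dataset are assumptions for the example. A library implementation such as scikit-learn's would normally be used instead.

```python
import numpy as np

def pca(X, n_components):
    """Project X onto its top principal components.

    Returns the projected data and the fraction of total variance
    explained by each kept component."""
    X_centered = X - X.mean(axis=0)
    # Rows of Vt are the principal directions, ordered by singular value.
    _, S, Vt = np.linalg.svd(X_centered, full_matrices=False)
    components = Vt[:n_components]
    explained_variance_ratio = (S ** 2)[:n_components] / np.sum(S ** 2)
    return X_centered @ components.T, explained_variance_ratio

# Synthetic data: 5 observed features driven by only 2 underlying factors.
rng = np.random.default_rng(0)
latent = rng.normal(size=(300, 2))
mixing = rng.normal(size=(2, 5))
X = latent @ mixing + 0.01 * rng.normal(size=(300, 5))

Z, ratio = pca(X, n_components=2)
print(Z.shape, ratio)
```

Because the five columns are almost entirely linear combinations of two factors, two components retain nearly all of the variance.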

Azure Machine Learning Prebuilt Modules Available:

- **Filter-Based Feature Selection:** Helps identify the columns in the input dataset that have the greatest predictive power

- **Permutation Feature Importance:** Helps determine the best features to use in a model by computing a set of feature importance scores for your dataset
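The idea behind permutation feature importance can be sketched in plain NumPy: shuffle one column at a time and measure how much the model's score drops. This is an illustration of the general technique, not the Azure Machine Learning module itself. The helper names, the linear model, the R² metric, and the synthetic data are all assumptions, and for brevity the scores are computed on the training data rather than a held-out set.

```python
import numpy as np

def r2_score(y_true, y_pred):
    ss_res = np.sum((y_true - y_pred) ** 2)
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)
    return 1.0 - ss_res / ss_tot

def permutation_importance(predict, X, y, n_repeats=10, seed=0):
    """Mean drop in R^2 when each column is shuffled independently."""
    rng = np.random.default_rng(seed)
    baseline = r2_score(y, predict(X))
    importances = np.zeros(X.shape[1])
    for j in range(X.shape[1]):
        drops = []
        for _ in range(n_repeats):
            X_perm = X.copy()
            X_perm[:, j] = rng.permutation(X_perm[:, j])
            drops.append(baseline - r2_score(y, predict(X_perm)))
        importances[j] = np.mean(drops)
    return importances

# Synthetic data: column 0 matters most, column 1 a little, column 2 not at all.
rng = np.random.default_rng(1)
X = rng.normal(size=(500, 3))
y = 3.0 * X[:, 0] + 0.5 * X[:, 1] + 0.1 * rng.normal(size=500)

# Fit an ordinary least-squares model with an intercept term.
A = np.column_stack([X, np.ones(len(X))])
coef, *_ = np.linalg.lstsq(A, y, rcond=None)

def predict(M):
    return np.column_stack([M, np.ones(len(M))]) @ coef

scores = permutation_importance(predict, X, y)
print(scores)
```

A large drop means the model relied heavily on that feature, while a drop near zero suggests the feature could be removed.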
