Why Kaggle?

I started using Kaggle seriously a couple of months ago when I joined the SIIM-ISIC Melanoma Classification Competition.

The initial reason, I think, was that I wanted a serious way to test my Machine Learning (ML) and Deep Learning (DL) skills. At the time, I was studying for the Coursera AI4Medicine Specialization and I was intrigued (I still am) by what can be achieved by applying DL to medicine. I was also reading Eric Topol’s beautiful book, Deep Medicine, which is full of interesting ideas on what could be done.

I had opened my Kaggle account several years earlier, but I hadn’t yet done anything serious with it. Then I discovered the Melanoma Challenge, and it seemed to be a really good way to start working on a difficult task with real data.

So I started working on the competition and got caught up in the game. I had thought it would be easier.

A summary of what I have learned.

The first thing I learned to master was how to read many images efficiently, without keeping the GPU (or TPU) waiting.

In fact, in the beginning, I was trying to train one of my first models on a 2-GPU machine, and the training seemed too slow. The GPU utilization was really low, about 20%. Why?

Because I was using the Keras ImageDataGenerator, reading images from directories. Reading several discussions on Kaggle (yes, this is an important suggestion: read the discussions), I discovered that a very efficient approach is to pack the images (possibly pre-processed and resized) into files in TFRecord format. This way I was able to **bring GPU utilization into the high 90s.**

Yes, I know that things are going to improve with the preprocessing and data loading capabilities coming with TF 2.3 (see: image_dataset_from_directory), but if you need to do massive image (or data) pre-processing, you should consider packaging the results in TFRecord format.
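To make the TFRecord idea concrete, here is a minimal sketch of writing images into a TFRecord file and reading them back with tf.data. It is not the exact pipeline I used in the competition: the feature names ("image", "label"), the helper names, the 256-pixel size and the batch size are assumptions chosen for illustration.

```python
import tensorflow as tf

# Schema of one record. The feature names are illustrative assumptions.
FEATURES = {
    "image": tf.io.FixedLenFeature([], tf.string),
    "label": tf.io.FixedLenFeature([], tf.int64),
}


def write_tfrecord(path, images, labels):
    """Pack (image, label) pairs into a single TFRecord file.

    `images` is an iterable of uint8 arrays/tensors of shape (H, W, 3),
    already resized or otherwise pre-processed; `labels` are integers.
    """
    with tf.io.TFRecordWriter(path) as writer:
        for image, label in zip(images, labels):
            # Store each image as JPEG bytes to keep the file compact.
            encoded = tf.io.encode_jpeg(image).numpy()
            feature = {
                "image": tf.train.Feature(
                    bytes_list=tf.train.BytesList(value=[encoded])),
                "label": tf.train.Feature(
                    int64_list=tf.train.Int64List(value=[int(label)])),
            }
            example = tf.train.Example(
                features=tf.train.Features(feature=feature))
            writer.write(example.SerializeToString())


def parse_example(serialized, image_size=256):
    """Decode one serialized record into an (image, label) pair."""
    parsed = tf.io.parse_single_example(serialized, FEATURES)
    image = tf.io.decode_jpeg(parsed["image"], channels=3)
    # resize returns float32; pixel values stay in [0, 255].
    # Rescale here if your model expects a different range.
    image = tf.image.resize(image, [image_size, image_size])
    return image, parsed["label"]


def make_dataset(filenames, batch_size=32, shuffle_buffer=2048):
    """Build an input pipeline that reads and decodes in parallel."""
    ds = tf.data.TFRecordDataset(
        filenames, num_parallel_reads=tf.data.AUTOTUNE)
    ds = ds.map(parse_example, num_parallel_calls=tf.data.AUTOTUNE)
    ds = ds.shuffle(shuffle_buffer).batch(batch_size)
    # prefetch prepares the next batches while the accelerator is busy.
    return ds.prefetch(tf.data.AUTOTUNE)
```

The part that matters for GPU utilization is at the end: parallel reads, parallel decoding and prefetch let the CPU prepare the next batches while the GPU is still training on the current one. In practice you would split the data across several TFRecord files and pass the whole list to make_dataset.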

The second important thing is to use a modern pre-trained ConvNet.

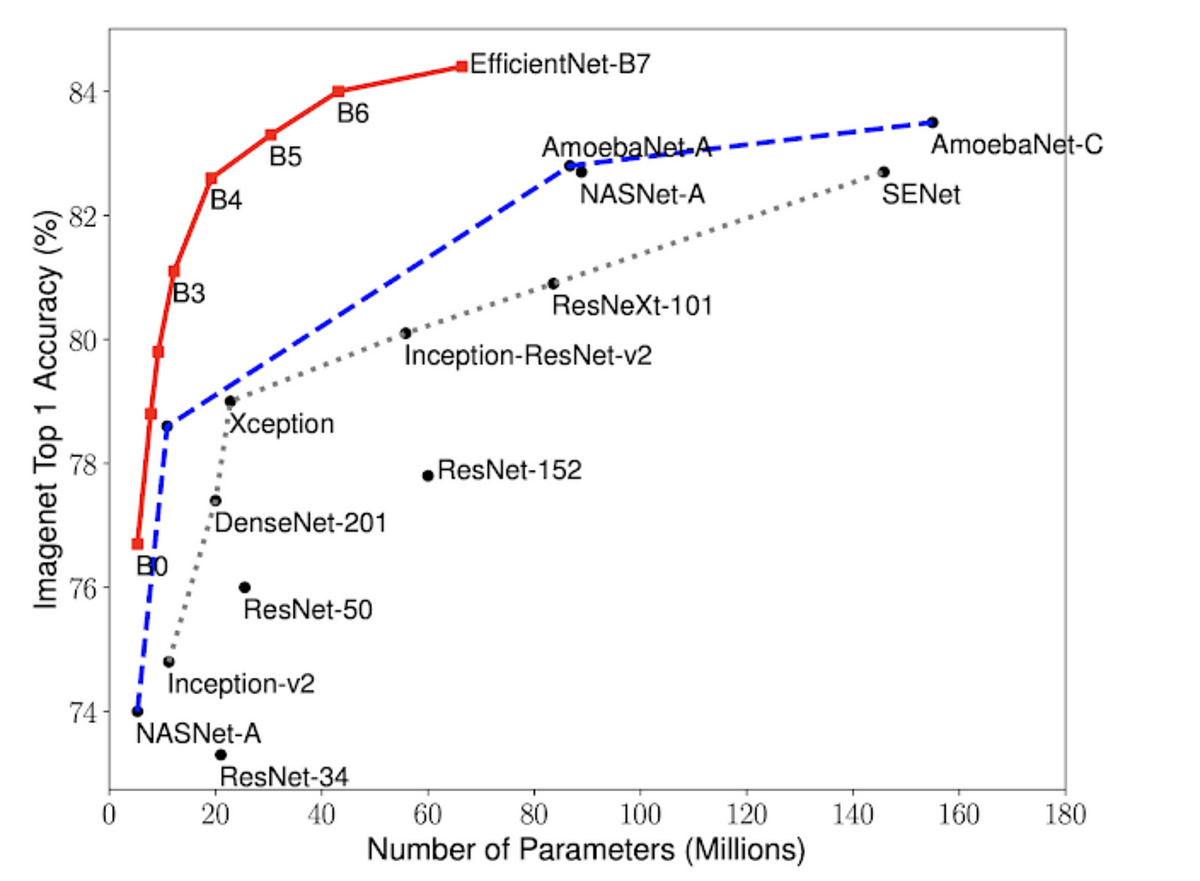

Again, reading Kaggle’s discussions, I discovered the EfficientNet family. These are convolutional networks, pre-trained on ImageNet, proposed by Google researchers in 2019. These CNNs are very efficient: you can achieve high accuracy with less computational power than older CNNs require. The increase in accuracy you can get simply by using an EfficientNet as the convolutional base (feature extractor) is surprising.
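As a rough sketch of what "using an EfficientNet as a feature extractor" can look like in Keras (TF 2.3 or later), the code below puts a small binary classification head on top of a pre-trained EfficientNetB0. The B0 variant, the image size, the dropout rate and the name build_classifier are assumptions for illustration, not the configuration of my competition models.

```python
import tensorflow as tf


def build_classifier(image_size=256, weights="imagenet", dropout=0.2):
    """Binary classifier with an EfficientNetB0 convolutional base."""
    # include_top=False drops the original ImageNet classification head;
    # pooling="avg" turns the last feature map into one vector per image.
    base = tf.keras.applications.EfficientNetB0(
        include_top=False,
        weights=weights,
        input_shape=(image_size, image_size, 3),
        pooling="avg",
    )

    # The Keras EfficientNet models include their own input rescaling,
    # so they expect pixel values in the [0, 255] range.
    inputs = tf.keras.Input(shape=(image_size, image_size, 3))
    x = base(inputs)
    x = tf.keras.layers.Dropout(dropout)(x)
    # One sigmoid unit: probability of the positive class.
    outputs = tf.keras.layers.Dense(1, activation="sigmoid")(x)

    model = tf.keras.Model(inputs, outputs)
    model.compile(
        optimizer="adam",
        loss="binary_crossentropy",
        metrics=[tf.keras.metrics.AUC(name="auc")],
    )
    return model
```

Calling build_classifier() downloads the ImageNet weights on first use. Passing weights=None builds the same architecture with random initialization, which is handy for a quick check that everything fits together.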
