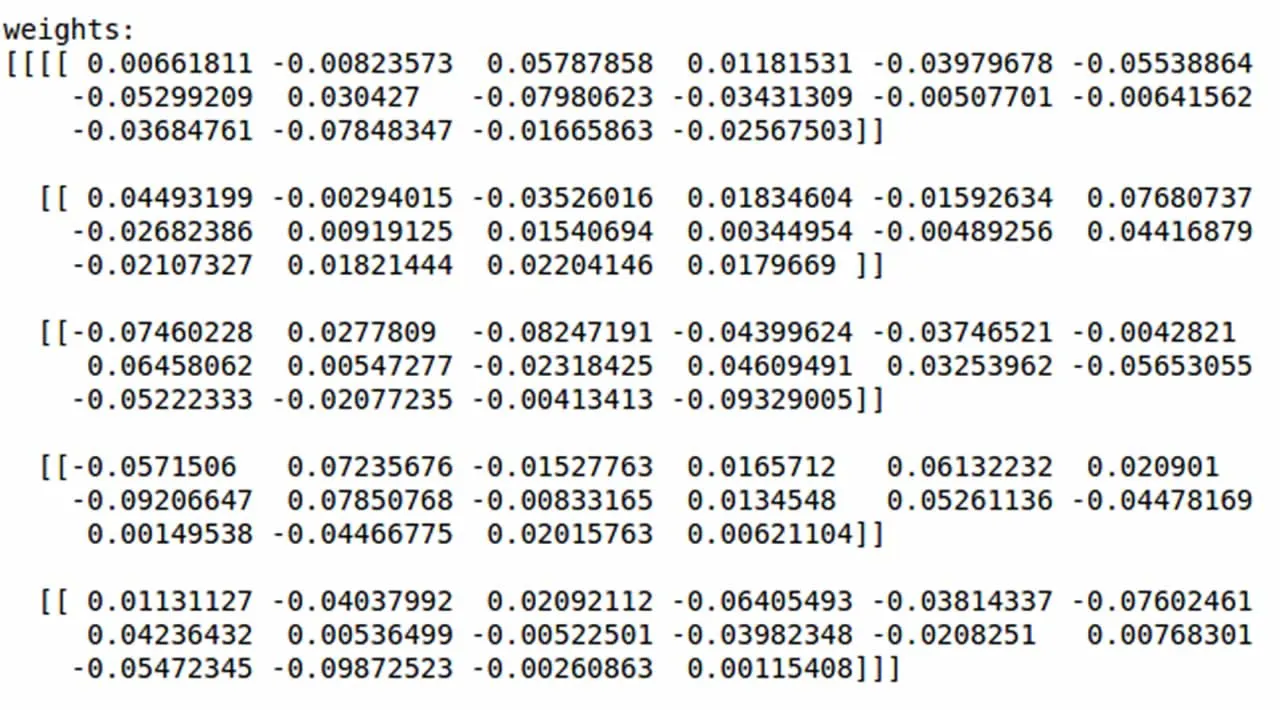

Initialising the parameters with the right values is one of the most important conditions for getting accurate results from a neural network.

Initialising all weights to zero

If all the weights are initialised to zero, every hidden unit in layer l receives the same derivative of the loss function with respect to its weights in W[l], where W[l] denotes the weights in layer l of the neural network. The units therefore get identical updates and still hold identical weights after every subsequent iteration. The hidden units stay symmetric for all n iterations: each unit in a layer computes the same function, so adding more units adds no expressive power. In other words, setting the weights to zero makes the network no better than a linear model, hence we should not initialise the weights with zeroes.
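The symmetry argument above can be checked numerically. The following is a minimal sketch in plain Python, not taken from the original article: the tiny 2-3-1 network, the XOR data, the squared-error loss, the sigmoid activations and the names `train`, `XOR` and `N_HIDDEN` are all assumptions chosen for illustration. It trains the same network twice, once from all-zero weights and once from small random weights, and prints the hidden-layer weights of each.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train(W1, b1, W2, b2, data, lr=0.5, epochs=200):
    """Plain SGD on a tiny net: inputs -> sigmoid hidden layer -> sigmoid output.

    W1[j] holds the incoming weights of hidden unit j, W2[j] its outgoing weight.
    The loss is 0.5 * (out - y) ** 2 (an illustrative choice).
    """
    n_hidden = len(W1)
    for _ in range(epochs):
        for x, y in data:
            # Forward pass
            h = [sigmoid(sum(w * xi for w, xi in zip(W1[j], x)) + b1[j])
                 for j in range(n_hidden)]
            out = sigmoid(sum(W2[j] * h[j] for j in range(n_hidden)) + b2)

            # Backward pass: gradients w.r.t. the pre-activations
            d_out = (out - y) * out * (1.0 - out)
            d_h = [d_out * W2[j] * h[j] * (1.0 - h[j]) for j in range(n_hidden)]

            # Gradient-descent update
            for j in range(n_hidden):
                W2[j] -= lr * d_out * h[j]
                b1[j] -= lr * d_h[j]
                for i in range(len(x)):
                    W1[j][i] -= lr * d_h[j] * x[i]
            b2 -= lr * d_out
    return W1, b1, W2, b2


XOR = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0),
       ([1.0, 0.0], 1.0), ([1.0, 1.0], 0.0)]
N_HIDDEN = 3

# Case 1: every weight and bias starts at zero.
W1_zero, b1_zero, W2_zero, b2_zero = train(
    [[0.0, 0.0] for _ in range(N_HIDDEN)],
    [0.0] * N_HIDDEN,
    [0.0] * N_HIDDEN,
    0.0,
    XOR,
)

# Case 2: weights start at small random values (biases still zero).
random.seed(0)
W1_rand, b1_rand, W2_rand, b2_rand = train(
    [[random.uniform(-1.0, 1.0) for _ in range(2)] for _ in range(N_HIDDEN)],
    [0.0] * N_HIDDEN,
    [random.uniform(-1.0, 1.0) for _ in range(N_HIDDEN)],
    0.0,
    XOR,
)

print("zero init, hidden weights:  ", W1_zero)
print("random init, hidden weights:", W1_rand)
```

With zero initialisation the weights do move away from zero, but all three rows of `W1_zero` remain identical to one another, so the three hidden units behave as a single unit. With random initialisation the rows differ and each unit can learn a different feature. Sigmoid is used for the hidden layer here so that the hidden activations are non-zero at the start; this is only a convenience of the sketch.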

#neural-networks #weight-initialization #pytorch #cnn
