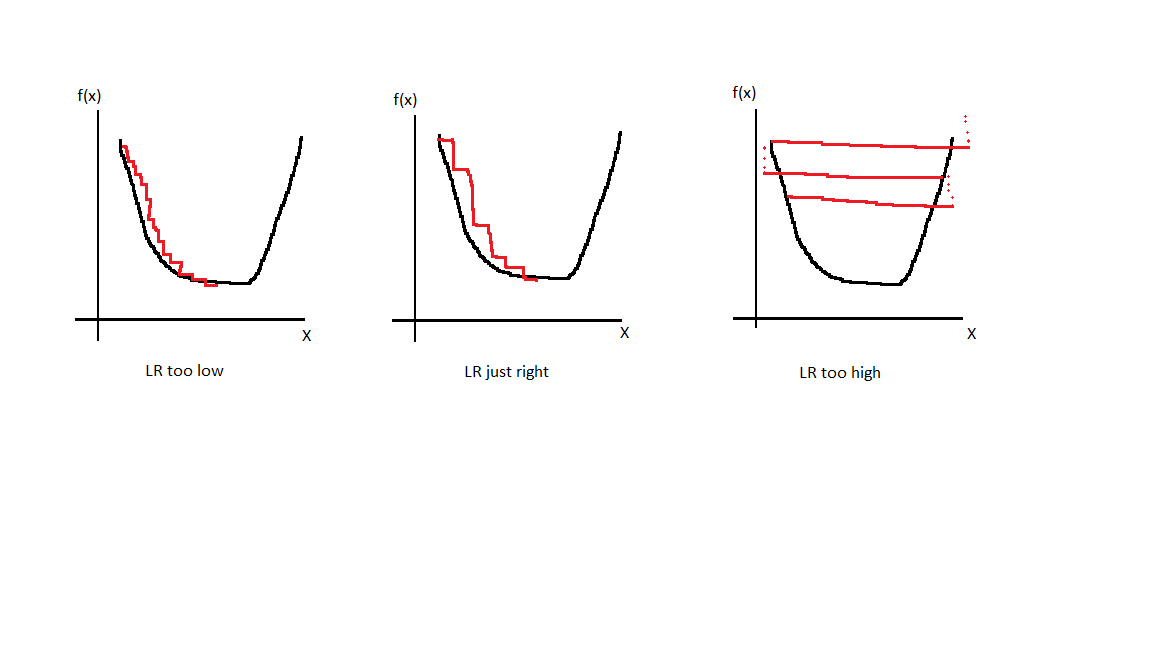

This episode: Learning rate (LR)

LR before fastai

The common approach to finding the best LR was to fully train a model, until the desired metric was reached, with different optimizers at different LRs, and then pick the optimizer and LR combination that worked best. This is a reasonable technique, although a computationally expensive one.
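The brute-force search described above can be sketched in pure Python. This is a minimal illustration under stated assumptions: a hypothetical toy linear-regression dataset, full-batch gradient descent, and two stand-in "optimizers" (plain gradient descent and gradient descent with momentum). Every (optimizer, LR) pair trains a complete model, which is where the cost comes from.

```python
import random

def make_data(n=100, seed=0):
    # Hypothetical toy dataset: y = 3x + 2 plus a little Gaussian noise
    rng = random.Random(seed)
    xs = [rng.uniform(-1, 1) for _ in range(n)]
    ys = [3 * x + 2 + rng.gauss(0, 0.1) for x in xs]
    return xs, ys

def mse(w, b, xs, ys):
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

def train(lr, momentum, xs, ys, epochs=50):
    """Full-batch gradient descent on y = w*x + b; momentum=0.0 gives plain GD."""
    w = b = 0.0
    vw = vb = 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b
        gw = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        gb = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        # Heavy-ball momentum: accumulate a running velocity of gradients
        vw = momentum * vw + gw
        vb = momentum * vb + gb
        w -= lr * vw
        b -= lr * vb
    return mse(w, b, xs, ys)

def grid_search(lrs, optimizers, xs, ys):
    """Fully train one model per (optimizer, LR) pair and keep the lowest final loss."""
    results = {}
    for name, momentum in optimizers.items():
        for lr in lrs:
            results[(name, lr)] = train(lr, momentum, xs, ys)
    best = min(results, key=results.get)
    return best, results

xs, ys = make_data()
best, results = grid_search(
    lrs=[0.001, 0.01, 0.1, 0.5],
    optimizers={"gd": 0.0, "momentum": 0.9},
    xs=xs,
    ys=ys,
)
print("best (optimizer, lr):", best, "final loss:", results[best])
```

Even in this tiny example, the cost grows with (number of optimizers) × (number of LRs) × (one full training run each).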

Note: Since I was introduced to fastai early in my deep learning career, I do not know much about how things were done without or before fastai, so please let me know if this section is inaccurate, and take it with a grain of salt.

The fastai way

The fastai approach to LRs is influenced by Leslie Smith’s paper [1]. There are three main components: finding an optimal LR to train with (explained in the LR find section), reducing the LR as training progresses (explained in the LR annealing section), and a few caveats for transfer learning (explained in the discriminative LR section) and one-cycle training (part of LR annealing).
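To make the first two components more concrete, here is a minimal pure-Python sketch, assuming a toy one-parameter quadratic loss in place of a real model and data. `lr_range_test` raises the LR exponentially after every step and records the loss (the idea behind an LR finder), `steepest_lr` picks the LR whose update lowered the loss the most (one possible heuristic, assumed here), and `one_cycle_lr` warms the LR up and then anneals it along a cosine curve. All three helpers and their parameters (`stop_factor`, `pct_start`, `div`, `div_final`) are hypothetical illustrations, not fastai's own API; a real LR finder would typically also use mini-batches, smooth the loss, and restore the model weights afterwards.

```python
import math

def lr_range_test(loss_and_grad, params, lr_min=1e-5, lr_max=10.0,
                  steps=100, stop_factor=4.0):
    """Raise the LR exponentially after every step and record (lr, loss) pairs.

    Hypothetical simplified sketch of the LR range test idea.
    """
    ratio = (lr_max / lr_min) ** (1 / (steps - 1))
    history = []
    best_loss = float("inf")
    lr = lr_min
    for _ in range(steps):
        loss, grads = loss_and_grad(params)
        history.append((lr, loss))
        best_loss = min(best_loss, loss)
        # Stop early once the loss blows up relative to the best loss seen so far
        if math.isnan(loss) or loss > stop_factor * best_loss:
            break
        # Plain gradient-descent step at the current LR
        params = [p - lr * g for p, g in zip(params, grads)]
        lr *= ratio
    return history

def steepest_lr(history):
    """Hypothetical heuristic: return the LR whose update lowered the loss the most."""
    drops = [(history[i][1] - history[i + 1][1], history[i][0])
             for i in range(len(history) - 1)]
    return max(drops)[1]

def one_cycle_lr(step, total_steps, lr_max, pct_start=0.25, div=25.0, div_final=1e5):
    """Cosine warm-up from lr_max/div to lr_max, then cosine annealing to lr_max/div_final."""
    warm = max(1, int(total_steps * pct_start))
    lr_start, lr_end = lr_max / div, lr_max / div_final
    if step < warm:
        t = step / warm
        return lr_start + (lr_max - lr_start) * (1 - math.cos(math.pi * t)) / 2
    t = (step - warm) / max(1, total_steps - 1 - warm)
    return lr_end + (lr_max - lr_end) * (1 + math.cos(math.pi * t)) / 2

# Toy problem (assumption): minimise (p - 3)^2 for a single parameter p
def quad_loss_and_grad(params):
    p = params[0]
    return (p - 3) ** 2, [2 * (p - 3)]

history = lr_range_test(quad_loss_and_grad, [0.0])
lr_max = steepest_lr(history)
schedule = [one_cycle_lr(s, 100, lr_max) for s in range(100)]
print(f"suggested lr_max ~ {lr_max:.3g}, peak scheduled lr = {max(schedule):.3g}")
```

In practice the LR is usually chosen by looking at a plot of loss against LR rather than trusting a single heuristic, and the warm-up fraction and divisors above are illustrative choices only.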
