History

Airflow was born out of Airbnb’s need to manage large amounts of data used across a variety of jobs. To speed up the end-to-end process, Airbnb created Airflow as a way to quickly author, iterate on, and monitor batch data pipelines. Airflow later joined the Apache Software Foundation.

The Platform

Apache Airflow is a platform for programmatically authoring, scheduling, and monitoring workflows. It is fully open source and has wide community support.

Airflow as an ETL Tool

Airflow is written in Python, so a workflow can interact with any third-party Python API. It is built around the ETL (extract, transform, load) pattern, with the philosophy that ETL steps are best expressed as code. As a result, Airflow is considerably more customisable than most other ETL tools, which tend to be user-interface heavy.
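The idea of ETL steps expressed as code can be illustrated without installing Airflow. The sketch below is plain Python (3.9+ for `graphlib`) and is not Airflow's API: the functions `extract`, `transform`, `load` and the helper `run_pipeline` are hypothetical names, and the data is made up. Each step is an ordinary function, the dependencies between steps are declared as a graph, and the steps run in dependency order, which is roughly what an orchestrator does with tasks.

```python
from graphlib import TopologicalSorter


# Hypothetical ETL steps; each receives the results of earlier steps.
def extract(results):
    # Made-up rows standing in for data pulled from a source system or API.
    return [{"city": "Paris", "temp_c": 20}, {"city": "Oslo", "temp_c": 10}]


def transform(results):
    # Convert Celsius to Fahrenheit using the output of the extract step.
    return [
        {"city": row["city"], "temp_f": row["temp_c"] * 9 / 5 + 32}
        for row in results["extract"]
    ]


def load(results):
    # Stand-in for writing to a warehouse: return the rows keyed by city.
    return {row["city"]: row["temp_f"] for row in results["transform"]}


TASKS = {"extract": extract, "transform": transform, "load": load}

# Each task maps to the set of tasks it depends on (its upstream tasks).
DEPENDENCIES = {"extract": set(), "transform": {"extract"}, "load": {"transform"}}


def run_pipeline(tasks, dependencies):
    """Run every task once, only after all of its upstream tasks have run."""
    results = {}
    # static_order() yields a task only after all of its dependencies.
    for name in TopologicalSorter(dependencies).static_order():
        results[name] = tasks[name](results)
    return results


if __name__ == "__main__":
    output = run_pipeline(TASKS, DEPENDENCIES)
    print(output["load"])
```

In a real Airflow pipeline each function would become a task, and the scheduler, rather than a simple loop, would decide when and where each task runs.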

Apache Airflow is suited to tasks ranging from pinging specific API endpoints to transforming data and monitoring.

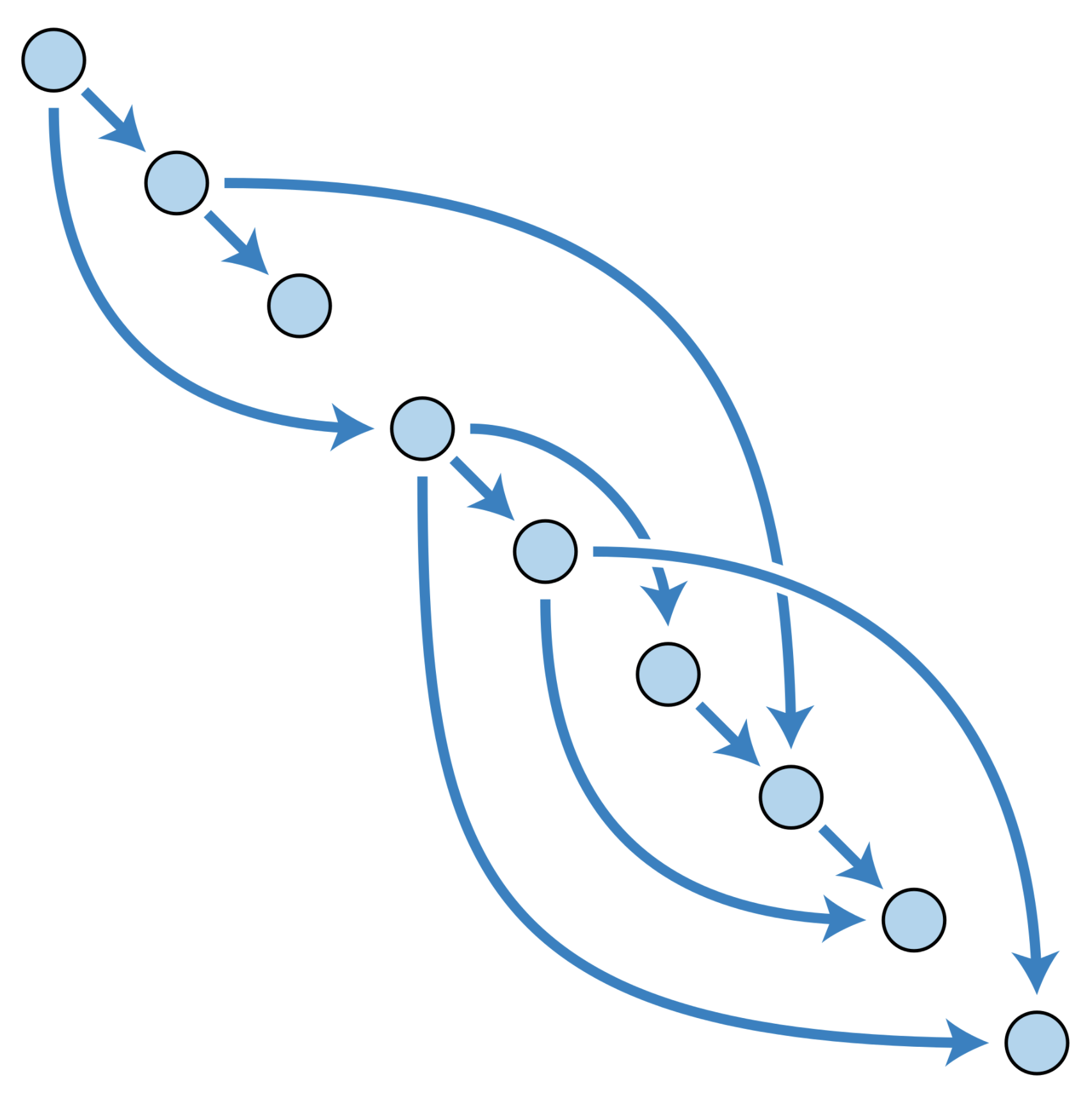

Directed Acyclic Graph
