Different approaches to solving linear regression models

There are many different methods we can use to fit a linear regression model, that is, to find the parameter values that minimize its cost. Here we will discuss the most common of them:

- Gradient Descent

- Least Squares Method / Normal Equation Method

- Adam Optimizer

- Singular Value Decomposition (SVD)
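As a rough preview of how two of the closed-form approaches relate, this sketch fits the same made-up data with the normal equation, $\theta = (X^\top X)^{-1} X^\top y$, and with an SVD-based pseudo-inverse in NumPy. The function names `fit_normal_equation` and `fit_svd` and the toy data are illustrative assumptions, not the article's code. Gradient descent and Adam are iterative methods that would instead approach the least-squares solution step by step.

```python
import numpy as np

def fit_normal_equation(X, y):
    """Solve (X^T X) theta = X^T y, the normal equation.
    np.linalg.solve avoids forming the inverse explicitly."""
    return np.linalg.solve(X.T @ X, X.T @ y)

def fit_svd(X, y):
    """Least squares via the SVD X = U diag(s) V^T: theta = V diag(1/s) U^T y.
    Assumes every singular value is nonzero (X has full column rank)."""
    U, s, Vt = np.linalg.svd(X, full_matrices=False)
    return Vt.T @ ((U.T @ y) / s)

# Hypothetical toy data lying exactly on the line y = 1 + 2x.
x = np.array([0.0, 1.0, 2.0, 3.0, 4.0])
y = 1.0 + 2.0 * x
X = np.column_stack([np.ones_like(x), x])  # bias column of ones plus the feature

theta_ne = fit_normal_equation(X, y)
theta_svd = fit_svd(X, y)
print(theta_ne, theta_svd)
```

Both should recover approximately (1, 2) on this data. If X were rank-deficient, small singular values would have to be dropped rather than divided by, which is what the `rcond` cutoff in `np.linalg.pinv` and `np.linalg.lstsq` is for.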

Okay, so let’s begin…

Gradient Descent

One of the most common and easiest methods for beginners to solve linear regression problems is gradient descent.

How Gradient Descent works

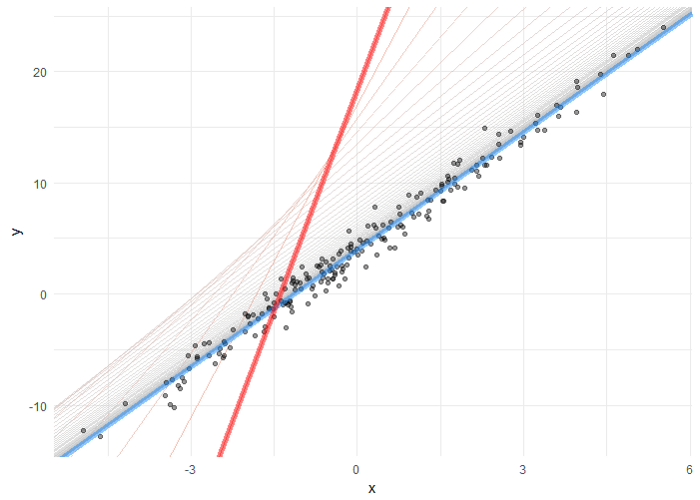

Now, let's suppose we have our data plotted out in the form of a scatter graph, and our model (the hypothesis) makes a prediction for each point. A cost function measures how far those predictions are from the actual values: a good fit has a low cost, while predictions far from the data have a high cost. So, in order to minimize that cost (error), we apply gradient descent.
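To make "cost" concrete, here is a minimal Python sketch of one common choice, the half mean squared error $J(\theta) = \frac{1}{2m}\sum_{i=1}^{m}(h_\theta(x^{(i)}) - y^{(i)})^2$, for a straight-line hypothesis $h_\theta(x) = \theta_0 + \theta_1 x$. The article does not say which cost function it uses, so this choice, the hypothetical `cost` function name, and the toy data are assumptions.

```python
import numpy as np

def cost(theta, X, y):
    """Half mean squared error: J(theta) = 1/(2m) * sum((X @ theta - y)**2)."""
    m = len(y)
    residuals = X @ theta - y          # prediction error for each data point
    return (residuals @ residuals) / (2 * m)

# Hypothetical data lying on the line y = 1 + 2x.
x = np.array([0.0, 1.0, 2.0, 3.0])
y = np.array([1.0, 3.0, 5.0, 7.0])
X = np.column_stack([np.ones_like(x), x])  # bias column of ones plus the feature

print(cost(np.array([1.0, 2.0]), X, y))  # 0.0  (predictions match the data)
print(cost(np.array([0.0, 0.0]), X, y))  # 10.5 (predicts 0 everywhere)
```

Parameters that match the data exactly give a cost of 0, while θ = (0, 0) is far from the data and gives a cost of (1 + 9 + 25 + 49) / 8 = 10.5.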

Gradient descent then updates the parameters of our hypothesis step by step, moving it toward the minimum of the cost function, where the cost is lowest. For linear regression with a squared-error cost, the cost function is convex, so this minimum is the global minimum. To do this, we have to manually set the value of **alpha**, the learning rate, which scales how far the parameters move on each step. If alpha is large, gradient descent takes big steps, and if it is too large it can overshoot the minimum and fail to converge. If alpha is small, our hypothesis converges slowly, through small baby steps.

Hypothesis converging towards a global minimum. Image from Medium.

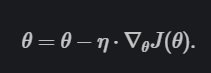
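The loop below is a minimal batch gradient descent sketch in Python (NumPy) for a straight-line fit, assuming the half mean squared error cost. The hypothetical `gradient_descent` function, the toy data, the three alpha values and the iteration count are illustrative assumptions; the point is to run the same number of steps with different learning rates and compare.

```python
import numpy as np

def gradient_descent(X, y, alpha, n_iters):
    """Batch gradient descent on J(theta) = 1/(2m) * ||X @ theta - y||^2."""
    m, n = X.shape
    theta = np.zeros(n)                        # start from all-zero parameters
    for _ in range(n_iters):
        gradient = X.T @ (X @ theta - y) / m   # partial derivative of J w.r.t. each theta_j
        theta = theta - alpha * gradient       # step against the gradient, scaled by alpha
    return theta

# Hypothetical data lying on the line y = 1 + 2x.
x = np.array([0.0, 1.0, 2.0, 3.0])
y = 1.0 + 2.0 * x
X = np.column_stack([np.ones_like(x), x])     # bias column of ones plus the feature

for alpha in (0.001, 0.1, 0.5):
    print(alpha, gradient_descent(X, y, alpha, n_iters=100))
```

With these assumed settings, alpha = 0.1 ends very close to the true parameters (1, 2), alpha = 0.001 is still far from them after 100 steps, and alpha = 0.5 is too large for this data, so the parameters overshoot back and forth and blow up instead of converging.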

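The update rule for gradient descent, applied simultaneously to every parameter $\theta_j$ on each iteration, is $\theta_j := \theta_j - \alpha \frac{\partial J(\theta)}{\partial \theta_j}$, where $J(\theta)$ is the cost function and $\alpha$ is the learning rate.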
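Assuming the hypothesis $h_\theta(x) = \theta_0 x_0 + \theta_1 x_1 + \dots + \theta_n x_n$ with $x_0 = 1$, and the half mean squared error cost over $m$ training examples (the article does not state its cost function here, so this is an assumption), the partial derivative in the update rule works out as follows, using $\partial h_\theta(x) / \partial \theta_j = x_j$:

$$
\begin{aligned}
J(\theta) &= \frac{1}{2m} \sum_{i=1}^{m} \left( h_\theta(x^{(i)}) - y^{(i)} \right)^2 \\
\frac{\partial J(\theta)}{\partial \theta_j} &= \frac{1}{m} \sum_{i=1}^{m} \left( h_\theta(x^{(i)}) - y^{(i)} \right) x_j^{(i)} \\
\theta_j &:= \theta_j - \frac{\alpha}{m} \sum_{i=1}^{m} \left( h_\theta(x^{(i)}) - y^{(i)} \right) x_j^{(i)}
\end{aligned}
$$

The factor $\frac{1}{2}$ in the cost cancels the 2 produced by differentiating the square, which is why the gradient has a plain $\frac{1}{m}$ in front.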

Tags: 2020 Sep tutorials, Overviews, Gradient Descent, Linear Regression, NumPy, Python, Statistics, SVD