It has become a commonplace that the world’s most valuable resource is no longer oil, but data. One of the big challenges today is integrating disparate data sources that live in many different places. If you want to build big data analytics solutions on Kubernetes, that’s where SQL Server Big Data Clusters (BDC) comes in.

SQL Server Big Data Clusters (BDC) is a cloud-native, platform-agnostic, open data platform for analytics at any scale, orchestrated by Kubernetes. It unites SQL Server with Apache Spark to deliver data analytics and machine learning in a single, easy-to-deploy package.

You may be thinking you misread that. You didn’t. With Big Data Clusters you run SQL Server in Kubernetes, alongside Apache Spark, to get the best of both platforms. You’re not dreaming, and yes, this opens up a huge range of opportunities for developers. Read on!

SQL Server on Kubernetes. With Apache Spark. And more.

Kubernetes is the most popular open-source container orchestrator today; it gained traction remarkably quickly and keeps growing. The community makes it even stronger: the wider cloud-native ecosystem helps address challenges around security, compliance, networking, monitoring, and more when working with Kubernetes.

Many developers run their workloads on Kubernetes using a microservices-based architecture or, as it might better be described today, an architecture of correctly sized services, since some customers have found overloaded microservices increasingly hard to maintain in enterprise environments. For them, the open question is how to consume big data from different data sources effectively and efficiently.

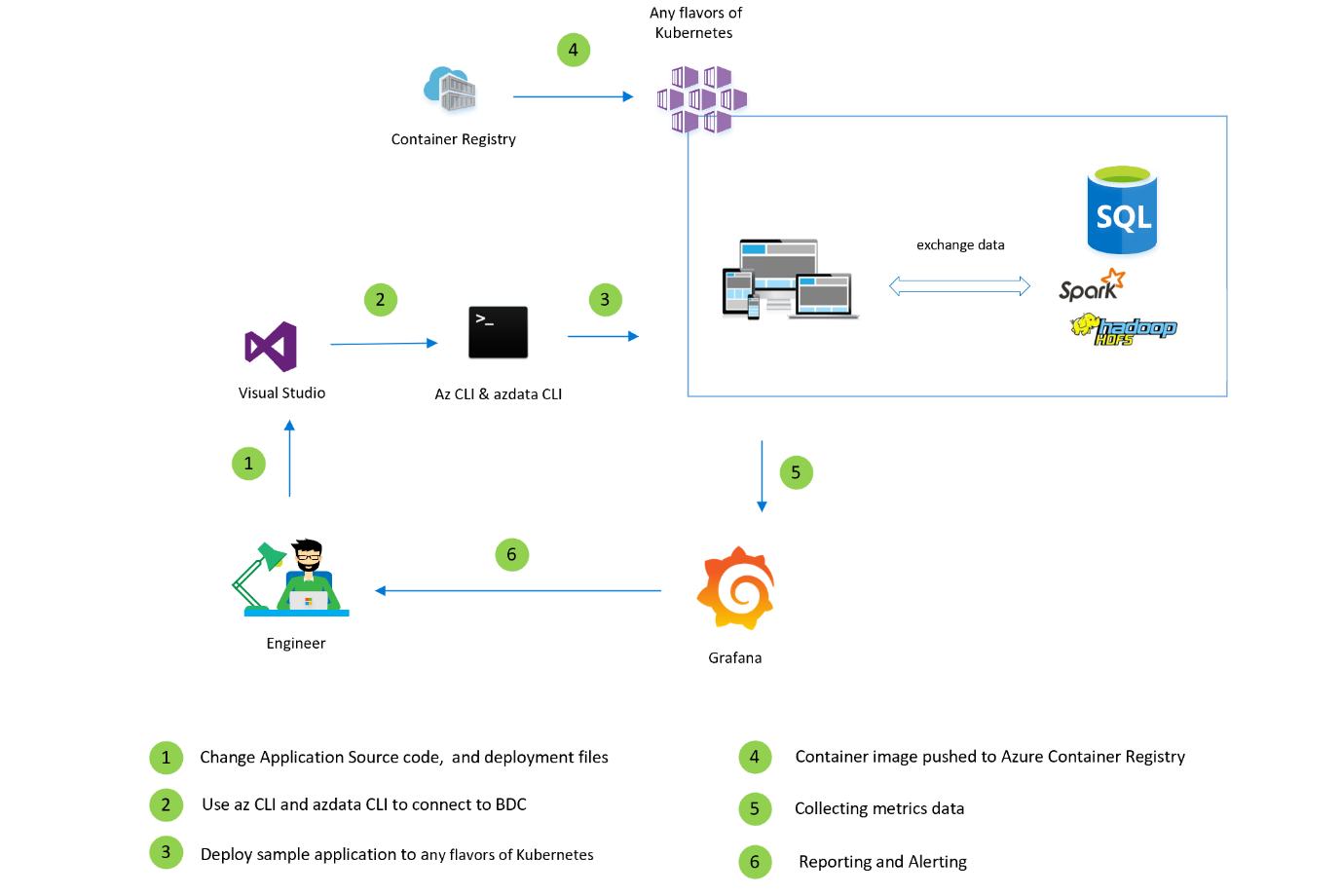

This challenge can be addressed by deploying SQL Server Big Data Clusters (BDC) on various flavors of Kubernetes, for example Azure Kubernetes Service (AKS) or Red Hat OpenShift. Once you have deployed BDC in your Kubernetes cluster, you can work with your high-volume big data using T-SQL or Spark. Your application will be able to consume data from external sources such as SQL Server, Oracle, Teradata, and MongoDB, as well as any big data stored in HDFS. And since your SQL Server instance sits inside the cluster, there are far fewer concerns around latency and throughput. BDC is not only a big data analytics platform but also an integrated AI and machine learning platform: it enables AI and machine learning tasks on the data stored in HDFS and SQL Server.

Your modern cloud-native application not only runs platform-agnostically and takes advantage of the natural benefits of Kubernetes (high availability, scalability, and the full support of its ecosystem), but also gains the ability to work on big data analytics and consume machine learning models.

Developing applications with BDC

What’s in it for a developer, you may be asking yourself. This post and the ones that follow will answer that question. To start, let’s focus on how to deploy your very first HelloWorld cloud-native application in BDC. We’ll begin by creating a BDC cluster and getting access to it, then explore the following key tasks in the same blog series:

- Develop and deploy the application: the azdata utility can create an app skeleton to develop from, which also makes it easier to deploy the application in BDC.

- Run and test the application: the azdata utility provides commands to help you run and test the application in BDC.

- Consume the application: you’ll be able to obtain an endpoint with the azdata utility, along with a JWT access token, and since Swagger is integrated, you’ll be able to test and run the application with Swagger UI or Postman.

- Monitor the application: with Grafana integrated into BDC, you’ll be able to monitor your applications through various metrics.
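
To make the "consume" step a little more concrete, here is a minimal Python sketch of what calling a deployed app with a JWT access token might look like. It only builds the HTTP request and does not send it. The endpoint URL, the app name and version in the path, the POST method, and the `name` parameter are all hypothetical placeholders rather than values taken from BDC; use the endpoint and token you actually obtain in your own cluster.

```python
import json
import urllib.request


def build_app_request(endpoint: str, jwt_token: str, params: dict) -> urllib.request.Request:
    """Build (but do not send) an authenticated JSON request for a deployed app."""
    body = json.dumps(params).encode("utf-8")
    return urllib.request.Request(
        url=endpoint,
        data=body,
        method="POST",  # assumption: the app accepts a JSON POST
        headers={
            # The JWT access token travels as a standard Bearer token.
            "Authorization": f"Bearer {jwt_token}",
            "Content-Type": "application/json",
        },
    )


# Hypothetical endpoint, token, and input parameter, for illustration only.
request = build_app_request(
    "https://bdc.example.com:30080/api/app/hello-world/v1",
    "<jwt-access-token>",
    {"name": "world"},
)
print(request.get_method(), request.full_url)
```

Passing the resulting object to `urllib.request.urlopen` would send it; Swagger UI and Postman perform the same kind of call interactively, with the token supplied in the `Authorization` header.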
