I recently came across an excellent article from Signal introducing a new feature that lets users automatically blur faces, which is incredibly useful at a time when protestors and demonstrators need to communicate while protecting their identities.

In the article, Signal also hinted at the technologies they’re using, which are strictly platform-level libraries. For iOS, my guess is that they used Vision, Apple’s framework for a variety of image and video processing tasks.

In this article, I’ll use Apple’s native library to create an iOS application that pixelates faces in any given image.

Overview:

- Why the built-in/on-device solution
- Create Face Detection helpers
- Build the iOS application
- Results
- Conclusion

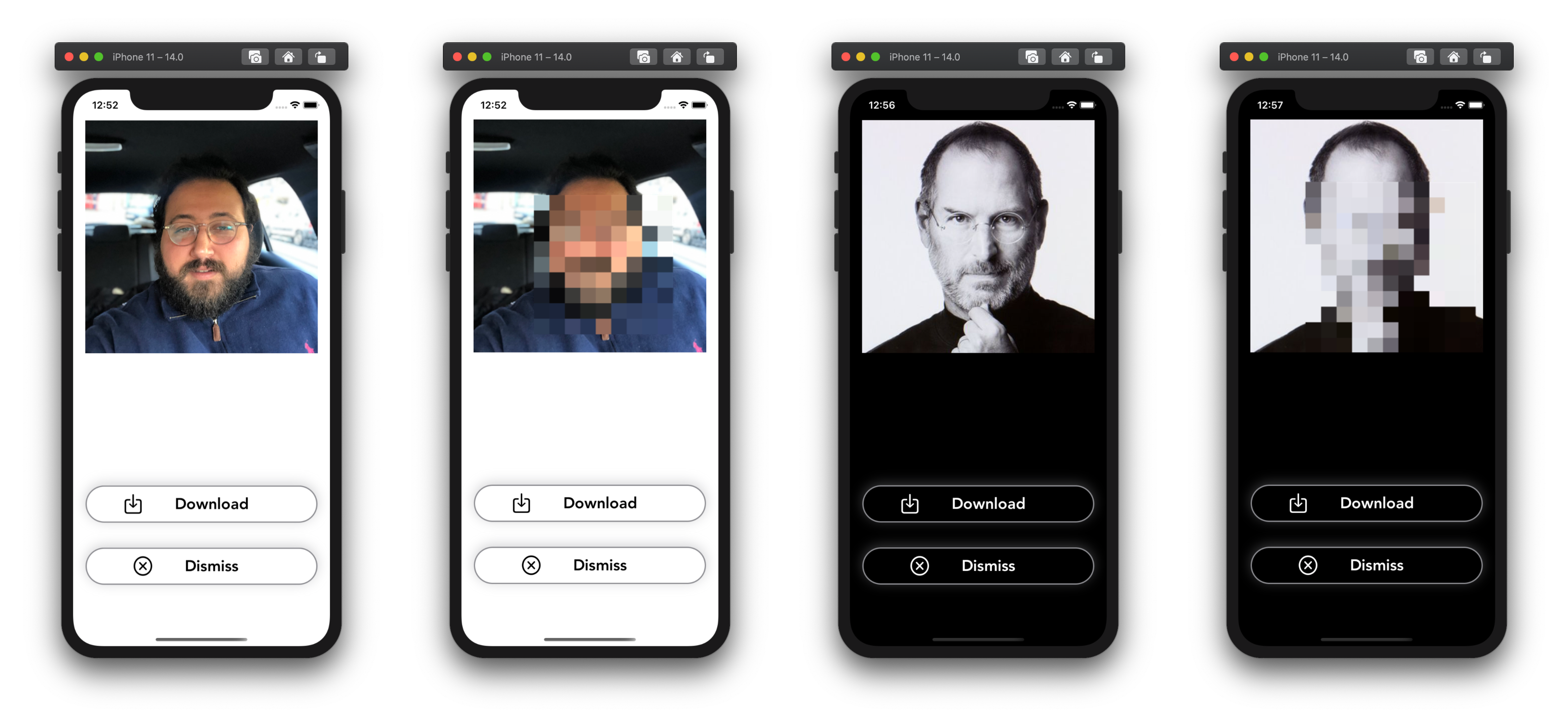

This is a look at the final result:

I have included code in this article where it’s most instructive. Full code and data can be found on my GitHub page. Let’s get started.

Why the built-in/on-device solution

- **On-device:** The strongest argument for on-device solutions is latency: the whole process runs on the phone and doesn’t require communication with an external/remote API. There is also the argument of privacy. Since everything happens on-device, no data is transferred from the phone to a remote server. A cloud-based API can be risky because a third party could intercept the communication, or the service provider could store images for purposes other than the advertised intent.
- **Built-in:** There are many ways to use or create on-device models that can be, in some cases, better than Apple’s built-in solution. Google’s ML Kit provides an on-device solution on iOS for Face Detection (in my experience, similar to Apple’s in terms of accuracy), which is free and has more features than Apple’s solution. But for our use case, we just need to detect faces and draw bounding boxes. You can also build your own model using Turi Create’s object detection API or any other framework of your choice. Either way, you still need a huge amount of diverse and annotated data to come even close to Apple’s or Google’s accuracy and performance.
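To make "detect faces and draw bounding boxes" concrete, here is a minimal sketch of what the on-device call could look like with Vision. The function names `pixelRect(fromNormalized:imageWidth:imageHeight:)` and `detectFaceRects(in:)` are hypothetical and are not taken from the project's repository. The sketch assumes a `CGImage` input and ignores image orientation. Vision reports each face as a normalized rectangle with its origin at the lower-left corner, so the helper converts it to pixel coordinates with a top-left origin before any drawing happens.

```swift
import Foundation
#if canImport(Vision)
import Vision
#endif

/// Converts a normalized bounding box (values in 0...1, origin at the
/// lower-left corner, as Vision reports it) into pixel coordinates with a
/// top-left origin, which is what UIKit drawing code expects.
func pixelRect(fromNormalized box: CGRect, imageWidth: Int, imageHeight: Int) -> CGRect {
    let width = CGFloat(imageWidth)
    let height = CGFloat(imageHeight)
    return CGRect(
        x: box.origin.x * width,
        // Flip the y-axis: Vision measures from the bottom edge.
        y: (1 - box.origin.y - box.size.height) * height,
        width: box.size.width * width,
        height: box.size.height * height
    )
}

#if canImport(Vision)
/// Runs Vision's built-in face detector synchronously and returns one
/// pixel-space rectangle per detected face. Orientation handling is omitted
/// for brevity (an assumption of this sketch). Call this off the main thread.
func detectFaceRects(in image: CGImage) throws -> [CGRect] {
    let request = VNDetectFaceRectanglesRequest()
    let handler = VNImageRequestHandler(cgImage: image, options: [:])
    try handler.perform([request])
    let faces = (request.results as? [VNFaceObservation]) ?? []
    return faces.map {
        pixelRect(fromNormalized: $0.boundingBox,
                  imageWidth: image.width,
                  imageHeight: image.height)
    }
}
#endif
```

As a quick check of the conversion, a normalized box at (0.25, 0.5) with size 0.5 × 0.25 in a 400 × 200 image maps to a pixel rectangle at (100, 50) with size 200 × 50. Nothing in this path leaves the device, which is the privacy point made above.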

Apple has been active in providing iOS developers with powerful APIs centered on computer vision and other AI disciplines (e.g., NLP). They have continuously improved them by trying to represent the complex spectrum of use cases, **from gender differences to racial diversity**. I remember the first version of the Face Detection API being very bad at detecting darker-skinned faces. They have since improved it, but no system is perfect, and detection is still not 100% accurate.
