Do you have the knowledge and skills to design a mobile gaming analytics platform that collects, stores, and analyzes large amounts of bulk and real-time data?

Well, after reading this article, you will.

I aim to take you from zero to hero in Google Cloud Platform (GCP) in just one article. I will show you how to:

- Get started with a GCP account for free

- Reduce costs in your GCP infrastructure

- Organize your resources

- Automate the creation and configuration of your resources

- Manage operations: logging, monitoring, tracing, and so on.

- Store your data

- Deploy your applications and services

- Create networks in GCP and connect them with your on-premise networks

- Work with Big Data, AI, and Machine Learning

- Secure your resources

Once I have explained all the topics in this list, I will share with you a solution to the system I described.

If you do not understand some parts of it, you can go back to the relevant sections. And if that is not enough, visit the links to the documentation that I have provided.

Are you up for a challenge? I have selected a few questions from old GCP Professional Certification exams. They will test your understanding of the concepts explained in this article.

I recommend trying to solve both the design and the questions on your own, going back to the guide if necessary. Once you have an answer, compare it to the proposed solution.

Try to go beyond what you are reading and ask yourself what would happen if requirement X changed:

- Batch vs streaming data

- Regional vs global solution

- A small number of users vs huge volume of users

- Latency is not a problem vs real-time applications

And any other scenarios you can think of.

At the end of the day, you are not paid just for what you know but for your thought process and the decisions you make. That is why it is vitally important that you exercise this skill.

At the end of the article, I’ll provide more resources and next steps if you want to continue learning about GCP.

How to get started with Google Cloud Platform for free

GCP currently offers a 3-month free trial with $300 (USD) of free credit. You can use it to get started, play around with GCP, and run experiments to decide if it is the right option for you.

You will NOT be charged at the end of your trial. You will be notified and your services will stop running unless you decide to upgrade your plan.

I strongly recommend using this trial to practice. To learn, you have to try things on your own, face problems, break things, and fix them. It doesn’t matter how good this guide is (or the official documentation for that matter) if you do not try things out.

Why would you migrate your services to Google Cloud Platform?

Consuming resources from GCP, like storage or computing power, provides the following benefits:

- No need to spend a lot of money upfront for hardware

- No need to upgrade your hardware and migrate your data and services every few years

- Ability to scale to adjust to the demand, paying only for the resources you consume

- Ability to create proofs of concept quickly, since provisioning resources is fast

- Secure and manage your APIs

- Not just infrastructure: data analytics and machine learning services are available in GCP

GCP makes it easy to experiment and use the resources you need in an economical way.

How to optimize your VMs to reduce costs in GCP

In general, you will only be charged for the time your instances are running. Google will not charge you for stopped instances. However, if they consume resources, like disks or reserved IPs, you might incur charges.

Here are some ways you can optimize the cost of running your applications in GCP.

Custom Machine Types

GCP provides different machine families with predefined amounts of RAM and CPUs:

- General-purpose. Offers the best price-performance ratio for a variety of workloads.

- Memory-optimized. Ideal for memory-intensive workloads. They offer more memory per core than other machine types.

- Compute-optimized. They offer the highest performance per core and are optimized for compute-intensive workloads.

- Shared-core. These machine types timeshare a physical core. This can be a cost-effective method for running small applications.

You can also create a custom machine type with the amount of RAM and the number of CPUs you need.

Preemptible VMs

You can use preemptible virtual machines to save up to 80% of your costs. They are ideal for fault-tolerant, non-critical applications. You can save the progress of your job in a persistent disk using a shut-down script to continue where you left off.

Google may stop your instances at any time (with a 30-second warning) and will always stop them after 24 hours.
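To make the "continue where you left off" idea concrete, here is a minimal sketch of a resumable job. It assumes the checkpoint file lives on a persistent disk that survives the preemption. The function names (`load_checkpoint`, `save_checkpoint`, `run_job`) and the `stop_after` parameter, which only simulates a preemption, are my own and not part of any GCP API. The sketch checkpoints after every item, so a shut-down script would only need to flush whatever is still pending.

```python
import json
import os
import tempfile


def load_checkpoint(path):
    """Return the index of the next item to process, or 0 if no checkpoint exists."""
    if not os.path.exists(path):
        return 0
    with open(path) as f:
        return json.load(f)["next_index"]


def save_checkpoint(path, next_index):
    """Write progress to a temp file, then swap it in, so a half-written
    file never replaces a good checkpoint."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory)
    with os.fdopen(fd, "w") as f:
        json.dump({"next_index": next_index}, f)
    os.replace(tmp_path, path)


def run_job(items, checkpoint_path, process, stop_after=None):
    """Process items in order, resuming from the last checkpoint.

    stop_after is a hypothetical knob that simulates a preemption after
    that many items in the current run. Returns True when all items are done.
    """
    start = load_checkpoint(checkpoint_path)
    done_this_run = 0
    for index in range(start, len(items)):
        if stop_after is not None and done_this_run >= stop_after:
            return False  # interrupted; progress is already on disk
        process(items[index])
        save_checkpoint(checkpoint_path, index + 1)
        done_this_run += 1
    return True
```

Calling `run_job` again with the same checkpoint path after an interruption picks up at the first unprocessed item instead of starting over.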

To reduce the chances of getting your VMs shut down, Google recommends:

- Using many small instances and

- Running your jobs during off-peak times.

Note: Start-up and shut-down scripts apply to non-preemptible VMs as well. You can use them to control the behavior of your machine when it starts or stops. For instance, to install software, download data, or back up logs.

There are two options to define these scripts:

- When you are creating your instance in the Google Cloud Console, there is a field to paste your code.

- Setting a metadata key (for example, startup-script-url) that points your instance to a script stored in Google Cloud Storage.

The latter is preferred because it is easier to create many instances and to manage the script.
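From inside a VM, the metadata server can be queried over plain HTTP. The sketch below builds such a request using only the standard library. The helper names (`metadata_request`, `read_metadata`) are my own. `read_metadata` only works on a Compute Engine instance, so treat it as an illustration rather than something to run on your laptop.

```python
import urllib.request

# Base URL of the Compute Engine metadata server (reachable only from inside a VM).
METADATA_ROOT = "http://metadata.google.internal/computeMetadata/v1"


def metadata_request(path):
    """Build a request for a metadata path such as
    'instance/attributes/startup-script-url'."""
    request = urllib.request.Request(f"{METADATA_ROOT}/{path}")
    # The metadata server rejects requests that lack this header.
    request.add_header("Metadata-Flavor", "Google")
    return request


def read_metadata(path, timeout=2.0):
    """Fetch a metadata value as text. Call this only from inside a VM."""
    with urllib.request.urlopen(metadata_request(path), timeout=timeout) as response:
        return response.read().decode("utf-8")
```

For example, `read_metadata("instance/attributes/startup-script-url")` would return the Cloud Storage location of the start-up script, if that key has been set on the instance.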

Sustained Use Discounts

The longer you use your virtual machines (and Cloud SQL instances), the higher the discount - up to 30%. Google does this automatically for you.

Committed Use Discounts

You can get up to 57% discount if you commit to a certain amount of CPU and RAM resources for a period of 1 to 3 years.
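To get a feel for these numbers, here is a small worked example. The hourly rate is hypothetical, and the percentages are the "up to" maximums quoted in this section, so read the results as best-case figures under those assumptions rather than a quote.

```python
def discounted_cost(hourly_rate, hours, discount_pct):
    """Cost of running one VM for `hours` after applying a percentage discount."""
    if not 0 <= discount_pct <= 100:
        raise ValueError("discount_pct must be between 0 and 100")
    return round(hourly_rate * hours * (1 - discount_pct / 100), 2)


HOURLY_RATE = 0.10      # hypothetical on-demand price in USD per hour
HOURS_PER_MONTH = 730   # roughly one month of continuous use

# Best-case monthly cost under each pricing option described above.
monthly_costs = {
    "on-demand": discounted_cost(HOURLY_RATE, HOURS_PER_MONTH, 0),
    "sustained use (30%)": discounted_cost(HOURLY_RATE, HOURS_PER_MONTH, 30),
    "committed use (57%)": discounted_cost(HOURLY_RATE, HOURS_PER_MONTH, 57),
    "preemptible (80%)": discounted_cost(HOURLY_RATE, HOURS_PER_MONTH, 80),
}

for option, cost in monthly_costs.items():
    print(f"{option}: ${cost:.2f}")
```

Each option is computed independently here. The sketch does not assume the discounts can be combined.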

To estimate your costs, use the Pricing Calculator. This helps you avoid surprises on your bill. You can also create budget alerts to be notified when your spending approaches a limit.

How to manage resources in GCP

In this section, I will explain how you can manage and administer your Google Cloud resources.

Resource Hierarchy

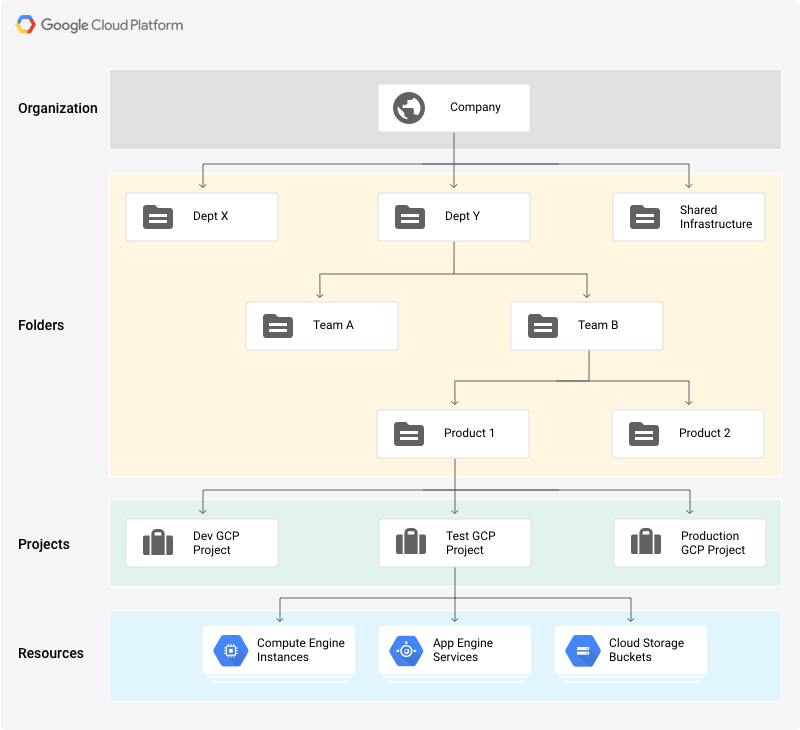

There are four types of resources that can be managed through Resource Manager:

- The organization resource. It is the root node in the resource hierarchy. It represents an organization, for example, a company.

- The project resource. Projects are required to create resources. You can use them, for example, to separate production and development environments.

- The folder resource. Folders provide an extra level of project isolation. For example, you can create a folder for each department in a company.

- Resources. Virtual machines, database instances, load balancers, and so on.

There are quotas that limit the maximum number of resources you can create to prevent unexpected spikes in billing. However, most quotas can be increased by opening a support ticket.

Resources in GCP follow a hierarchy via a parent/child relationship, similar to a traditional file system, where:

- Permissions are inherited as we descend the hierarchy. For example, permissions granted at the organization level will be propagated to all the folders and projects.

- More permissive parent policies always overrule more restrictive child policies.

This hierarchical organization helps you manage common aspects of your resources, such as access control and configuration settings.
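The inheritance rules above can be sketched as a walk up the tree that merges every policy it finds. This is a simplified model of my own, not the Resource Manager API: the `Node` class and `effective_policy` function are hypothetical, and the member and resource names are made up.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Node:
    """An organization, folder, or project in a simplified hierarchy."""
    name: str
    parent: Optional["Node"] = None
    bindings: dict = field(default_factory=dict)  # role -> set of members


def effective_policy(node):
    """Union the bindings of a node with those of all its ancestors.

    Because the result is a union, a grant made higher up cannot be
    taken away by a more restrictive policy lower down.
    """
    merged = {}
    current = node
    while current is not None:
        for role, members in current.bindings.items():
            merged.setdefault(role, set()).update(members)
        current = current.parent
    return merged


org = Node("example.com", bindings={"roles/viewer": {"user:alice@example.com"}})
folder = Node("engineering", parent=org)
project = Node("game-prod", parent=folder,
               bindings={"roles/editor": {"user:bob@example.com"}})

print(effective_policy(project))
```

In this model, alice can view everything in the project even though the project's own policy never mentions her.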

You can create super admin accounts that have access to every resource in your organization. Since they are very powerful, make sure you follow Google’s best practices.

Labels

Labels are key-value pairs you can use to organize your resources in GCP. Once you attach a label to a resource (for instance, to a virtual machine), you can filter based on that label. Labels are also useful for breaking down your bill.

Some common use cases:

- Environments: prod, test, and so on.

- Team or product owners

- Components: backend, frontend, and so on.

- Resource state: active, archive, and so on.
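Here is a minimal sketch of both uses of labels: filtering resources and breaking down costs. The resource records, their `monthly_cost` field, and the helper names are all hypothetical. In practice you would filter in the console or the API and read costs from your billing export.

```python
from collections import defaultdict


def filter_by_labels(resources, **wanted):
    """Return the resources whose labels match every requested key-value pair."""
    return [
        resource for resource in resources
        if all(resource["labels"].get(key) == value for key, value in wanted.items())
    ]


def cost_by_label(resources, key):
    """Sum monthly cost per value of one label key."""
    totals = defaultdict(float)
    for resource in resources:
        totals[resource["labels"].get(key, "unlabeled")] += resource["monthly_cost"]
    return dict(totals)


# Hypothetical inventory with made-up costs in USD.
resources = [
    {"name": "vm-1", "labels": {"env": "prod", "component": "backend"}, "monthly_cost": 40.0},
    {"name": "vm-2", "labels": {"env": "test", "component": "backend"}, "monthly_cost": 10.0},
    {"name": "db-1", "labels": {"env": "prod", "component": "database"}, "monthly_cost": 25.0},
]

print([r["name"] for r in filter_by_labels(resources, env="prod")])
print(cost_by_label(resources, "env"))
```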

Labels vs Network tags

These two similar concepts seem to generate some confusion. I have summarized the differences in this table:

| Labels | Network tags |
| --- | --- |
| Applied to any GCP resource | Applied only for VPC resources |
| Just organize resources | Affect how resources work (ex: through application of firewall rules) |

Cloud IAM

Simply put, Cloud IAM controls who can do what on which resource. A resource can be a virtual machine, a database instance, a user, and so on.

It is important to notice that permissions are not directly assigned to users. Instead, they are bundled into roles, which are assigned to members. A policy is a collection of one or more bindings of a set of members to a role.
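The relationship between members, roles, and permissions is easier to see in code. The sketch below models a policy as a list of bindings and checks whether a member holds a permission. The `is_allowed` function is my own simplification, the member names are made up, and the role-to-permission table is an abbreviated subset written by hand, not the full definition of those roles.

```python
# Abbreviated, hand-written subset of what each role contains (an assumption
# for illustration; the real roles include more permissions).
ROLE_PERMISSIONS = {
    "roles/storage.objectViewer": {"storage.objects.get", "storage.objects.list"},
    "roles/storage.objectCreator": {"storage.objects.create"},
}

# A policy is a collection of bindings; each binding ties members to one role.
policy = {
    "bindings": [
        {
            "role": "roles/storage.objectViewer",
            "members": ["user:alice@example.com", "group:analysts@example.com"],
        },
        {
            "role": "roles/storage.objectCreator",
            "members": ["serviceAccount:uploader@my-project.iam.gserviceaccount.com"],
        },
    ]
}


def is_allowed(policy, member, permission, role_permissions=ROLE_PERMISSIONS):
    """Return True if any binding grants `member` a role containing `permission`."""
    for binding in policy["bindings"]:
        granted = role_permissions.get(binding["role"], set())
        if member in binding["members"] and permission in granted:
            return True
    return False


print(is_allowed(policy, "user:alice@example.com", "storage.objects.get"))
print(is_allowed(policy, "user:alice@example.com", "storage.objects.create"))
```

Notice that the check never looks up a permission on the user directly. It always goes through a role, which is the point of the paragraph above.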

Identities

In a GCP project, identities are represented by Google accounts, created outside of GCP, and defined by an email address (not necessarily @gmail.com). There are different types:

- Google accounts. To represent people: engineers, administrators, and so on.

- Service accounts. Used to identify non-human users: applications, services, virtual machines, and others. The authentication process is defined by account keys, which can be managed by Google or by users (only for user-created service accounts).

- Google Groups. A group is a collection of Google accounts and service accounts.

- G Suite Domain. The type of account you can use to identify organizations. If your organization is already using Active Directory, it can be synchronized with Cloud IAM using Cloud Identity.

- allAuthenticatedUsers. To represent any authenticated user in GCP.

- allUsers. To represent anyone, authenticated or not.

Regarding service accounts, some of Google’s best practices include:

- Not using the default service account

- Applying the Principle of Least Privilege. For instance:

  1. Restrict who can act as a service account

  2. Grant only the minimum set of permissions that the account needs

  3. Create service accounts for each service, only with the permissions the account needs

Roles

A role is a collection of permissions. There are three types of roles:

- Primitive. Original GCP roles that apply to the entire project. There are three concentric roles: Viewer, Editor, and Owner. Editor contains Viewer and Owner contains Editor.

- Predefined. Provides access to specific services, for example, storage.admin.

- Custom. Lets you create your own roles, combining the specific permissions you need.

When assigning roles, follow the principle of least privilege, too. In general, prefer predefined over primitive roles.

Cloud Deployment Manager

Cloud Deployment Manager automates repeatable tasks like provisioning, configuration, and deployments for any number of machines.

It is Google’s Infrastructure as Code service, similar to Terraform - although you can deploy only GCP resources. It is used by GCP Marketplace to create pre-configured deployments.

You define your configuration in YAML files, listing the resources (created through API calls) you want to create and their properties. Resources are defined by their name (VM-1, disk-1), type (compute.v1.disk, compute.v1.instance) and properties (zone: europe-west4-a, boot: false).

To increase performance, resources are deployed in parallel. Therefore you need to specify any dependencies using references. For instance, do not create virtual machine VM-1 until the persistent disk disk-1 has been created. In contrast, Terraform would figure out the dependencies on its own.
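To see why explicit dependencies matter when resources are created in parallel, here is a minimal sketch that groups resources into "waves" that could be deployed at the same time. It uses the standard library's `graphlib` (Python 3.9+) and is only a model of the idea. I am not claiming this is how Deployment Manager schedules work internally, and the resource names are made up.

```python
from graphlib import TopologicalSorter


def deployment_waves(depends_on):
    """Group resources into waves; every resource in a wave can be created
    in parallel because all of its dependencies are in earlier waves.

    depends_on maps a resource name to the set of names it references.
    """
    sorter = TopologicalSorter(depends_on)
    sorter.prepare()  # raises graphlib.CycleError on circular references
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)
    return waves


# vm-1 references disk-1 and network-1, so it must wait for both.
dependencies = {
    "vm-1": {"disk-1", "network-1"},
    "disk-1": set(),
    "network-1": set(),
    "vm-2": set(),
}

print(deployment_waves(dependencies))
```

Without the entry for `vm-1`, all four resources would land in the same wave, and the VM could be requested before its disk exists.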

You can modularize your configuration files using templates so that they can be independently updated and shared. Templates can be defined in Python or Jinja2. The contents of your templates will be inlined in the configuration file that references them.
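As a rough illustration, a Python template is a module that exposes a `GenerateConfig(context)` function and returns a dictionary of resources. The sketch below follows that shape as I understand it. The property names (`zone`, `sizeGb`) are ones I chose for this template, and the `SimpleNamespace` object at the bottom is only a stand-in for the context that Deployment Manager would pass in.

```python
from types import SimpleNamespace


def GenerateConfig(context):
    """Return the resources for one persistent disk.

    context.env holds deployment-level values such as the name;
    context.properties holds the values supplied by the configuration
    file that imports this template.
    """
    disk_name = context.env["name"] + "-disk"
    return {
        "resources": [
            {
                "name": disk_name,
                "type": "compute.v1.disk",
                "properties": {
                    "zone": context.properties["zone"],
                    "sizeGb": context.properties["sizeGb"],
                },
            }
        ]
    }


# Stand-in context for trying the template locally (hypothetical values).
fake_context = SimpleNamespace(
    env={"name": "demo"},
    properties={"zone": "europe-west4-a", "sizeGb": 10},
)

print(GenerateConfig(fake_context))
```

Because the template is just a function, you can reuse it with different properties to stamp out many similar resources.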

Deployment Manager will create a manifest containing your original configuration, any templates you have imported, and the expanded list of all the resources you want to create.

Cloud Operations (formerly Stackdriver)

Cloud Operations provides a set of tools for monitoring, logging, debugging, error reporting, profiling, and tracing of resources in GCP, as well as in AWS and even on-premises.

Cloud Logging

Cloud Logging is GCP’s centralized solution for real-time log management. For each of your projects, it allows you to store, search, analyze, monitor, and alert on logging data:

- By default, data will be stored for a certain period of time. The retention period varies depending on the type of log. You cannot retrieve logs after they have passed this retention period.

- Logs can be exported for different purposes. To do this, you create a sink, which is composed of a filter (to select what you want to log) and a destination: Google Cloud Storage (GCS) for long term retention, BigQuery for analytics, or Pub/Sub to stream it into other applications.

- You can create log-based metrics in Cloud Monitoring and even get alerted when something goes wrong.
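The sink idea (a filter plus a destination) can be modeled in a few lines. This is an in-memory sketch, not the Cloud Logging client library: the `Sink` class, `route`, and `at_least` are hypothetical helpers, and the bucket, project, and dataset names in the destinations are made up.

```python
from dataclasses import dataclass
from typing import Callable

SEVERITY_ORDER = ["DEBUG", "INFO", "WARNING", "ERROR", "CRITICAL"]


@dataclass
class Sink:
    """A filter that selects log entries, plus the place they are exported to."""
    name: str
    matches: Callable[[dict], bool]
    destination: str


def at_least(level):
    """Build a filter that keeps entries at or above the given severity."""
    threshold = SEVERITY_ORDER.index(level)
    return lambda entry: SEVERITY_ORDER.index(entry["severity"]) >= threshold


def route(entries, sinks):
    """Return, per sink, the entries that its filter selects."""
    exported = {sink.name: [] for sink in sinks}
    for entry in entries:
        for sink in sinks:
            if sink.matches(entry):
                exported[sink.name].append(entry)
    return exported


sinks = [
    # Keep everything in Cloud Storage for long-term retention.
    Sink("archive", lambda entry: True, "storage.googleapis.com/my-archive-bucket"),
    # Send only errors to BigQuery for analysis.
    Sink("errors", at_least("ERROR"),
         "bigquery.googleapis.com/projects/my-project/datasets/logs"),
]

entries = [
    {"severity": "INFO", "message": "service started"},
    {"severity": "ERROR", "message": "database unreachable"},
]

print(route(entries, sinks))
```

The same entry can match several sinks, which is how one log stream can feed both an archive and an analytics dataset.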

A log is a named collection of log entries. Each log entry records a status or an event and includes the name of its log, for example, compute.googleapis.com/activity. There are two main types of logs:

First, User Logs:

- These are generated by your applications and services.

- They are written to Cloud Logging using the Cloud Logging API, client libraries, or logging agents installed on your virtual machines.

- Logging agents can also stream logs from common third-party applications like MySQL, MongoDB, or Tomcat.

Second, Security logs, divided into:

- Audit logs, for administrative changes, system events, and data access to your resources. For example, they record who created a particular database instance or when a live migration took place. Data access logs must be explicitly enabled and may incur additional charges. The rest are enabled by default, cannot be disabled, and are free of charge.

- Access Transparency logs, for actions taken by Google staff when they access your resources, for example, to investigate an issue you reported to the support team.

VPC Flow Logs

They are specific to VPC networks (which I will introduce later). VPC flow logs record a sample of network flows sent from and received by VM instances, which can later be accessed in Cloud Logging.

They can be used to monitor network performance, usage, forensics, real-time security analysis, and expense optimization.

Note: you may want to log your billing data for analysis. In this case, you do not create a sink. You can directly export your reports to BigQuery.

Cloud Monitoring

Cloud Monitoring lets you monitor the performance of your applications and infrastructure and visualize it in dashboards. You can create uptime checks to detect resources that are down and get alerted based on these checks, so that you can fix problems in your environment. You can monitor resources in GCP, AWS, and even on-premises.

It is recommended to create a separate project for Cloud Monitoring since it can keep track of resources across multiple projects.

Also, it is recommended to install a monitoring agent in your virtual machines to send application metrics (including many third-party applications) to Cloud Monitoring. Otherwise, Cloud Monitoring will only display CPU, disk traffic, network traffic, and uptime metrics.

Alerts

To receive alerts, you must declare an alerting policy. An alerting policy defines the conditions under which a service is considered unhealthy. When the conditions are met, a new incident will be created and notifications will be sent (via email, Slack, SMS, PagerDuty, etc).
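Conceptually, an alerting policy boils down to "if a metric violates a condition for long enough, open an incident and notify someone". The sketch below models that logic with hypothetical names (`Condition`, `violates`, `evaluate`). It is not the Cloud Monitoring API, and the CPU samples are invented.

```python
from dataclasses import dataclass


@dataclass
class Condition:
    """Unhealthy when the metric stays above `threshold` for
    `duration_points` consecutive samples."""
    threshold: float
    duration_points: int


def violates(samples, condition):
    """Return True if the samples contain a long enough run above the threshold."""
    streak = 0
    for value in samples:
        streak = streak + 1 if value > condition.threshold else 0
        if streak >= condition.duration_points:
            return True
    return False


def evaluate(samples, condition, notify):
    """Open an incident and send a notification when the condition is met."""
    if not violates(samples, condition):
        return None
    incident = {"state": "open", "threshold": condition.threshold}
    notify(incident)
    return incident


# CPU utilization samples (made up). One spike alone should not trigger an alert.
high_cpu = Condition(threshold=0.9, duration_points=3)
notifications = []
evaluate([0.95, 0.40, 0.93, 0.96, 0.97], high_cpu, notifications.append)
print(notifications)
```

Requiring several consecutive samples is a design choice that trades a slightly slower alert for fewer false alarms.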

A policy belongs to an individual workspace, which can contain a maximum of 500 policies.

Trace

Trace helps find bottlenecks in your services. You can use this service to figure out how long it takes to handle a request, which microservice takes the longest to respond, where to focus to reduce the overall latency, and so on.
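The kind of question Trace answers can be illustrated with a toy example. Assume one request was handled by three services in sequence and we recorded when each one started and finished. The span records and helper names below are hypothetical and far simpler than real trace data.

```python
def duration_ms(span):
    """Elapsed time of one span in milliseconds."""
    return span["end_ms"] - span["start_ms"]


def slowest_span(spans):
    """Return the span that took the longest."""
    return max(spans, key=duration_ms)


def breakdown(spans):
    """List (name, duration) pairs from slowest to fastest."""
    return sorted(((s["name"], duration_ms(s)) for s in spans),
                  key=lambda pair: -pair[1])


# One request, three sequential steps (made-up timings).
spans = [
    {"name": "auth", "start_ms": 0, "end_ms": 20},
    {"name": "db", "start_ms": 20, "end_ms": 140},
    {"name": "render", "start_ms": 140, "end_ms": 170},
]

print(slowest_span(spans)["name"])
print(breakdown(spans))
```

In this example the database call accounts for most of the 170 ms, so that is where optimization effort would pay off first.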

It is enabled by default for applications running on Google App Engine (GAE) - Standard environment - but can be used for applications running on GCE, GKE, and Google App Engine Flexible.

Error Reporting

Error Reporting will aggregate and display errors produced in services written in Go, Java, Node.js, PHP, Python, Ruby, or .NET, running on GCE, GKE, GAE, Cloud Functions, or Cloud Run.

Debug

Debug lets you inspect the application’s state without stopping your service. It currently supports Java, Go, Node.js, and Python. It is automatically integrated with GAE but can be used on GCE, GKE, and Cloud Run.

Profile

Profiler continuously gathers CPU usage and memory-allocation information from your applications. To use it, you need to install a profiling agent.
