At a recent conference on Responsible AI for Social Empowerment (RAISE), held in India, the topic of discussion was explainable AI. Explainable AI is a critical element of the broader discipline of responsible AI, which encompasses ethics, regulations, and governance across a range of AI-related risks and issues, including bias, transparency, explicability, interpretability, robustness, safety, security, and privacy.

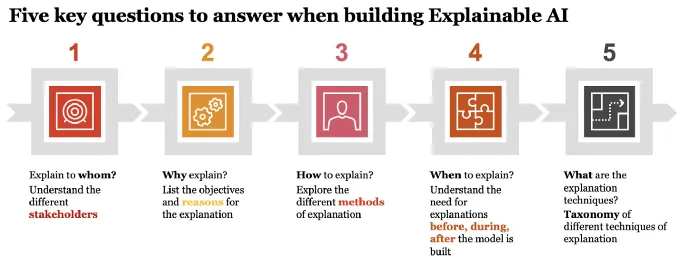

Interpretability and explainability are closely related. Interpretability operates at the model level: the objective is to understand how the model as a whole arrives at its decisions or predictions. Explainability operates at the level of an individual instance: the objective is to understand why the model made a specific decision or prediction. When it comes to explainable AI, we need to consider five key questions: Whom to explain to? Why explain? When to explain? How to explain? What is the explanation?
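To make the model-level versus instance-level distinction concrete, here is a minimal sketch in Python. Everything in it is an illustrative assumption rather than something from the conference: the linear scoring model, the feature names, the weights, and the applicant values are all hypothetical, and the features are assumed to be on comparable scales. The model-level view ranks features by the size of their weights; the instance-level view shows how much each feature contributed to one particular prediction.

```python
# Hypothetical linear scoring model. The feature names, weights, and bias
# are illustrative assumptions, not taken from any real system.
WEIGHTS = {"income": 0.5, "debt": -0.8, "age": 0.1}
BIAS = 0.2


def predict(instance):
    """Score a single instance with the linear model."""
    return BIAS + sum(WEIGHTS[name] * value for name, value in instance.items())


def global_importance():
    """Model-level view: rank features by the absolute size of their weight."""
    return sorted(WEIGHTS, key=lambda name: abs(WEIGHTS[name]), reverse=True)


def local_contributions(instance):
    """Instance-level view: each feature's contribution to this one score."""
    return {name: WEIGHTS[name] * value for name, value in instance.items()}


# A made-up applicant, with feature values assumed to be already scaled.
applicant = {"income": 2.0, "debt": 0.5, "age": 3.0}

print("Model-level ranking:", global_importance())
print("Contributions for this applicant:", local_contributions(applicant))
print("Score for this applicant:", predict(applicant))
```

In this toy setup the two views can disagree: `debt` carries the largest weight in the model overall, yet for this particular applicant `income` moves the score the most. That gap is one reason the two questions are worth asking separately.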

#data-science #artificial-intelligence #responsible-ai #risk #explainable-ai