The terminology of a specific domain is often difficult to get started with. Coming from a software engineering background, I find that machine learning has many such terms that I need to remember in order to use the tools and read the articles.

Some basic terms are Precision, Recall, and F1-Score. These relate to getting a finer-grained idea of how well a classifier is doing, as opposed to just looking at overall accuracy. Writing an explanation forces me to think it through, and helps me remember the topic myself. That’s why I like to write these articles.

I am looking at a binary classifier in this article. The same concepts apply more broadly; they just require a bit more consideration for multi-class problems. But that is something to consider another time.

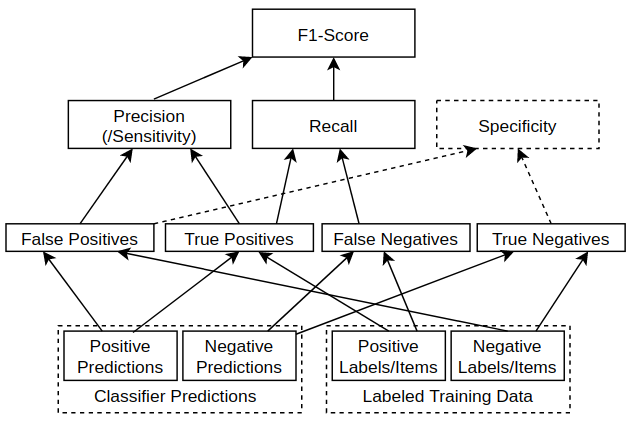

Before going into the details, an overview figure is always nice:

Hierarchy of Metrics from raw measurements / labeled data to F1-Score. Image by Author.

At first glance, it is a bit of a messy web. No need to worry about the details for now, but we can look back at this during the following sections when explaining the details from the bottom up. The metrics form a hierarchy starting with the _true/false negatives/positives_ (at the bottom), and building up all the way to the _F1-score_ to bind them all together. Let's build up from there.

True/False Positives and Negatives

A binary classifier can be viewed as classifying instances as positive or negative:

- Positive: The instance is classified as a member of the class the classifier is trying to identify. For example, a classifier looking for cat photos would classify photos with cats as positive (when correct).

- Negative: The instance is classified as not being a member of the class we are trying to identify. For example, a classifier looking for cat photos should classify photos with dogs (and no cats) as negative.
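To make this concrete for myself, here is a minimal Python sketch of how the positive/negative predictions combine with the real labels. A prediction is "true" when it matches the real label and "false" when it does not, which gives the four outcomes at the bottom of the hierarchy. The function name `confusion_counts` and the six example labels are made up for illustration, so treat this as a sketch rather than how any particular library does it.

```python
from collections import Counter


def confusion_counts(y_true, y_pred):
    """Count the four outcomes of a binary classifier.

    y_true and y_pred are equal-length sequences of booleans,
    where True means "cat" (the positive class).
    """
    counts = Counter()
    for actual, predicted in zip(y_true, y_pred):
        if predicted and actual:
            counts["TP"] += 1  # said cat, and it was a cat
        elif predicted and not actual:
            counts["FP"] += 1  # said cat, but it was not a cat
        elif not predicted and actual:
            counts["FN"] += 1  # said not cat, but it was a cat
        else:
            counts["TN"] += 1  # said not cat, and it was not a cat
    return counts


# Made-up labels for six photos: True = the photo contains a cat.
actual_labels = [True, True, False, False, True, False]
predicted_labels = [True, False, False, True, True, False]

counts = confusion_counts(actual_labels, predicted_labels)
print(dict(counts))
```

With these made-up labels, the classifier gets two cat photos and two non-cat photos right, misses one cat, and mistakes one non-cat photo for a cat.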
