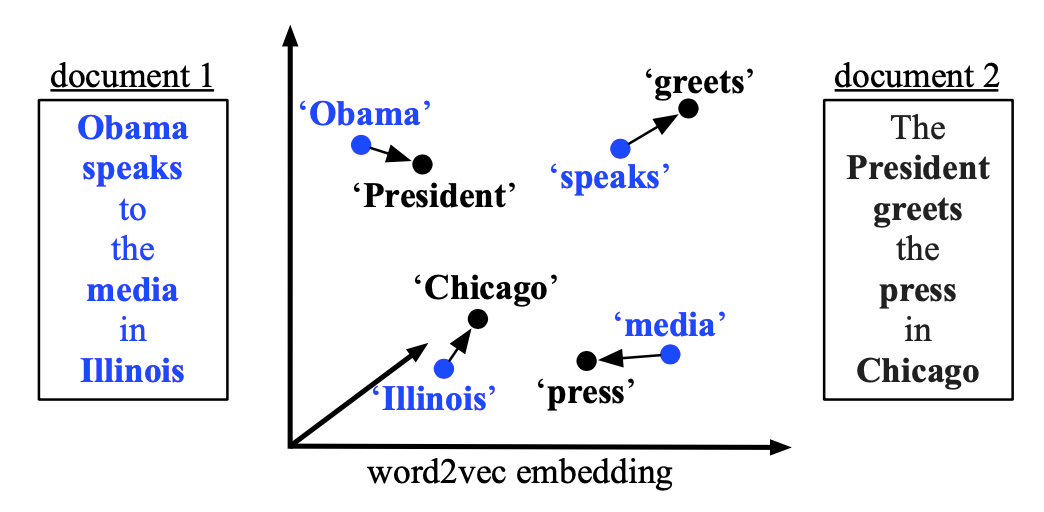

Document classification and document retrieval have a wide range of applications. An essential part of document classification is generating good document representations. In 2015, Matt J. Kusner et al. presented Word Mover’s Distance (WMD) [1], which incorporates word embeddings into the computation of the distance between two documents. Given pre-trained word embeddings, the dissimilarity between documents can be measured in a semantically meaningful way by computing “the minimum amount of distance that the embedded words of one document need to ‘travel’ to reach the embedded words of another”.
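To make the "travel" idea concrete, here is a minimal sketch in plain Python. It is not the implementation from [1]. It covers only a special case: both documents contain the same number of distinct words, and each word occurs exactly once. In that case every word carries the same weight, so the cheapest way to move one document onto the other is a one-to-one matching of words, which we can find by brute force over permutations. The 2-D vectors in `EMBEDDINGS` and the function name `toy_wmd` are made up for illustration; real word embeddings have hundreds of dimensions and come from a pre-trained model.

```python
from itertools import permutations
from math import dist

# Hypothetical 2-D "embeddings", invented for this example only.
EMBEDDINGS = {
    "obama": (1.0, 0.0),
    "president": (1.0, 0.2),
    "speaks": (0.0, 1.0),
    "greets": (0.0, 1.2),
    "media": (2.0, 2.0),
    "press": (2.0, 2.1),
}


def toy_wmd(doc_a, doc_b, embeddings=EMBEDDINGS):
    """Travel cost between two equal-length lists of distinct words.

    Assumes each word occurs once, so every word has weight 1/n and the
    cheapest transport plan is a one-to-one matching of words.
    """
    if not doc_a or len(doc_a) != len(doc_b):
        raise ValueError("this sketch needs two non-empty documents of equal length")
    n = len(doc_a)
    # Try every one-to-one matching and keep the cheapest total distance.
    cheapest = min(
        sum(dist(embeddings[a], embeddings[b]) for a, b in zip(doc_a, matching))
        for matching in permutations(doc_b)
    )
    # Each word carries 1/n of the document's mass.
    return cheapest / n


if __name__ == "__main__":
    d1 = ["obama", "speaks", "media"]
    d2 = ["president", "greets", "press"]
    print(toy_wmd(d1, d2))
```

With these made-up vectors, the cheapest matching pairs "obama" with "president", "speaks" with "greets", and "media" with "press", so the two documents end up close even though they share no words. Brute force is only workable for a handful of words; the general problem, with unequal lengths and word frequencies, needs an optimal transport solver.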

In the following sections, we will discuss the principle of WMD, its constraints and approximations, prefetch and prune, and its performance.
