Web scraping has been used to extract data from websites almost from the time the World Wide Web was born. In the early days, scraping was mainly done on static pages — those with known elements, tags, and data.

More recently, however, advanced technologies in web development have made the task a bit more difficult. In this article, we’ll explore how we might go about scraping data in the case that new technology and other factors prevent standard scraping.

Traditional Data Scraping

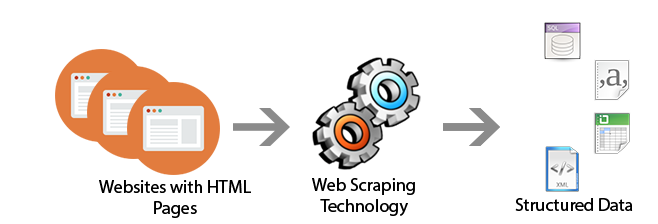

As most websites produce pages meant for human readability rather than automated reading, web scraping mainly consisted of programmatically digesting a web page’s mark-up data (think right-click, View Source), then detecting static patterns in that data that would allow the program to “read” various pieces of information and save it to a file or a database.

Courtesy of the author.

If report data were to be found, often, the data would be accessible by passing either form variables or parameters with the URL. For example:

https://www.myreportdata.com?month=12&year=2004&clientid=24823

Python has become one of the most popular web scraping languages due in part to the various web libraries that have been created for it. One popular library, Beautiful Soup, is designed to pull data out of HTML and XML files by allowing searching, navigating, and modifying tags (i.e., the parse tree).

Browser-based Scraping

Recently, I had a scraping project that seemed pretty straightforward and I was fully prepared to use traditional scraping to handle it. But as I got further into it, I found obstacles that could not be overcome with traditional methods.

Three main issues prevented me from my standard scraping methods:

- Certificate. There was a certificate required to be installed to access the portion of the website where the data was. When accessing the initial page, a prompt appeared asking me to select the proper certificate of those installed on my computer, and click OK.

- Iframes. The site used iframes, which messed up my normal scraping. Yes, I could try to find all iframe URLs, then build a sitemap, but that seemed like it could get unwieldy.

- JavaScript. The data was accessed after filling in a form with parameters (e.g., customer ID, date range, etc.). Normally, I would bypass the form and simply pass the form variables (via URL or as hidden form variables) to the result page and see the results. But in this case, the form contained JavaScript, which didn’t allow me to access the form variables in a normal fashion.

#selenium #crawling #scraping-with-python #python #web-scraping