Have you ever looked at someone and tried to work out what emotion they were feeling or what gesture they were making, only to end up confused? Maybe you once approached a baby that looked like this:

You thought it liked you and just wanted a cuddle, so you picked it up, and then this happened!

Oops! That did not work out as planned. Real-life uses are rarely as simple as the situation above, and they may require more precise analysis of human emotions and gestures. This kind of analysis is especially useful in any field where customer satisfaction, or simply knowing what the customer wants, is extremely important.

Today we will uncover a couple of deep learning models that do exactly that. The models we develop can identify some human emotions as well as a few gestures. We will try to identify 6 emotions: angry, happy, neutral, fear, sad, and surprise. We will also identify 4 types of gestures: loser, victory, super, and punch. The models will run in real time, and we will get a real-time vocal response from them.

The emotions model will be built from scratch using convolutional neural networks. For finger gestures, I will use transfer learning with the VGG-16 architecture and add custom layers to improve the model's accuracy. Each recognized emotion or finger gesture will trigger an appropriate vocal and text response. The metric we will use is accuracy: we aim for a validation accuracy of at least 50% for emotions model-1, over 65% for emotions model-2, and over 90% for the gestures model.
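To make the transfer-learning idea above concrete, here is a minimal sketch of a frozen VGG-16 base with a small custom head for the 4 gesture classes. It assumes TensorFlow's Keras API is installed. The function name `build_gesture_model`, the 224x224 input size, the 256-unit dense layer, the dropout rate, and the Adam optimizer are illustrative assumptions, not necessarily the configuration used later in this case study.

```python
from tensorflow.keras import layers, models
from tensorflow.keras.applications import VGG16


def build_gesture_model(num_classes=4, input_shape=(224, 224, 3), weights="imagenet"):
    """Hypothetical helper: VGG-16 feature extractor plus a custom classifier head."""
    # Load the convolutional part of VGG-16 without its original classifier.
    base = VGG16(weights=weights, include_top=False, input_shape=input_shape)
    # Freeze the pre-trained layers so only the new head is trained.
    base.trainable = False

    # Custom layers on top of the base (sizes are assumptions for illustration).
    model = models.Sequential([
        base,
        layers.Flatten(),
        layers.Dense(256, activation="relu"),
        layers.Dropout(0.5),
        layers.Dense(num_classes, activation="softmax"),
    ])
    # Accuracy is the metric named in the text above.
    model.compile(
        optimizer="adam",
        loss="categorical_crossentropy",
        metrics=["accuracy"],
    )
    return model
```

Calling `build_gesture_model()` downloads the ImageNet weights on first use. Passing `weights=None` builds the same architecture with random weights, which is handy for a quick shape check.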

Datasets:

Let us now look at the dataset choices we have available to us.

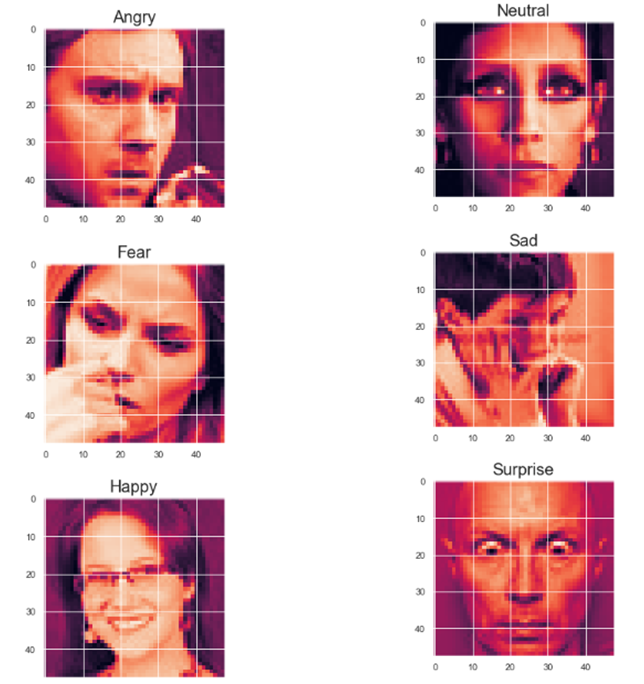

1. Kaggle’s fer2013 dataset: An open-source dataset containing 35,887 labeled grayscale images of various emotions, each of size 48x48. The Facial Expression Recognition dataset was published during the International Conference on Machine Learning (ICML). This Kaggle dataset is the primary dataset used for emotion analysis in this case study.

The dataset is provided as a spreadsheet in .csv format, so the pixel values have to be extracted from it. After pixel extraction and pre-processing, the dataset looks like the image below.
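The pixel extraction step described above can be sketched roughly as follows. This sketch assumes the CSV has an `emotion` column holding an integer label and a `pixels` column holding 48x48 = 2304 space-separated grayscale values, which is how the Kaggle release is commonly laid out. The helper name `parse_fer_rows` and the scaling to the range 0 to 1 are illustrative choices, not necessarily the pre-processing used in this case study.

```python
import csv
import io

IMAGE_SIDE = 48  # the images are 48x48 grayscale, as stated above


def parse_fer_rows(csv_text):
    """Hypothetical helper: yield (label, image) pairs from fer2013-style CSV text.

    Each image is a 48x48 nested list of floats scaled to [0, 1].
    """
    reader = csv.DictReader(io.StringIO(csv_text))
    for row in reader:
        # The 'pixels' field is assumed to be one space-separated string.
        values = [int(v) for v in row["pixels"].split()]
        if len(values) != IMAGE_SIDE * IMAGE_SIDE:
            raise ValueError(f"expected {IMAGE_SIDE * IMAGE_SIDE} pixels, got {len(values)}")
        # Reshape the flat list into rows and scale 0..255 to 0..1.
        image = [
            [v / 255.0 for v in values[r * IMAGE_SIDE:(r + 1) * IMAGE_SIDE]]
            for r in range(IMAGE_SIDE)
        ]
        yield int(row["emotion"]), image
```

With the real file, you could read it with `open("fer2013.csv").read()` (the path is an assumption) and pass the text to `parse_fer_rows`. For a dataset of this size, a streaming reader or NumPy arrays would be more memory-efficient than nested lists.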
