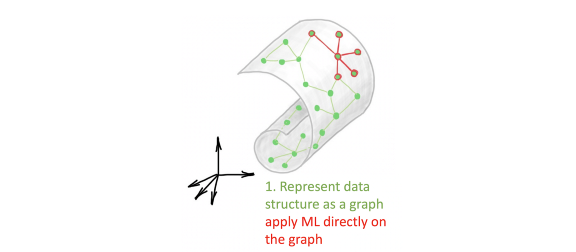

_TL;DR: Graph neural networks exploit relational inductive biases for data that come in the form of a graph. However, in many cases we do not have the graph readily available. Can graph deep learning still be applied in this case? In this post, I draw parallels between recent works on latent graph learning and older techniques of manifold learning._

The past few years have witnessed a surge of interest in developing ML methods for graph-structured data. Such data arise naturally in many domains, such as the social sciences (e.g. the Follow graph of users on Twitter or Facebook), chemistry (where molecules can be modelled as graphs of atoms connected by bonds), or biology (where interactions between different biomolecules are often modelled as a graph referred to as the interactome). Graph neural networks (GNNs), which I have covered extensively in my previous posts, are a particularly popular method of learning on such graphs: they apply local operations with shared parameters that exchange information between adjacent nodes.
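To make "local operations with shared parameters" concrete, here is a minimal sketch of one message-passing step in plain Python. It is an illustration under my own assumptions, not any particular GNN architecture: the function name `message_passing_step`, the mean aggregation, the ReLU nonlinearity, and the toy weights `W_self` and `W_neigh` are all hypothetical choices made for the example.

```python
from typing import Dict, List

Vector = List[float]
Matrix = List[Vector]


def matvec(W: Matrix, x: Vector) -> Vector:
    """Multiply matrix W (a list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def message_passing_step(
    features: Dict[int, Vector],
    neighbours: Dict[int, List[int]],
    W_self: Matrix,
    W_neigh: Matrix,
) -> Dict[int, Vector]:
    """One illustrative round of message passing (assumed design, see lead-in).

    Every node averages its neighbours' features, then combines that average
    with its own features using the SAME two weight matrices at every node.
    """
    updated = {}
    for node, x in features.items():
        nbrs = neighbours[node]
        dim = len(x)
        if nbrs:
            # Local operation: only adjacent nodes contribute.
            mean = [sum(features[j][k] for j in nbrs) / len(nbrs) for k in range(dim)]
        else:
            mean = [0.0] * dim
        own = matvec(W_self, x)      # shared parameters, node's own features
        msg = matvec(W_neigh, mean)  # shared parameters, aggregated message
        updated[node] = [max(0.0, a + b) for a, b in zip(own, msg)]  # ReLU
    return updated


# Toy path graph 0 - 1 - 2 with 2-dimensional node features.
neighbours = {0: [1], 1: [0, 2], 2: [1]}
features = {0: [1.0, 0.0], 1: [0.0, 1.0], 2: [1.0, 1.0]}
W_self = [[1.0, 0.0], [0.0, 1.0]]   # hypothetical weights
W_neigh = [[0.5, 0.0], [0.0, 0.5]]  # hypothetical weights

hidden = message_passing_step(features, neighbours, W_self, W_neigh)
```

Note that the function needs `neighbours` as an input: without a given graph there is nothing to aggregate over, which is exactly the situation this post is concerned with.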
