CPUs per node

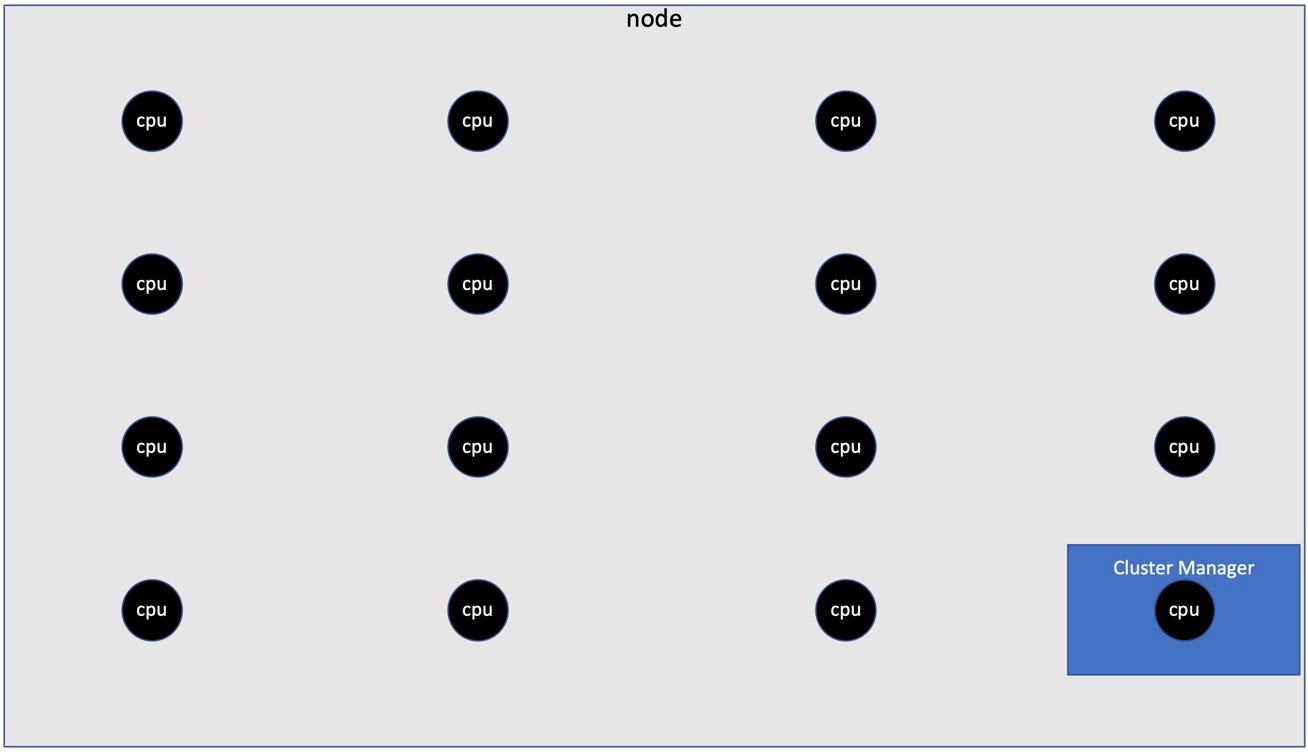

The first step to determining an efficient executor config is to figure out how many CPUs are available on the nodes in your cluster. To do so, you need to find out which EC2 instance type your cluster uses. For our discussion here, we’ll use r5.4xlarge, which according to the AWS EC2 Instance Pricing page has 16 vCPUs.

When we submit our jobs, we need to reserve one CPU for the operating system and the Cluster Manager. So rather than using all 16 CPUs for the job, we allocate 15 CPUs on each node for Spark processing.
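Writing the reservation arithmetic down makes the assumption explicit and easy to change for other instance types. This is a minimal sketch in Python: `usable_cores_per_node` is a hypothetical helper, and the default of one reserved core is this article's rule of thumb, not a Spark or AWS setting.

```python
# Minimal sketch: cores left for Spark on one node after reserving some for the
# OS and the Cluster Manager. The one-core reservation is an assumption (this
# article's rule of thumb), not a Spark or AWS default.

def usable_cores_per_node(vcpus: int, reserved: int = 1) -> int:
    """Return how many cores remain for Spark executors on a single node."""
    if reserved >= vcpus:
        raise ValueError("reserved cores must be fewer than the node's vCPUs")
    return vcpus - reserved


R5_4XLARGE_VCPUS = 16  # vCPU count listed on the AWS EC2 Instance Pricing page

print(usable_cores_per_node(R5_4XLARGE_VCPUS))  # 15
```

If you decide to reserve more headroom (for example, for other daemons on the node), only the `reserved` argument changes.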

CPUs per executor

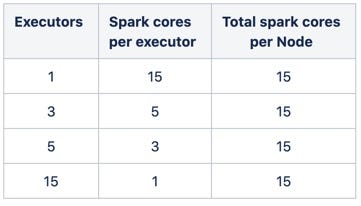

Now that we know how many CPUs are available on each node, we need to determine how many Spark cores to assign to each executor. Since 15 = 1 × 15 = 3 × 5 = 5 × 3 = 15 × 1, there are four different executor and core combinations that get us to 15 Spark cores per node:

Possible executor configurations
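The four combinations are simply the divisor pairs of the usable core count, so they can be enumerated mechanically. This is a minimal sketch: `executor_layouts` is a hypothetical helper, and mapping each option to `spark.executor.cores` (a real Spark property) assumes every executor on a node gets the same number of cores.

```python
# Minimal sketch: list every (executors per node, cores per executor) pair whose
# product equals the usable cores per node, so no core is left idle.
# Assumes all executors on a node are the same size.

def executor_layouts(cores_per_node: int) -> list[tuple[int, int]]:
    """Return (executors_per_node, cores_per_executor) pairs that use every core."""
    return [
        (cores_per_node // cores, cores)
        for cores in range(1, cores_per_node + 1)
        if cores_per_node % cores == 0  # keep only exact divisors
    ]


for executors, cores in executor_layouts(15):
    # spark.executor.cores is the per-executor setting; the executor count per
    # node follows from how the cluster manager packs those requests.
    print(f"{executors:>2} executor(s) x {cores:>2} core(s) -> spark.executor.cores={cores}")
```

How many executors actually land on each node also depends on how the cluster manager packs the requested cores and memory, so the executors-per-node column is a target rather than a value you pass directly.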

Let's explore the feasibility of each of these configurations.
