For the past three months, I have been exploring the latest techniques in Artificial Intelligence (AI) and Machine Learning (ML) to create abstract art. During my investigation, I learned that three things are needed to create abstract paintings: (A) source images, (B) an ML model, and (C) a lot of time to train the model on a high-end GPU. Before I discuss my work, let’s take a look at some prior research.

Background

Artificial Neural Networks

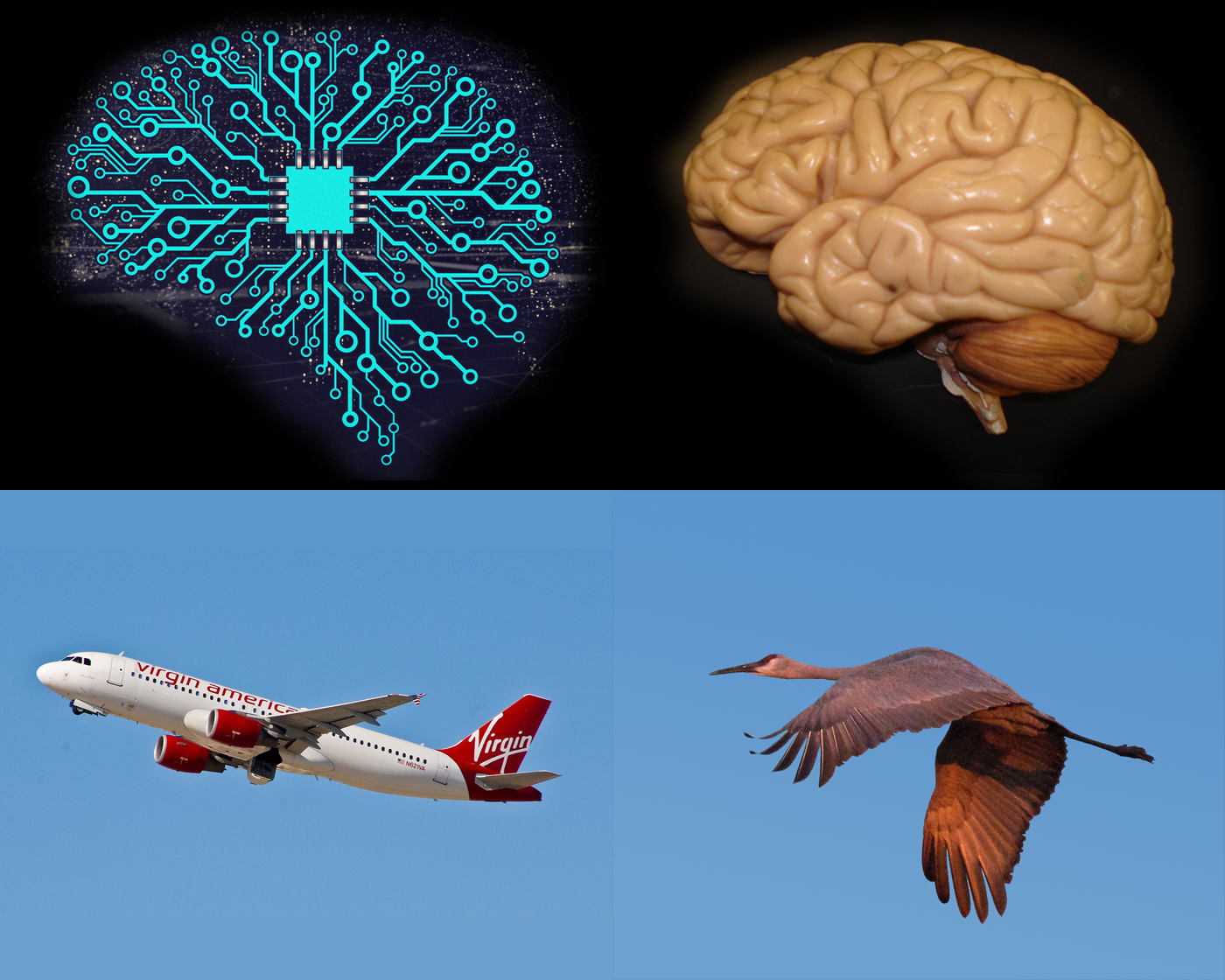

Warren McCulloch and Walter Pitts created a computational model for Neural Networks (NNs) back in 1943[1]. Their work led to research into both the biological processing in brains and the use of NNs for AI. Richard Nagyfi discusses the differences between Artificial Neural Networks (ANNs) and biological brains in this post. He offers an apt analogy that I will summarize here: ANNs are to brains as planes are to birds. Although the development of these technologies was inspired by biology, the actual implementations are very different!

Visual Analogy: Neural Network chip artwork by mikemacmarketin (CC BY 2.0), Brain model by biologycorner (CC BY-NC 2.0), Plane photo by Moto@Club4AG (CC BY 2.0), Bird photo by ksblack99 (CC PDM 1.0)

Both ANNs and biological brains learn from external stimuli to understand things and predict outcomes. One of the key differences is that ANNs work with floating-point numbers and not just binary firing of neurons. With ANNs it’s numbers in and numbers out.

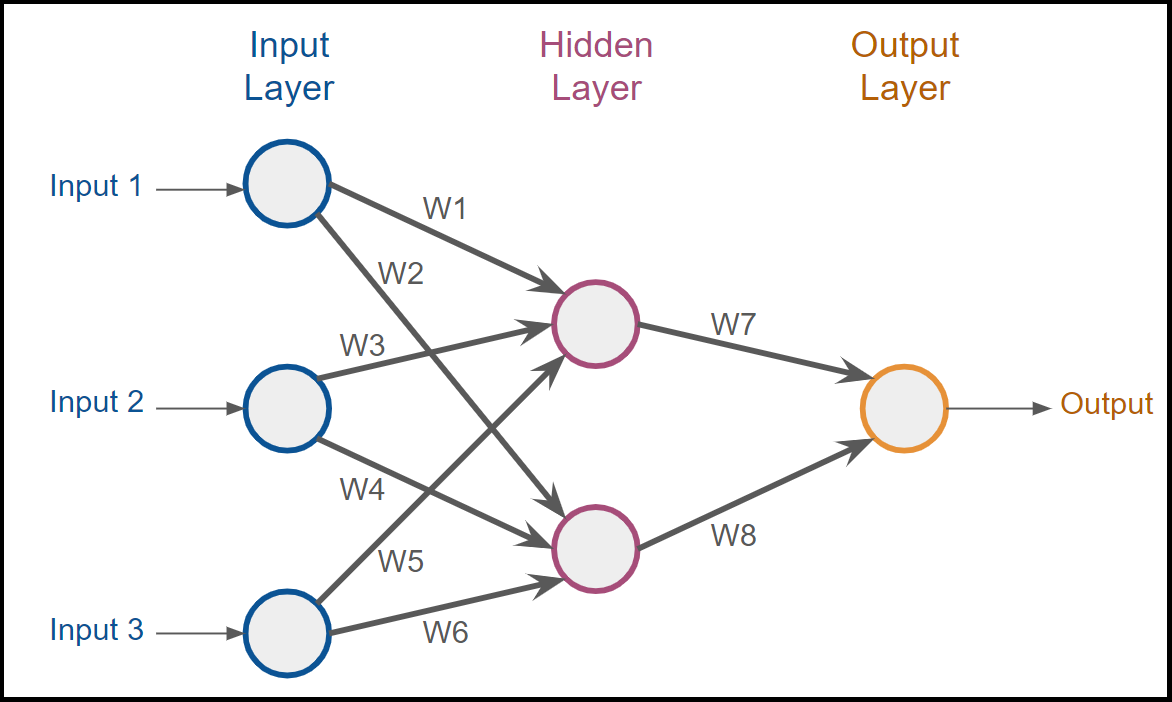

The diagram below shows the structure of a typical ANN. The inputs on the left are the numerical values that contain the incoming stimuli. The input layer is connected to one or more hidden layers that contain the memory of prior learning. The output layer, in this case just one number, is connected to each of the nodes in the hidden layer.

Diagram of a Typical ANN
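To make the "numbers in, numbers out" idea concrete, here is a minimal sketch of one pass through a network shaped like the one in the diagram. The layer sizes, the weight values, and the choice of tanh as the squashing function are all assumptions made for illustration; they are not taken from the diagram.

```python
import math

def forward(inputs, hidden_weights, output_weights):
    """Run one left-to-right pass through a network with a single hidden layer."""
    # Each hidden node sums its weighted inputs, then squashes the sum with tanh
    # (tanh is an assumed activation, chosen only to keep the sketch short).
    hidden = [
        math.tanh(sum(w * x for w, x in zip(node_weights, inputs)))
        for node_weights in hidden_weights
    ]
    # The single output node is a weighted sum of every hidden node.
    return sum(w * h for w, h in zip(output_weights, hidden))

# Hypothetical network: 3 inputs, 4 hidden nodes, 1 output.
hidden_weights = [
    [0.2, -0.5, 0.1],
    [0.7, 0.3, -0.4],
    [-0.6, 0.8, 0.5],
    [0.1, 0.1, 0.9],
]
output_weights = [0.5, -0.3, 0.8, 0.2]

prediction = forward([1.0, 0.5, -1.5], hidden_weights, output_weights)
print(prediction)  # a single floating-point number
```

Every value that flows through the function is a plain float, which is the key difference from the binary firing of biological neurons mentioned earlier.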

Each of the internal arrows represents a numerical weight that is used as a multiplier to modify the numbers as they are processed through the network from left to right. The system is trained with a dataset of input values and expected output values. The weights are initially set to random values. During training, the system runs through the training set multiple times, adjusting the weights to bring its outputs closer to the expected outputs. Eventually, the system will not only predict the outputs correctly from the training set, but it will also be able to predict outputs for unseen input values. This is the essence of Machine Learning (ML). The intelligence is in the weights. A more detailed discussion of the training process for ANNs can be found in Conor McDonald’s post, here.
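The training process described above can be sketched in a few lines of plain Python. This is a toy under stated assumptions, not a production recipe: the dataset (the target is the mean of two inputs), the network size, the tanh activation, the learning rate, and the use of per-example gradient descent are all hypothetical choices made for illustration.

```python
import math
import random

random.seed(0)  # fixed seed so the run is repeatable

N_IN, N_HIDDEN = 2, 3

# The weights start out as small random values.
w_hidden = [[random.uniform(-0.5, 0.5) for _ in range(N_IN)] for _ in range(N_HIDDEN)]
w_out = [random.uniform(-0.5, 0.5) for _ in range(N_HIDDEN)]

def forward(x):
    """Return the hidden activations and the single output for input x."""
    hidden = [math.tanh(sum(w * xi for w, xi in zip(row, x))) for row in w_hidden]
    return hidden, sum(w * h for w, h in zip(w_out, hidden))

# Hypothetical training set: the expected output is the mean of the two inputs.
train = [([a / 4, b / 4], (a / 4 + b / 4) / 2) for a in range(5) for b in range(5)]

def mean_squared_error():
    return sum((forward(x)[1] - y) ** 2 for x, y in train) / len(train)

initial_loss = mean_squared_error()

LEARNING_RATE = 0.1
for epoch in range(500):          # run through the training set many times
    for x, y in train:
        hidden, pred = forward(x)
        err = pred - y            # how far the output is from the expected value
        for j in range(N_HIDDEN):
            # Gradient for the hidden weights, computed before w_out[j] changes.
            grad_hidden = err * w_out[j] * (1 - hidden[j] ** 2)
            w_out[j] -= LEARNING_RATE * err * hidden[j]
            for i in range(N_IN):
                w_hidden[j][i] -= LEARNING_RATE * grad_hidden * x[i]

final_loss = mean_squared_error()
print(f"loss before training: {initial_loss:.4f}, after: {final_loss:.6f}")

# An input pair that is not in the training set.
print(forward([0.3, 0.6])[1])
```

After training, nothing about the network has changed except the numbers stored in `w_hidden` and `w_out`, which is what "the intelligence is in the weights" means in practice.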

Generative Adversarial Networks

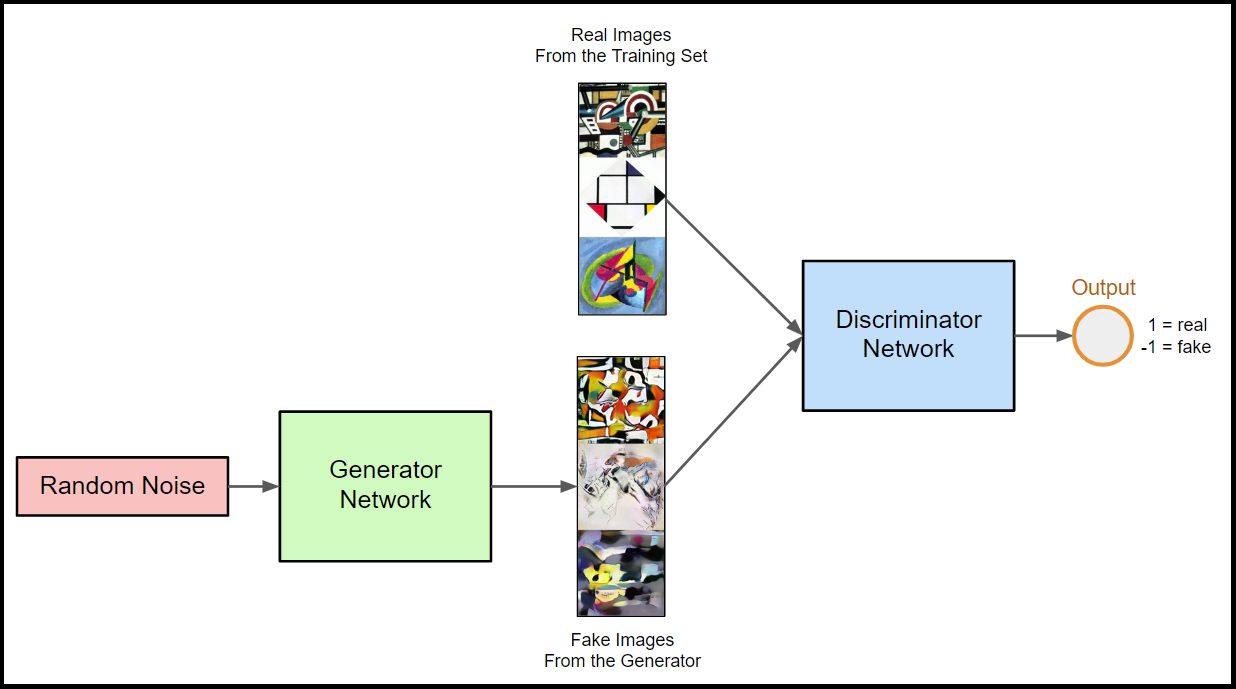

In 2014, Ian Goodfellow and seven coauthors at the Université de Montréal presented a paper on Generative Adversarial Networks (GANs)[2]. **They came up with a way to train two ANNs that effectively compete with each other to create content like photos, songs, prose, and yes, paintings.** The first ANN is called the Generator and the second is called the Discriminator. The Generator is trying to create realistic output, in this case, a color painting. The Discriminator is trying to discern real paintings from the training set as opposed to fake paintings from the generator. Here’s what a GAN architecture looks like.

Generative Adversarial Network

A vector of random noise is fed into the Generator, which then uses its trained weights to generate the resultant output, in this case, a color image. The Discriminator is trained by alternating between real paintings, with an expected output of 1, and fake paintings, with an expected output of -1. After each painting is sent to the Discriminator, it sends back detailed feedback about why the painting is not real, and the Generator adjusts its weights with this new knowledge to try to do better the next time. The two networks in the GAN are effectively trained together in an adversarial fashion. The Generator gets better at trying to pass off a fake image as real, and the Discriminator gets better at determining which input is real and which is fake. Eventually, the Generator gets pretty good at generating realistic-looking images. You can read more about GANs, and the math they use, in Shweta Goyal’s post here.
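The adversarial loop can be illustrated with a deliberately tiny sketch in which each "painting" is a single number instead of an image. Everything here is an assumption made for illustration: the real data (numbers near 2.0), the two-parameter Generator and Discriminator, the squared-error loss against the targets 1 and -1, and the learning rate. The "feedback" the Generator receives is modelled as the gradient of the Discriminator's score with respect to the fake sample.

```python
import random

random.seed(0)  # fixed seed so the run is repeatable

REAL_MEAN, REAL_STD = 2.0, 0.5   # hypothetical "real paintings": numbers near 2.0
BATCH, STEPS, LR = 32, 3000, 0.01

# Generator: x = g_scale * z + g_shift, where z is random noise.
g_scale, g_shift = 1.0, 0.0
# Discriminator: score = d_w * x + d_b (target 1 for real, -1 for fake).
d_w, d_b = 0.0, 0.0

def score(x):
    return d_w * x + d_b

for step in range(STEPS):
    real = [random.gauss(REAL_MEAN, REAL_STD) for _ in range(BATCH)]
    noise = [random.gauss(0.0, 1.0) for _ in range(BATCH)]
    fake = [g_scale * z + g_shift for z in noise]

    # Discriminator step: squared error to 1 on real samples, -1 on fakes.
    grad_w = grad_b = 0.0
    for x, target in [(x, 1.0) for x in real] + [(x, -1.0) for x in fake]:
        err = score(x) - target
        grad_w += 2 * err * x / BATCH
        grad_b += 2 * err / BATCH
    d_w -= LR * grad_w
    d_b -= LR * grad_b

    # Generator step: adjust its weights so the fakes score closer to 1.
    grad_scale = grad_shift = 0.0
    for z, x in zip(noise, fake):
        err = score(x) - 1.0
        # Chain rule through the Discriminator: d(score)/dx is d_w.
        grad_scale += 2 * err * d_w * z / BATCH
        grad_shift += 2 * err * d_w / BATCH
    g_scale -= LR * grad_scale
    g_shift -= LR * grad_shift

print(f"generator shift after training: {g_shift:.2f} (real mean is {REAL_MEAN})")
```

In this toy, the Generator's shift should drift from 0.0 toward the real mean as the two sets of weights are updated in turn. Because the assumed Discriminator is a straight line, it can only judge where the samples sit on average, so the sketch learns the location of the real data and not its spread. Real GANs use deep networks on both sides for exactly this reason.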
