History

This article introduces Support Vector Machines (SVMs): the mathematical intuition behind them, regularization, an implementation in code, and the fields where they are applied. Right then, tighten your seat belt and let's enter the wonderland of this beautiful concept.

Vladimir Vapnik, one of the pioneers of machine learning, developed the ideas behind SVMs in the early 1960s. However, he could not demonstrate the power of the algorithm computationally, because he lacked the necessary resources at the time. In the early 1990s, Bell Laboratories invited him to the US to continue his research. By 1993, research on handwritten digit recognition was moving fast, and Vapnik bet that SVMs could do much better than neural networks at recognizing digits. One of his colleagues tried applying an SVM to the problem, and it worked surprisingly well. So the work begun in the 1960s finally gained recognition in the 1990s, once it could actually be implemented and shown to deliver better results.

Introduction

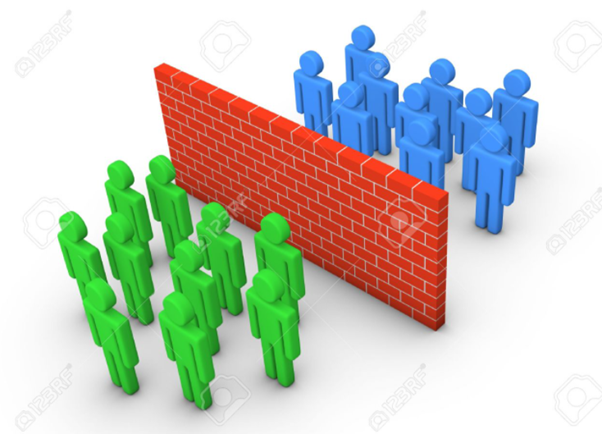

A Support Vector Machine (SVM) is a supervised machine learning algorithm used for classification and regression. In classification, an SVM represents each sample as a point in a feature space and finds a boundary (a hyperplane) that separates the points belonging to different classes. For example, given a list of fruit images, the algorithm classifies each input image as either an apple or a strawberry. Note that this example assumes the classes are easily separable. What if the images were distributed in such a way that it is difficult to split the classes by drawing a straight line? Before we analyze that case, we need to get familiar with a few basics.
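To make the idea of "separating classes with a straight line" concrete, here is a minimal sketch of a linear soft-margin SVM in pure Python. It is not the implementation covered later in this article: it assumes a simple batch subgradient descent on the regularized hinge loss, and the function names (`train_linear_svm`, `predict`, `accuracy`), the hyperparameters (`lam`, `lr`, `epochs`) and the two-feature fruit data are all hypothetical choices for illustration. Real libraries typically use dedicated solvers instead. The second dataset is an XOR-style layout, where no single straight line can classify all four points correctly.

```python
# Minimal linear soft-margin SVM (illustrative sketch, not a production solver).
# Minimizes lam/2 * ||w||^2 + mean(max(0, 1 - y * (w.x + b))) by batch
# subgradient descent. All names and data here are hypothetical.

def train_linear_svm(points, labels, lam=0.01, lr=0.01, epochs=1000):
    """Return (w, b) for the hyperplane w.x + b = 0; labels must be +1 or -1."""
    dim = len(points[0])
    n = len(points)
    w = [0.0] * dim
    b = 0.0
    for _ in range(epochs):
        # Subgradient of the L2 regularization term is lam * w.
        grad_w = [lam * wi for wi in w]
        grad_b = 0.0
        for x, y in zip(points, labels):
            margin = y * (sum(wi * xi for wi, xi in zip(w, x)) + b)
            if margin < 1:
                # Point is inside the margin or misclassified: hinge loss is active.
                for j in range(dim):
                    grad_w[j] -= y * x[j] / n
                grad_b -= y / n
        w = [wi - lr * gi for wi, gi in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


def predict(w, b, x):
    """Return +1 or -1 depending on which side of the hyperplane x falls on."""
    score = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1 if score >= 0 else -1


def accuracy(w, b, points, labels):
    """Fraction of points whose predicted label matches the true label."""
    correct = sum(predict(w, b, x) == y for x, y in zip(points, labels))
    return correct / len(points)


# Hypothetical, already-standardized two-feature descriptions of fruit images.
apples = [(2.0, 2.0), (2.5, 1.5), (3.0, 2.5), (2.0, 3.0)]                # label +1
strawberries = [(-2.0, -2.0), (-2.5, -1.5), (-3.0, -2.5), (-2.0, -3.0)]  # label -1
fruit_x = apples + strawberries
fruit_y = [1] * len(apples) + [-1] * len(strawberries)

w, b = train_linear_svm(fruit_x, fruit_y)
print("separable accuracy:", accuracy(w, b, fruit_x, fruit_y))
print("new sample (2.2, 2.4) ->", predict(w, b, (2.2, 2.4)))

# XOR-style layout: no straight line puts both +1 points on one side
# and both -1 points on the other.
xor_x = [(1.0, 1.0), (-1.0, -1.0), (1.0, -1.0), (-1.0, 1.0)]
xor_y = [1, 1, -1, -1]
w2, b2 = train_linear_svm(xor_x, xor_y)
print("XOR accuracy:", accuracy(w2, b2, xor_x, xor_y))
```

On the fruit data the learned line classifies every training point correctly, while on the XOR points it cannot get all four right. That gap is exactly the "what if a straight line is not enough" question raised above.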
