A popular algorithm that is capable of performing linear or non-linear classification and regression, Support Vector Machines were the talk of the town before the rise of deep learning due to the exciting kernel trick — If the terminology makes no sense to you right now don’t worry about it. By the end of this post you’ll have an good understanding about the intuition of SVMs, what is happening under the hood of linear SVMs, and how to implement one in Python.

To see the full Algorithms from Scratch Series click on the link below.

Algorithms From Scratch - Towards Data Science

Intuition

In classification problems the objective of the SVM is to fit the largest possible margin between the 2 classes. On the contrary, regression task flips the objective of classification task and attempts to fit as many instances as possible within the margin — We will first focus on classification.

If we focus solely on the extremes of the data (the observations that are on the edges of the cluster) and we define a threshold to be the mid-point between the two extremes, we are left with a margin that we use to sepereate the two classes — this is often referred to as a hyperplane. When we apply a threshold that gives us the largest margin (meaning that we are strict to ensure that no instances land within the margin) to make classifications this is called Hard Margin Classification (some text refer to this as Maximal Margin Classification).

When detailing hard margin classification it always helps to see what is happening visually, hence Figure 2 is an example of a hard margin classification. To do this we will use the iris dataset from scikit-learn and utility function plot_svm() which you can find when you access the full code on github — link below.

kurtispykes/ml-from-scratch

Note: This story was written straight from jupyter notebooks using python package

_jupyter_to_medium_— for more information on this package click here— and the committed version on github is a first draft hence you may notice some alterations to this post.

import pandas as pd

import numpy as np

from sklearn.svm import LinearSVC

from sklearn.preprocessing import StandardScaler

from sklearn.datasets import load_iris

import matplotlib.pyplot as plt

%matplotlib inline

# store the data

iris = load_iris()

# convert to DataFrame

df = pd.DataFrame(data=iris.data,

columns= iris.feature_names)

# store mapping of targets and target names

target_dict = dict(zip(set(iris.target), iris.target_names))

# add the target labels and the feature names

df["target"] = iris.target

df["target_names"] = df.target.map(target_dict)

# view the data

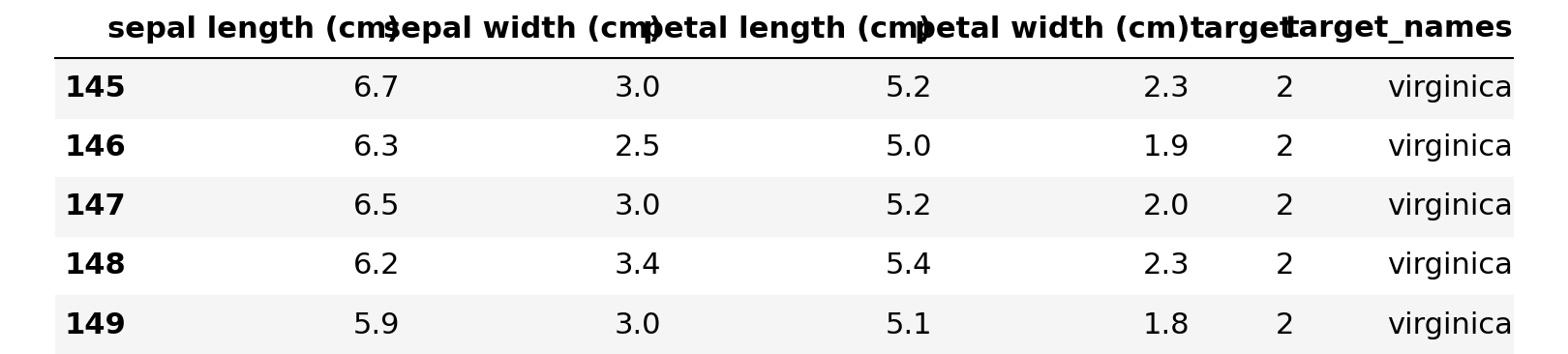

df.tail()

Figure 1: Original Dataset

# setting X and y

X = df.query("target_names == 'setosa' or target_names == 'versicolor'").loc[:, "petal length (cm)":"petal width (cm)"]

y = df.query("target_names == 'setosa' or target_names == 'versicolor'").loc[:, "target"]

# fit the model with hard margin (Large C parameter)

svc = LinearSVC(loss="hinge", C=1000)

svc.fit(X, y)

plot_svm()

#programming #machine-learning #data-science #artificial-intelligence #algorithms-from-scratch #algorithms