This tutorial is split into three parts:

- Introduction

- Cloud Infrastructure Setup

- Machine Learning

All the supporting code for this project can be found on GitHub.

Part I: Introduction

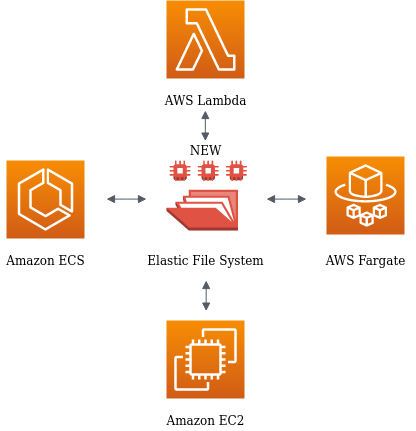

On June 16th, 2020, AWS released a long-awaited feature: support for Amazon Elastic File System (EFS) in AWS Lambda.

It is a huge leap forward for serverless computing. It enables a whole set of new use cases, notably in data processing and machine learning, that could have a massive impact on AI infrastructure.

With Lambda and EFS combined, compute and storage can work together while each still scales independently.

This opens up a whole new world of serverless solutions that were previously impossible.

In addition to responding to event-based triggers, Lambda functions can now work on a file system that is shared with other compute services such as EC2, or with container-based services such as ECS and Fargate.

Serverless Data Processing and Machine Learning

EFS support opens up many opportunities for data processing by allowing Lambda to work with files and directories directly, without the overhead of coordinating S3 access.
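
To make this concrete, here is a minimal Python sketch of a Lambda handler that processes files straight from the mounted file system. It assumes the function has an EFS access point attached with the local mount path `/mnt/efs` (Lambda requires local mount paths to start with `/mnt/`). The `EFS_MOUNT_PATH` variable, the `raw`/`processed` directories, the event keys, and the `count_csv_lines` helper are hypothetical names chosen for illustration.

```python
import os
from pathlib import Path

# Assumption: the EFS access point is attached to the function with local
# mount path /mnt/efs (Lambda requires mount paths under /mnt/).
DEFAULT_MOUNT_PATH = "/mnt/efs"


def count_csv_lines(mount_path, input_dir="raw", output_dir="processed"):
    """Count lines in each .csv file under mount_path/input_dir and write a summary."""
    root = Path(mount_path)
    summary = {}
    for path in sorted((root / input_dir).glob("*.csv")):
        # Ordinary file I/O on the mounted file system: no S3 GetObject calls
        # and no staging copies in /tmp.
        with path.open("r", encoding="utf-8") as f:
            summary[path.name] = sum(1 for _ in f)
    # Write the result back to the shared file system, where EC2 instances or
    # ECS/Fargate tasks mounting the same EFS can read it.
    out = root / output_dir
    out.mkdir(parents=True, exist_ok=True)
    lines = [f"{name},{count}" for name, count in summary.items()]
    (out / "line_counts.csv").write_text("\n".join(lines) + "\n", encoding="utf-8")
    return summary


def handler(event, context):
    # EFS_MOUNT_PATH and the event keys are hypothetical names for this sketch.
    mount_path = os.environ.get("EFS_MOUNT_PATH", DEFAULT_MOUNT_PATH)
    summary = count_csv_lines(
        mount_path,
        event.get("input_dir", "raw"),
        event.get("output_dir", "processed"),
    )
    return {"files": len(summary), "line_counts": summary}
```

Because the summary is written to EFS rather than to the function's ephemeral `/tmp` storage, it persists after the invocation ends and is visible to any other service that mounts the same file system.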

Once the data has been cleaned, processed and transformed, the next step is to train Machine Learning (ML) models that infer predictions from it. Hosting and serving ML models for inference can also become quite expensive on dedicated machines. Serving models from Lambda was already possible, but it meant either downloading the model from S3 or packaging it into a Lambda layer.
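
With EFS, a function can instead open the model file directly from the mounted file system. The Python sketch below loads a model once per execution environment and reuses it on warm invocations. The model path, the `MODEL_PATH` variable, and the "model" itself (a pickled dict describing a toy linear model) are hypothetical stand-ins for a real ML framework's model file.

```python
import os
import pickle
from functools import lru_cache

# Assumption: a model artifact was written to the shared EFS file system
# (for example by a training job) at this hypothetical path.
DEFAULT_MODEL_PATH = "/mnt/efs/models/model.pkl"


@lru_cache(maxsize=1)
def load_model(path):
    """Load the model once per execution environment; warm invocations reuse it."""
    with open(path, "rb") as f:
        # Hypothetical format: a pickled dict {"weights": [...], "bias": float}.
        return pickle.load(f)


def predict(model, features):
    # Toy linear model, purely illustrative: bias + sum(w_i * x_i).
    if len(features) != len(model["weights"]):
        raise ValueError("feature count does not match model weights")
    return model["bias"] + sum(w * x for w, x in zip(model["weights"], features))


def handler(event, context):
    model_path = os.environ.get("MODEL_PATH", DEFAULT_MODEL_PATH)
    model = load_model(model_path)
    return {"prediction": predict(model, event["features"])}
```

Caching with `lru_cache` avoids re-reading the file on every request, but it also means a warm environment keeps serving the old model if the file on EFS is replaced, until that environment is recycled. Only unpickle files you trust, since `pickle` can execute arbitrary code while loading.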
