Shouldn’t someone who worked 10+ years in the Java SE team at Sun Microsystems bleed Java bytecodes and be instantiating abstract interfaces until the last breath? For this former Java SE team member, learning the Node.js platform in 2011 was a breath of fresh air. After being laid off from Sun in January 2009 (just prior to the Oracle buyout), I learned about and became hooked on Node.js.

Just how hooked? Since 2010, I have written extensively about Node.js programming. Namely, four editions of Node.js Web Development, plus other books and numerous tutorial blog posts on Node.js programming. That’s a LOT of time explaining Node.js and advancements in the JavaScript language.

While working for Sun Microsystems I believed in All Things Java. I presented sessions at JavaONE, co-developed the java.awt.Robot class, ran the Mustang Regressions Contest (the bug finding contest for the Java 1.6 release), helped to launch the “Distributions License for Java” that was the pre-OpenJDK answer for Linux distributions to distribute JDK builds, and later played a small role in launching the OpenJDK project. Along the way I landed a blog on java.net (a now-defunct website), writing 1–2 times a week for about 6 years discussing events in the Java ecosystem. A big topic was defending Java against those predicting Java’s death.

The Duke Award was handed out to employees who went above and beyond. I earned this after running the Mustang Regressions Contest, the bug-finding contest coinciding with the Java 1.6 release.

What happened to living and breathing Java byte-codes? What I’m here for is explaining how this dyed-in-the-wool Java advocate became a dyed-in-the-wool Node.js/JavaScript advocate.

It’s not like I’ve completely divorced myself from Java. I have written a significant amount of Java/Spring/Hibernate code in the last 3 years. While I fully enjoyed the work (I worked in the solar industry doing deeply soul-fulfilling things like writing database queries about kilowatt-hours), coding in Java has lost its lustre.

Two years of Spring coding taught a very clear lesson: papering over complexity does not produce simplicity, it only produces more complexity.

TL;DR

- Java is full of boilerplate code which obscures the programmer’s intention

- Spring & Spring Boot teach a lesson: papering over complexity creates more complexity

- Java EE was a “design by committee” project that tries to cover every aspect of Enterprise application development, and hence is exceedingly complex

- The experience of Spring programming is that it’s great until it isn’t: one day an obscure, impossible-to-understand Exception appears out of the depths of a subsystem you’ve never heard of, and it takes 3+ days just to figure out the problem

- What is the overhead required in the framework to allow coders to write zero code?

- While IDEs like Eclipse are powerful, they are a symptom of Java’s complexity

- Node.js was the result of one guy honing and refining a vision of a lightweight event driven architecture, until Node.js revealed itself

- The JavaScript community seems to appreciate removing boilerplate, allowing the programmer’s intention to shine

- The solution for Callback Hell, the async/await function, is an example of removing boilerplate so the programmer’s intention shines

- Coding with Node.js is a joy

- JavaScript lacks the strict type checking of Java, which is a blessing and a curse. Code is easier to write but requires more testing to ensure correctness

- The npm/yarn package management system is excellent and joyful to use, versus the abomination that is Maven

- Both Java and Node.js offer excellent performance, running counter to the myth that JavaScript is slow and therefore Node.js performance must be bad

- Node.js performance is riding the coattails of Google investment in V8 to speed up the Chrome browser

- The intense competition between browsers is making JavaScript more and more powerful every year, benefitting Node.js

Java has become a burden, coding with Node.js is joy-filled

Some tools or objects are the result of a designer spending years honing and refining the thing. They try different ideas, they remove unnecessary attributes, and they end up with an object with just the right attributes for the purpose. Often these objects have a kind of powerful simplicity that is very appealing. Java is not that kind of system.

Spring is a popular framework for developing Java-based web applications. The core purpose for Spring, and especially Spring Boot, is an easy to use preconfigured Java EE stack. The Spring programmer doesn’t have to hook up all the servlets, data persistence, application servers, and who knows what else, to get a complete system. Instead Spring takes care of all those details, while you focus on coding. For example, the JPA Repository class synthesizes database queries for methods with names like “findUserByFirstName” — you don’t write any code, simply add methods named this way to the Repository definition and Spring will handle the rest.

It’s a great story, and a nice experience, until it isn’t.

What does it mean when you get a Hibernate PersistentObjectException about a “detached entity passed to persist”? That took several days to figure out. At the risk of oversimplification, it meant the JSON arriving at the REST endpoint had ID fields with values. Hibernate, again oversimplifying, wants control of the ID values, and throws this confusing exception. There are thousands of equally confusing and obtuse exception messages. With subsystem after subsystem in the Spring stack, it’s like a nemesis lying in wait for your tiniest mistake, ready to pounce on you with an application-crashing exception.

Then there are the huge stack traces. They go on for several screens full of abstract method this and abstract method that. Spring is obviously working out the configuration required to implement what the code says. This level of abstraction obviously requires quite a bit of logic to find everything and to execute the requests. A long stack trace isn’t necessarily bad. Instead it points to a symptom: just what is the memory/performance overhead cost?

How would “findUserByFirstName” execute when the programmer writes zero code? The framework has to parse the method name, guess the programmer’s intention, construct something like an abstract syntax tree, generate some SQL, etc. Just what is the overhead for all that? Just so the coder doesn’t have to code?
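To get a feel for the work hidden behind a method name, here is a toy sketch in JavaScript. It is emphatically not how Spring Data is implemented; the function name `deriveQuery`, the naming pattern it accepts, and the table/column naming rules are all assumptions made up for illustration. Even this trivial version has to parse the name, split it into parts, and generate SQL at runtime.

```javascript
// Toy illustration only: NOT Spring's real algorithm.
// Turns a method name such as "findUserByFirstName" into a SQL string.
function deriveQuery(methodName) {
  // Assumed pattern: find<Entity>By<Property>
  const match = /^find([A-Z][A-Za-z]*?)By([A-Z][A-Za-z]*)$/.exec(methodName);
  if (!match) {
    throw new Error(`Cannot derive a query from "${methodName}"`);
  }
  const [, entity, property] = match;

  // Assumed naming rule: FirstName -> first_name, User -> user
  const column = property.replace(/([a-z])([A-Z])/g, '$1_$2').toLowerCase();
  const table = entity.toLowerCase();

  return `SELECT * FROM ${table} WHERE ${column} = ?`;
}

console.log(deriveQuery('findUserByFirstName'));
```

A real framework also has to handle multiple conditions, joins, paging, type conversion, and caching of the generated query, which is where the long stack traces come from.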

After a few dozen times going through this, spending weeks learning arcana you should never have had to learn, you might arrive at the same conclusion I landed on: papering over complexity does not produce simplicity, it only produces more complexity.

The point goes to Node.js

“Compatibility Matters” was a very cool slogan, meaning that the Java Platform’s key value proposition was complete backwards compatibility. We took this seriously, and even plastered it on T-shirts. Of course this degree of maintaining compatibility can be an albatross, and it’s useful at times to shrug off old ways of doing things that are no longer useful.

Node.js on the other hand …

Where Spring and Java EE are exceedingly complex, Node.js is a breath of fresh air. First is the design esthetic Ryan Dahl used in developing the core Node.js platform. Dahl’s experience was that using threads made for a heavyweight complex system. He sought something different, and spent a couple of years honing and refining a set of core ideals, arriving at Node.js. The result was a light-weight system, a single execution thread, an ingenious use of JavaScript anonymous functions for asynchronous callbacks, and a runtime library that ingeniously implemented asynchronicity. The initial pitch was high throughput event processing with event delivery to callback functions.

Then there is the JavaScript language itself. JavaScript programmers seem to have an esthetic of removing boilerplate so the programmer’s intention can shine clearly.

An example to contrast between Java and JavaScript is the implementation of listener functions. In Java, listeners require creating a concrete instance of an abstract interface class. This entails a lot of verbiage obscuring what’s going on. How can the programmer’s intent be visible behind a veil of boilerplate?

In JavaScript one instead uses simple anonymous functions — closures. You don’t search for the correct abstract interface. Instead you simply write the required code, with no excess verbiage.
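As a minimal sketch of what that looks like, the following uses the `EventEmitter` class built into Node.js. The `thermostat` object and the `reading` event name are made up for this example. The listener is nothing more than a function, and it can use the surrounding `readings` variable because it is a closure.

```javascript
const EventEmitter = require('events');

// Hypothetical event source, for illustration only.
const thermostat = new EventEmitter();
const readings = [];

// The listener is just an anonymous function. No interface to look up,
// no class to declare. It closes over `readings` from the outer scope.
thermostat.on('reading', (celsius) => {
  readings.push(celsius);
});

thermostat.emit('reading', 21.5);
thermostat.emit('reading', 22);

console.log(readings);
```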

Another learning: Most programming languages obscure the programmer’s intent, making it harder to understand the code.

This point goes to Node.js — but with a caveat we must address: Callback Hell.

Solutions sometimes come with their own problems

In JavaScript we’ve long had two issues with asynchronous coding. One is what’s become called “Callback Hell” in Node.js. It is easy to fall into a trap of deeply nested callback functions, where each level of nesting complexifies the code, making error and results handling all the more difficult. A related issue is that the JavaScript language did not help the programmer properly express asynchronous execution.

Several libraries sprang up promising to simplify asynchronous execution. This is another example of papering over complexity creating more complexity.

To give an example:

    const async = require('async');
    const fs = require('fs');

    // Print each named file to stdout, one after another.
    const cat = function(filez, fini) {
        // eachSeries runs the iterator for one file at a time, in order.
        async.eachSeries(filez, function(filenm, next) {
            fs.readFile(filenm, 'utf8', function(err, data) {
                if (err) return next(err);
                process.stdout.write(data, 'utf8', function(err) {
                    if (err) next(err);
                    else next();
                });
            });
        },
        // Called once, after the last file or on the first error.
        function(err) {
            if (err) fini(err);
            else fini();
        });
    };

    cat(process.argv.slice(2), function(err) {
        if (err) console.error(err.stack);
    });

This sample application is a pale cheap imitation of the Unix cat command. The async library is excellent at simplifying the sequencing of asynchronous execution. But its use required a pile of boilerplate code obscuring the programmer’s intention.

What we have here is a loop. It is not written as a loop, and it does not use natural looping constructs. Further, errors and results do not land in the natural place, but are inconveniently trapped inside callback functions. Before Promises (ES2015) and async functions (ES2017) landed in Node.js, this was the best we could do.

The equivalent in Node.js 10.x is:

    const fs = require('fs').promises;

    async function cat(filenmz) {
        for (const filenm of filenmz) {
            const data = await fs.readFile(filenm, 'utf8');
            // process.stdout.write takes a callback, so wrap it in a Promise.
            await new Promise((resolve, reject) => {
                process.stdout.write(data, 'utf8', (err) => {
                    if (err) reject(err);
                    else resolve();
                });
            });
        }
    }

    cat(process.argv.slice(2)).catch(err => {
        console.error(err.stack);
    });

This rewrite of the previous example uses async/await functions. It is the same asynchronous structure, but is written with normal looping structures. Errors and results are reported in a natural way. It’s easier to read, to code, and to understand the programmer’s intent.

The only wart is that process.stdout.write does not provide a Promise interface and therefore cannot be cleanly used in an async function without wrapping with a Promise.

The callback hell problem was not solved by papering over complexity. Instead a language and paradigm change solved both the problem and the excess verbiage forced on us by the interim solutions. With async functions our code became more beautiful.

While this started out as a point against Node.js, the excellent solution converts this into a point for Node.js and JavaScript.

Supposed clarity through well defined types and interfaces

An issue I harped on as a dyed-in-the-wool Java advocate was that strict type checking enables writing huge applications. At that time the norm was developing monolithic systems (no microservices, no Docker, etc). Because Java has strict type checking, the Java compiler helps you avoid many kinds of bugs by preventing you from compiling bad code.

JavaScript, by contrast, has loosey-goosey typing. The theory is obvious: The programmer has no certainty what kind of object they’ve received, so how can the programmer know what to do?

The flip side of strict typing in Java is more boilerplate. The programmer is constantly typecasting or otherwise working hard ensuring everything is precisely correct. The coder spends time coding, with extreme precision, using more boilerplate, hoping to save time by catching and fixing errors early.

The problem is so big that one more-or-less must use a big complex IDE. A simple programmer’s editor is not enough. The only way to keep the Java programmer sane (besides pizza) is with dropdowns showing the available fields on an object, describing method parameters, helping to construct classes, assisting with refactoring, and all the rest of the facilities offered by Eclipse, NetBeans, and IntelliJ.

And… don’t get me started on Maven. What a horrible tool.

In JavaScript, variable types are not declared, type-casting is generally not used, etc. Hence the code is cleaner to read, but there is a risk of uncaught coding errors.
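A small sketch of that risk, using a made-up `addTax` function: nothing stops a caller from passing a string where a number was intended, and the result is silently wrong rather than an error.

```javascript
// Hypothetical helper with no type declarations anywhere.
function addTax(price, tax) {
  return price + tax;
}

console.log(addTax(100, 8));    // the number 108
console.log(addTax('100', 8));  // the string "1008": + concatenated instead of adding
```

A Java compiler would have rejected the second call. In JavaScript it is up to tests, or to explicit checks inside the function, to catch it.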

Whether this point goes to Java or against Java depends on your point of view. My opinion ten years ago was this overhead is worth the cost by gaining more certainty. My opinion today is gosh that’s a lot of work, and it’s much easier to do things the JavaScript way.

Squashing bugs with small easily tested modules

Node.js encourages programmers to divide their programs into small units, the module. This may seem a small thing, but it partly addresses the problem just named.

A module is:

- Self contained — as implied, it packages related code into a unit

- Strong boundary — code inside the module is safe from intrusion from code elsewhere

- Explicit exports — by default code and data in the module is not exported, with selected functions and data available to other code

- Explicit imports — modules declare which modules they depend on

- Potentially independent — it is easy to publicly publish modules to the npm repository, or to privately publish modules elsewhere, for easy sharing between applications

- Easier to reason about — less code to read makes it easier to understand the purpose

- Easier to test — if implemented correctly, the small module can be easily unit tested

All of this together contributes to Node.js modules being easier to test and to have a well defined scope.

The fear with JavaScript is that lacking strict type checking the code could easily do something wrong. In a small focused module with clear boundaries, the scope of affected code is mostly limited to that module. This keeps most concerns small, safely ensconced within the boundary of that module.

The other solution to the loosey-goosey problem is increased testing.

You must spend some of the productivity gains (it is easier to write JavaScript code) on increased testing. Your testing regime must catch the errors that might have been caught by the compiler. You do test your code, don’t you?

For those wanting statically checked types in JavaScript, look at TypeScript. I’ve not used that language, but have heard great things said about it. It is directly compatible with JavaScript and adds useful type checking and other features.

Here the point goes to Node.js and JavaScript.

Package management

I get apoplectic just thinking about Maven, and simply can’t think straight enough to write anything about it. Supposedly one either loves Maven or despises it, and there is no middle ground.

An issue is that the Java ecosystem does not have a cohesive package management system. Maven packages exist and work fairly well, and supposedly work in Gradle as well. But it isn’t anywhere near as useful/usable/powerful as the package management system for Node.js.

In the Node.js world there are two excellent package management systems that work closely together. At first npm, and the npm repository, was the only such tool.

With npm we have an excellent schema for describing package dependencies. A dependency can be strict (exactly version 1.2.3) or specified with several gradations of looseness all the way to “*” which means the latest version. The Node.js community has published hundreds of thousands of packages into the npm repository. Using packages from outside the npm repository is just as easy as using packages from the npm repository.

It is so good that npm’s repository serves not just Node.js but front end engineers. Previously tools like Bower for package management were used. Bower has been deprecated and now one finds all the front-end JavaScript libraries available as npm packages. Many front end engineer toolchains, like the Vue.js CLI and Webpack, are written in Node.js.

The other package management system for Node.js, yarn, draws its packages from the npm repository, and uses the same configuration files as npm. The main advantage is that the yarn tool runs faster.

The npm repository, whether accessed with npm or yarn, is a strong part of what makes Node.js so easy and joyful to use.

Sometime after helping create java.awt.Robot, I was inspired to create this. Where the official Duke mascot was entirely curvy lines, RoboDuke is all about straight lines, except for the gearing in the elbows.

Performance

At times both Java and JavaScript were accused of being slow.

In both cases compilers convert source code to byte-code executed by a virtual machine implementation. The VM will often further compile byte-codes to native code, and use a variety of optimization techniques.

Both Java and JavaScript have huge incentives to run fast. In Java and Node.js the incentive is fast server-side code. In browser-JavaScript the incentive is better client side application performance — see the next section on Rich Internet Applications.

The Sun/Oracle JDK uses HotSpot, a super-duper virtual machine with multiple byte code compilation strategies. Its name comes from detecting frequently executed code and applying more and more optimization the more a code section executes. HotSpot is highly optimized and produces very fast code.

On the JavaScript side, we used to wonder: How could we expect JavaScript code running in browsers to implement any kind of complex application? Surely an office document processing suite would be impossible in browser-based JavaScript? Today, well, the proof of the pudding is in the eating. I’m writing this article using Google Docs, and the performance is pretty good. Browser-JavaScript performance leaps forward every year.

Node.js directly benefits from this trend since it uses Chrome’s V8 engine.

An example is a talk by Peter Marshall, a Google engineer working on V8 whose primary job is performance enhancements for V8. Marshall is specifically assigned to work on Node.js performance. He describes why V8 switched from the Crankshaft optimizing compiler to the TurboFan optimizing compiler.

Machine Learning is an area involving lots of mathematics for which data scientists typically use R or Python. Several fields including Machine Learning depend on fast numerical computing. For various reasons JavaScript is poor at this, but work is underway to develop a standardized library for numerical computing in JavaScript.

Another video demonstrates using TensorFlow in JavaScript, using a new library: TensorFlow.js. It has an API similar to TensorFlow Python, and can import pretrained models. It can be used for things like analyzing live video to recognize trained objects, whilst running completely in a browser.

In another talk, Chris Bailey of IBM goes over Node.js performance and scalability issues, especially in regard to Docker/Kubernetes deployment. He starts with a set of benchmarks showing that Node.js has dramatically better performance than Spring Boot on I/O throughput, application startup time and memory footprint. Further, release-by-release performance is improving dramatically in Node.js, thanks in part to V8 improvements.

In that video Bailey says one should not run computational code in Node.js. The “why” of that is important to understand. Because of the single thread model long-running computations will block event execution. In my book Node.js Web Development, I talk about this issue and demonstrate three approaches:

- Algorithmic refactoring — detect slow parts of an algorithm, refactoring for more speed

- Splitting chunks of the computation through the event dispatcher, so that Node.js regularly returns to the execution thread

- Shifting computations to a back-end server
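The second approach can be sketched as follows. This is a minimal illustration rather than the code from the book: the function name `sumInChunks` and the chunk size are assumptions. The computation is cut into slices, and `setImmediate` hands control back to the event loop between slices so other events (here a timer) still get serviced.

```javascript
// Sum the integers 1..n in slices, yielding to the event loop between slices.
function sumInChunks(n, chunkSize = 100000) {
  return new Promise((resolve) => {
    let total = 0;
    let next = 1;

    function runChunk() {
      const end = Math.min(next + chunkSize - 1, n);
      for (; next <= end; next++) total += next;

      if (next > n) resolve(total);
      else setImmediate(runChunk);   // let pending events run first
    }

    runChunk();
  });
}

// A timer that can only fire if the computation is not hogging the thread.
let ticks = 0;
const timer = setInterval(() => { ticks++; }, 1);

sumInChunks(5e6).then((total) => {
  clearInterval(timer);
  console.log(`total=${total}, timer fired ${ticks} times meanwhile`);
});
```

Written as a single `for` loop, the same computation would hold the thread until it finished, and no timer, request handler, or I/O callback could run in the meantime.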

If JavaScript improvements aren’t enough for your application, there are two ways to directly integrate native code into Node.js. The most straightforward method is a native-code Node.js module. The Node.js toolchain includes node-gyp, which handles building and linking native-code modules. One video demonstrates integrating a Rust library with Node.js.

The second is WebAssembly, which offers the ability to compile other languages down to a portable binary format for executable code that runs very fast inside a JavaScript engine. One video gives a good overview, and demonstrates using WebAssembly to run code in Node.js.
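As a minimal sketch of running WebAssembly in Node.js, the following loads a tiny module directly from bytes using the standard `WebAssembly` global. The byte array is a hand-assembled module, written for this example, that exports a single function `add` taking two 32-bit integers. In practice the bytes would come from a `.wasm` file produced by a compiler for a language such as C or Rust.

```javascript
// A minimal hand-assembled WebAssembly module exporting add(a, b).
const wasmBytes = new Uint8Array([
  0x00, 0x61, 0x73, 0x6d, 0x01, 0x00, 0x00, 0x00,       // magic "\0asm", version 1
  0x01, 0x07, 0x01, 0x60, 0x02, 0x7f, 0x7f, 0x01, 0x7f, // type: (i32, i32) -> i32
  0x03, 0x02, 0x01, 0x00,                               // one function, of type 0
  0x07, 0x07, 0x01, 0x03, 0x61, 0x64, 0x64, 0x00, 0x00, // export "add" = function 0
  0x0a, 0x09, 0x01, 0x07, 0x00,                         // code section, one body, no locals
  0x20, 0x00, 0x20, 0x01, 0x6a, 0x0b,                   // local.get 0, local.get 1, i32.add, end
]);

// Compile and instantiate synchronously (fine for a module this small).
const wasmModule = new WebAssembly.Module(wasmBytes);
const instance = new WebAssembly.Instance(wasmModule);

// The exported function is called like any JavaScript function.
console.log(instance.exports.add(2, 3));
```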

Rich Internet Applications (RIA)

Ten years ago the software industry was buzzing about Rich Internet Applications implemented with fast (for the time) JavaScript engines that would make desktop applications irrelevant.

The story actually starts over twenty years ago. Sun and Netscape struck a deal for Java Applets in Netscape Navigator. The JavaScript language was, in part, developed as the scripting language for Java Applets. The hope was there would be Java Servlets on the server-side, and Java Applets on the client side, giving us all this nirvana condition of having the same programming language on both. That didn’t happen for various reasons.

Ten years ago JavaScript was starting to be powerful enough for implementing complex applications on its own. Hence the RIA buzzword, and RIAs were supposed to kill Java as a client-side app platform.

Today we’re starting to see the RIA idea come to fruition. With Node.js on the server we can now have that nirvana, but with JavaScript at both ends of the wire.

Some examples:

- Google Docs (where this article is being written) is like a typical Office Suite, but running in the browser

- Powerful frameworks like React, Angular, Vue.js, simplify browser-based application development using HTML/CSS for styling

- Electron is an amalgam of Node.js and the Chromium web browser that supports cross-platform desktop application development. It’s good enough that several extremely popular applications like Visual Studio Code, Atom, GitKraken, and Postman are all written with Electron, and all of them perform extremely well.

- Because Electron/NW.js use a browser engine, frameworks like React/Angular/Vue can be used for desktop applications. For an example, see: https://blog.sourcerer.io/creating-a-markdown-editor-previewer-in-electron-and-vue-js-32a084e7b8fe

Java as a desktop application platform did not die because of JavaScript RIAs. It died mostly because of neglect at Sun Microsystems for client technologies. Sun was focused on Enterprise customers demanding fast server-side performance. I was there and saw it first-hand. What really killed off Applets was a bad security bug discovered a few years ago in the Java Plugin and Java Web Start. That bug caused a worldwide alert to simply cease using Java applets and Web Start applications.

Other kinds of Java desktop applications can still be developed, and the competition between the NetBeans and Eclipse IDEs is still alive and kicking as a result. But work in this area of Java is stagnant, and outside of developer tools there are very few Java-based applications.

The exception is JavaFX.

JavaFX was, 10 years ago, going to be Sun’s answer to the iPhone. It was going to support developing GUI-rich applications on the Java platform available in cell phones, and would single-handedly put Flash and iOS application development out of business. That didn’t happen. JavaFX is still used, but did not live up to its hype.

All of the excitement in this field is happening with React, Vue.js, and similar frameworks.

In this case JavaScript and Node.js won the point in a big way.

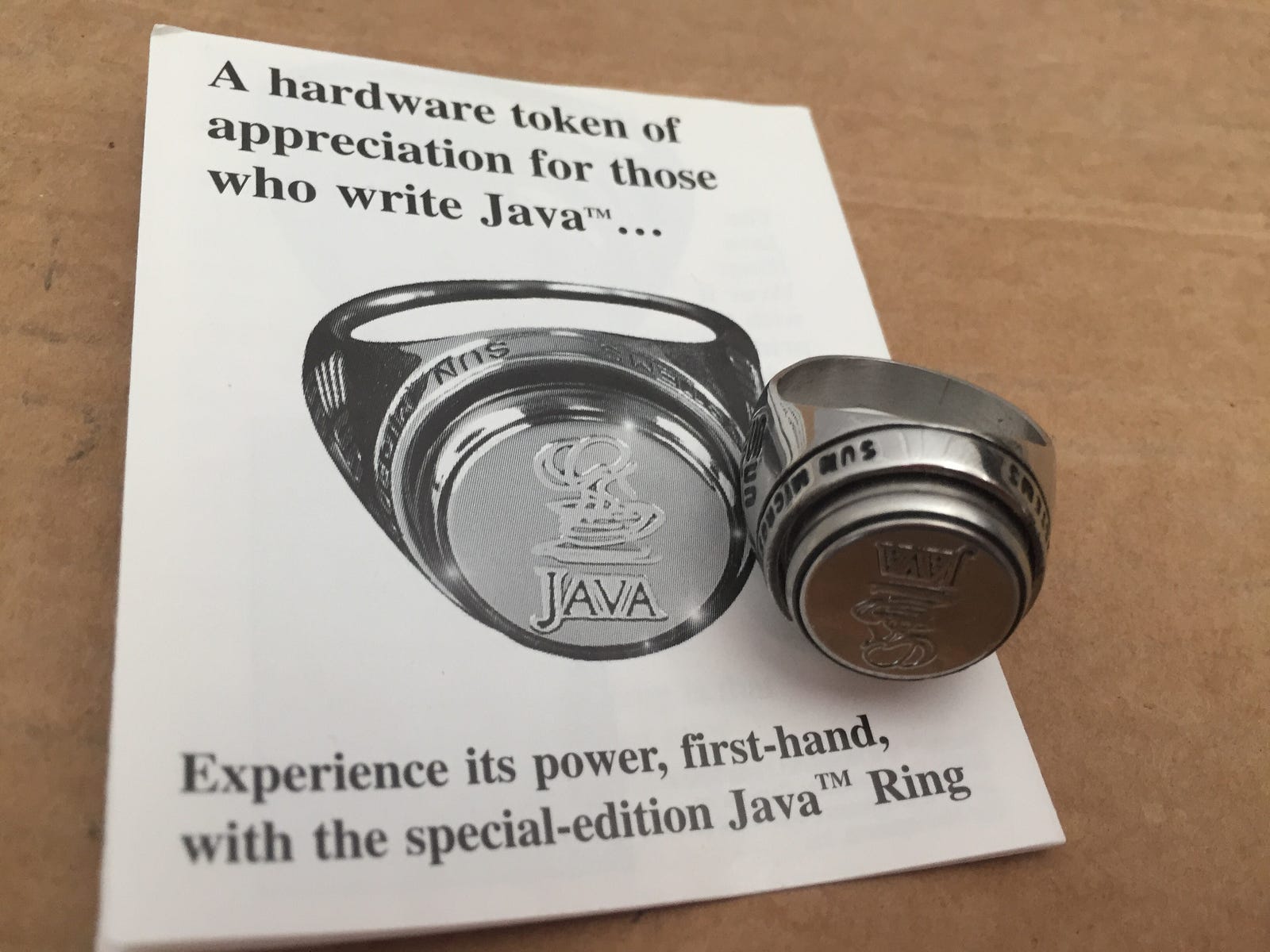

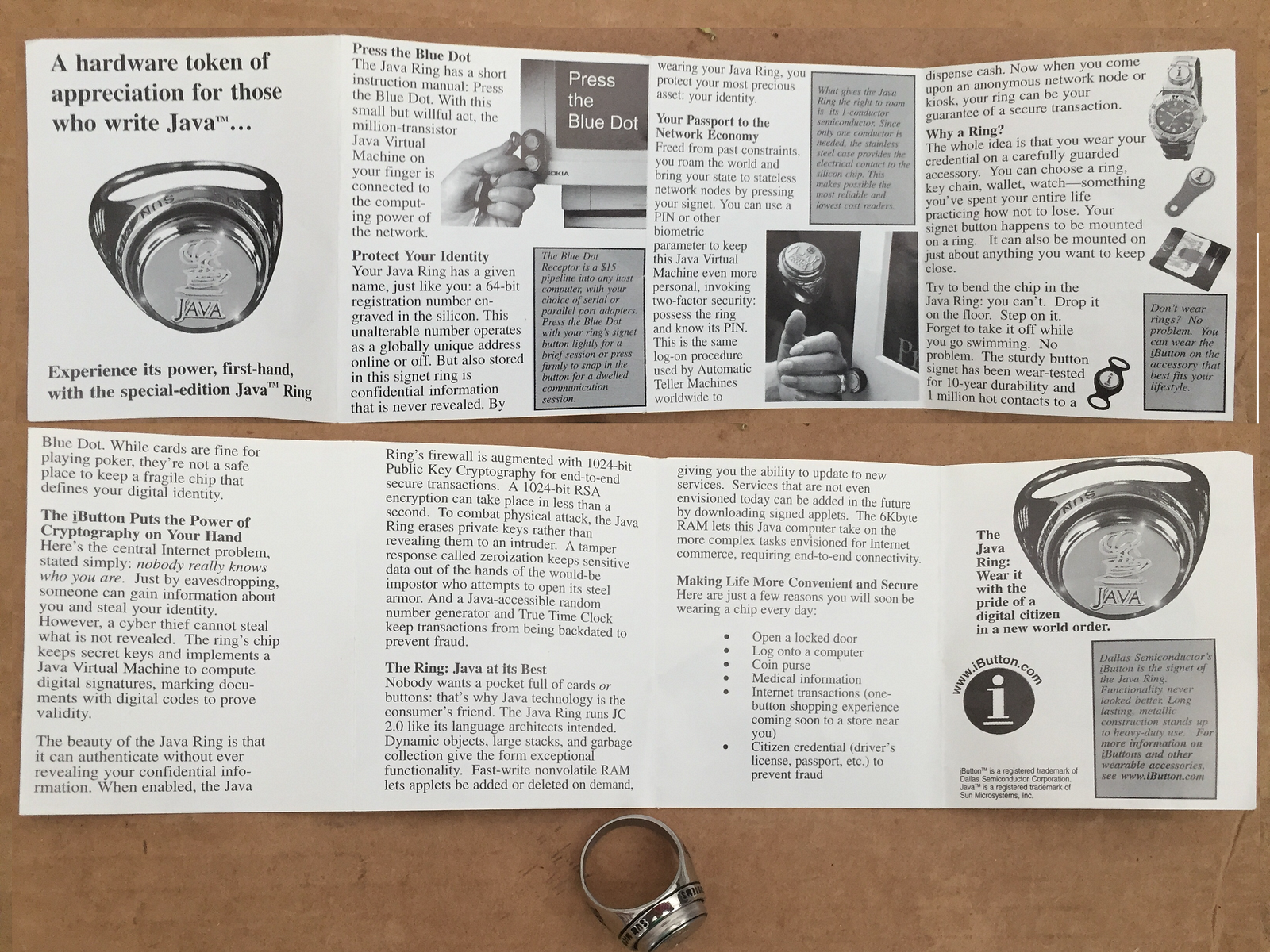

The Java Ring, handed out at an early JavaONE conference. These rings contain a chip with a complete Java implementation on-board. The primary use at JavaONE was unlocking the computers we installed in the lobby.

Java Ring instructions.

Conclusion

Today there are many choices for developing server-side code. We’re no longer limited to the “P languages” (Perl, PHP, Python) and Java, because there are Node.js, Ruby, Haskell, Go, Rust, and many more. We have an embarrassment of riches to enjoy.

As for why this Java guy turned to Node.js, it’s clear that I prefer the feeling of freedom while coding with Node.js. Java became a burden, and with Node.js there is no such burden. If I were employed to write Java again I would do it, of course, because that’s what I’d be paid to do.

Each application has its authentic needs. It’s of course incorrect to always use Node.js because one prefers Node.js. There must be a technical reason to choose one language or framework over another. For example some work I’ve done recently involves XBRL documents. Since the best XBRL libraries are implemented in Python, it’s necessary to learn Python to continue with that project. Make an honest evaluation of your real needs and choose accordingly.
