Have you ever wondered about fine-tuning your hyperparameters? How to choose your hyperparameters to achieve an excellent predicting model with approximately 90% accuracy?

There are plenty of articles that will talk about hyperparameter and other key concepts of deep learning. But I could not find something which connects all these concepts and talk about how to use them. The idea for this blog came up when I was struggling to improve my algorithm to predict better and could not find one place where I can get all my answers. So here I will talk about all things I learned while solving deep learning problems.

My learnings

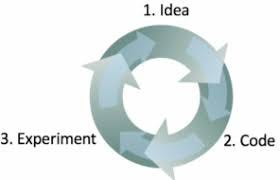

Have you ever struggled with deciding the right values of hyperparameters? Everyone does. Even people with tremendous experience in this field find it difficult to correctly guess the right choice of hyperparameters the very first time. Deep learning is an iterative process where you just have to go around this cycle many times to find the right choice of hyperparameters.

What to do now? We can speed up the iterative cycle to get quick feedback about our chosen hyperparameters and use those feedback to improve hyperparameters value. How to speed up the training process to get fast feedback? How to use the feedback received to enhance hyperparameters?

Speed up the training process

There are multiple ways to do this.

- Normalizing inputs. When we normalize inputs, we can use a high learning rate while making gradient descent to reach a global minimum in less time. Unnormalized data oscillate back and forth so much that we have to use a small learning rate.

- Another way to speed up the training process is how you split up your data into the training set, validation set and test set. People make a mistake to take a ratio of 60:20:20. It’s a good ratio if you have a small number of data, i.e. 10k But if you have 1M data then rate of 98:1:1 would be enough to validate your model. You can also try Stratified sampling of the dataset to reduce time.

- Beginners make a mistake to validate their model at every 5th/10th iteration out of 1000 iterations. Proving so often will slow down iterations. Verify at larger intervals to quickly complete the cycle. Verification at 100th/200th step would do the work.

- Deep learning algorithms work well with a big dataset. But to process the whole dataset in each iteration to take a step in gradient descent to reach global minimum would make hyperparameters tuning slow. You can use mini-batch gradient descent to make it faster. If you have a dataset of 10M. You can divide it into a batch of 2000 datasets. Now you have 5000 batches. Now instead of waiting for 10M data to process for taking one step, you can take a step towards global minimum in each batch. Now you have taken 5000 steps compared to 1 step after iterating over 10M dataset.

- Another way to speed up your learning is Gradient descent with momentum and RMSProp. It will slow down your oscillation vertically and increase it horizontally which help you to reach your goal faster. Combination of Gradient descent and RMSProp is called Adam optimization algorithm.

- We can use learning rate decay to slow down learning when we reach near our goal to have very small oscillation and reach to point quickly.

So like I said earlier you could not know in the beginning what hyperparameter to choose, but you can control how quickly you take to adjust your hyperparameters.

#hyperparameter-tuning #machine-learning #deep-learning #deep learning