Suppose you have applied advanced machine learning techniques to your Google Analytics hit-level data to enrich it, and now you want to send that enriched data back to Google Analytics so that you can segment and target those users for reactivation and other marketing objectives.

In this walkthrough, I will demonstrate how to set up a data pipeline that automatically imports your data into Google Analytics using the Google Cloud Platform (GCP). We will use Google Tentacles to set up the pipeline.

Google Tentacles is a free-to-use solution that sends data back to Google Analytics in an automated fashion. All you have to do is upload your data to Cloud Storage, and the solution will send it to Google Analytics in the format that Google Analytics requires.

Note: This solution makes extensive use of Google Cloud components, so if you want to learn more about the GCP side of things, I recommend Google’s GCP courses as a good starting point.

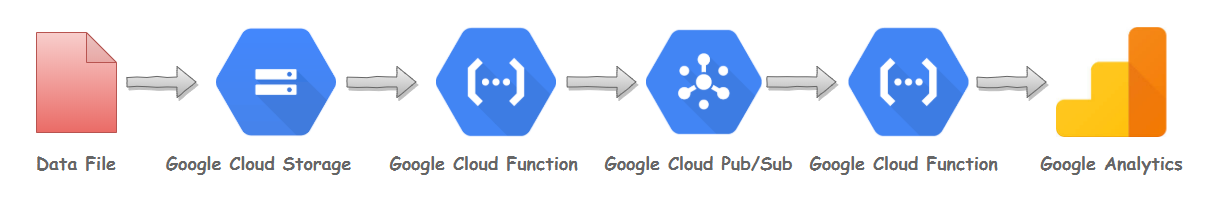

Data Pipeline

Data Import Pipeline, by Muffaddal

Tentacles uses four GCP components: Cloud Storage, Firestore/Datastore, Pub/Sub, and Cloud Functions.

**Cloud Storage** is used to store the data that needs to be imported. It is where you will upload the offline data that you want to send to GA. The data needs to be in a specific format for GA to read it. More on the data format in later sections.

**Firestore or Datastore** is used for the configuration. It tells Tentacles which GA account to send data to and how. Depending on your GCP project configuration, you will use either Firestore or Datastore. By default Tentacles uses Firestore, but if your project is set up to use Datastore, Tentacles will use that instead.

**Pub/Sub** is used to hold data, send notifications, and move data along. Three Pub/Sub topics are used. The first is ps-data. It temporarily holds the data sent from the cloud function. The second is ps-tran. It notifies a cloud function to read data from ps-data. The third is ps-api. It receives the data moved out of ps-data and holds it until it is sent to GA.

**Cloud Functions** is where the action happens. Three cloud functions are used. The first is initiator. It is triggered whenever a file is uploaded to the Cloud Storage bucket, and it sends the uploaded data to the ps-data Pub/Sub. The second is transporter. It is triggered by ps-tran and transfers data from ps-data to the ps-api Pub/Sub. The last cloud function is apirequester. It grabs data from ps-api and sends it to GA through the Measurement Protocol.
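To make that last step more concrete, here is a minimal sketch of what a single Measurement Protocol hit can look like. It assumes the Universal Analytics Measurement Protocol (v1) and uses only the Python standard library. The function name `build_event_hit` and the sample values are my own placeholders, not part of Tentacles; the fields Tentacles actually sends depend on its configuration.

```python
from urllib.parse import urlencode

# Universal Analytics Measurement Protocol (v1) collection endpoint.
MP_ENDPOINT = "https://www.google-analytics.com/collect"


def build_event_hit(tracking_id, client_id, category, action, custom_dimensions=None):
    """Build the URL-encoded payload for one event hit (hypothetical helper)."""
    params = {
        "v": "1",            # protocol version
        "tid": tracking_id,  # GA property ID, e.g. UA-XXXXX-Y
        "cid": client_id,    # client ID of the user the hit belongs to
        "t": "event",        # hit type
        "ec": category,      # event category
        "ea": action,        # event action
    }
    # Custom dimensions are sent as cd<index>, e.g. cd1, cd2, ...
    for index, value in (custom_dimensions or {}).items():
        params[f"cd{index}"] = value
    return urlencode(params)


# Placeholder values for illustration only.
payload = build_event_hit("UA-XXXXX-Y", "555", "ml", "enrich", {1: "high_value"})
```

The resulting `payload` string is what would be posted to `MP_ENDPOINT`. In the pipeline you never build this by hand, since apirequester does it for you, but seeing the shape of a hit helps when preparing the data file.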

Here is how data moves between components.
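The flow described above can also be sketched as a small in-memory simulation. This is only an illustration of the order in which the components hand data to each other, not Tentacles code: the queues stand in for the real Pub/Sub topics, and I am assuming that initiator is the one that publishes the ps-tran notification, one per data message.

```python
from collections import deque

# In-memory stand-ins for the three Pub/Sub topics (not the real Pub/Sub API).
topics = {"ps-data": deque(), "ps-tran": deque(), "ps-api": deque()}
sent_hits = []  # records what apirequester would have sent to GA


def initiator(file_lines):
    """Runs when a file lands in the bucket: pushes each line to ps-data."""
    for line in file_lines:
        topics["ps-data"].append(line)
        # Assumption: one notification per data message wakes up transporter.
        topics["ps-tran"].append("nudge")


def transporter():
    """Triggered by a ps-tran message: moves one message from ps-data to ps-api."""
    topics["ps-tran"].popleft()
    topics["ps-api"].append(topics["ps-data"].popleft())


def apirequester():
    """Takes one message from ps-api and 'sends' it (here it is only recorded)."""
    sent_hits.append(topics["ps-api"].popleft())


def run_pipeline(file_lines):
    """Drive the three functions in the order the real triggers would fire."""
    initiator(file_lines)
    while topics["ps-tran"]:
        transporter()
    while topics["ps-api"]:
        apirequester()
    return list(sent_hits)


result = run_pipeline(["hit-1", "hit-2", "hit-3"])
```

After the run, `result` contains the three lines in their original order and all three queues are empty, which mirrors how every uploaded row ends up at apirequester.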
