Testing and comparing Android’s ML acceleration with a MobileDet model

As the demand for private, low-latency machine learning grows, so does the need for accessible on-device solutions that perform well on the so-called “edge.” Two of these solutions are the Pixel Neural Core (PNC) hardware and its Edge TPU-based architecture, currently available on the Google Pixel 4 mobile phone, and the Android Neural Networks API (NNAPI), an API designed for executing machine learning operations on Android devices.

In this article, I will show how I modified the TensorFlow Lite Object Detection demo for Android to run an Edge TPU-optimized model under the NNAPI on a Pixel 4 XL. I will also present the changes I made to log the prediction latencies, and compare the latencies obtained with the default TensorFlow Lite API against those obtained with the NNAPI. But before that, let me give a brief overview of the terms I’ve introduced so far.

The app detecting one of my favorite books. Pic by me.

Pixel Neural Core, Edge TPU and NNAPI

The Pixel Neural Core, the successor of the Pixel Visual Core, is a domain-specific chip that’s part of the Pixel 4 hardware. Its architecture follows that of the Edge TPU (tensor processing unit), Google’s machine learning accelerator for edge computing devices. Being designed for “the edge” means that the chip is smaller and more energy-efficient than the larger TPUs you will find in Google’s cloud platform (the Edge TPU can perform 4 trillion operations per second while consuming just 2 W).
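Put differently, and using only the peak figures quoted above (not a measurement taken on the Pixel 4), the efficiency works out to:

$$
\frac{4 \times 10^{12}\ \text{ops/s}}{2\ \text{W}} = 2 \times 10^{12}\ \text{ops/s per watt} = 2\ \text{TOPS/W},
\qquad
\frac{2\ \text{J/s}}{4 \times 10^{12}\ \text{ops/s}} = 0.5\ \text{pJ per operation}.
$$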

The Edge TPU, however, is not a general-purpose accelerator for all kinds of machine learning. The hardware is designed to accelerate forward-pass operations, meaning that it excels as an inference engine rather than as a tool for training. That’s why you will mostly find applications where the model running on the device was trained somewhere else.
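To make “forward pass” a bit more concrete: at inference time, each layer is simply evaluated on its input, and for most layers that means long runs of multiply-accumulate (MAC) operations. The Java sketch below illustrates this with a hypothetical `DenseForward` class that computes one fully connected layer, `ReLU(W·x + b)`, and counts its MACs. It is a generic illustration, not Edge TPU or TensorFlow Lite code. Training would additionally require a backward pass to compute gradients, which is the part the Edge TPU is not built for.

```java
import java.util.Arrays;

// Hypothetical illustration: the forward pass of a single fully connected layer.
// This is generic Java, not code that runs on the Edge TPU.
class DenseForward {

    // Computes ReLU(W·x + b) for a weight matrix W of shape [outputs][inputs].
    static float[] forward(float[][] w, float[] b, float[] x) {
        float[] y = new float[w.length];
        for (int i = 0; i < w.length; i++) {
            float acc = b[i];
            for (int j = 0; j < x.length; j++) {
                acc += w[i][j] * x[j]; // one multiply-accumulate (MAC)
            }
            y[i] = Math.max(0f, acc); // ReLU activation
        }
        return y;
    }

    // Number of MACs one forward pass of this layer needs.
    static long macCount(int inputs, int outputs) {
        return (long) inputs * outputs;
    }

    public static void main(String[] args) {
        float[][] w = {{1f, 2f}, {-1f, 0.5f}};
        float[] b = {0.5f, -1f};
        float[] x = {1f, 1f};
        System.out.println(Arrays.toString(forward(w, b, x))); // prints [3.5, 0.0]
        System.out.println(macCount(2, 2)); // prints 4
    }
}
```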

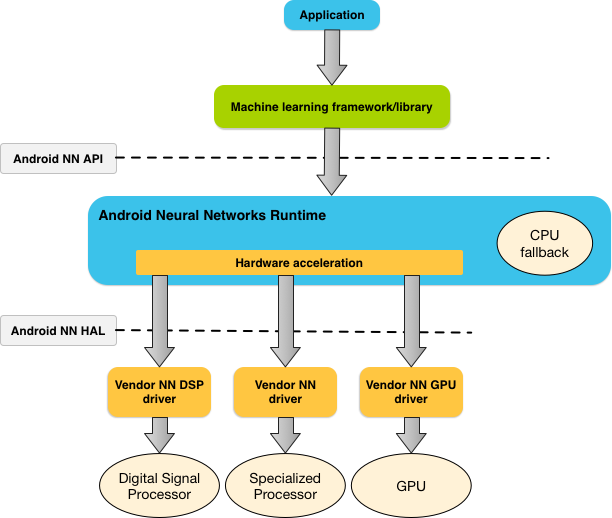

On the software side of things, we have the NNAPI. This Android API, written in C, provides acceleration for TensorFlow Lite models on devices that employ hardware accelerators such as the Pixel Visual Core and GPUs. The TensorFlow Lite framework for Android includes an NNAPI delegate that hands supported operations over to the NNAPI, so don’t worry, we won’t write any C code.

Figure 1. System architecture for Android Neural Networks API. Source: https://developer.android.com/ndk/guides/neuralnetworks

The model

The model we will use for this project is the float32 version of the MobileDet object detection model, optimized for the Edge TPU and trained on the COCO dataset (link). Let me quickly explain what these terms mean. MobileDet (Xiong et al.) is a very recent family of state-of-the-art lightweight object detection models for devices with low computational power, like mobile phones. The float32 variant means that it is not a quantized model, that is, a model that has been transformed to reduce its size at the cost of some accuracy. A fully quantized model, on the other hand, uses small weights based on 8-bit integers (source).

Then, we have the COCO dataset, short for “Common Objects in Context” (Lin et al.). This collection has over 200k labeled images separated across 90 classes that include “bird,” “cat,” “person,” and “car.”
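To illustrate the difference between float32 and 8-bit weights, here is a minimal Java sketch of affine (asymmetric) int8 quantization using the mapping `real_value = (int8_value - zero_point) * scale` from TensorFlow Lite’s quantization specification. The `QuantizationSketch` class and its methods are hypothetical and deliberately simplified: a single per-tensor scale derived from the min/max of the values. The actual TensorFlow Lite converter treats weights and activations differently (for example, symmetric per-channel scales for convolution weights), so this only shows why an int8 model is about 4× smaller and slightly less precise.

```java
import java.util.Arrays;

// Hypothetical, simplified per-tensor int8 quantization:
// real = scale * (q - zeroPoint). Not the TensorFlow Lite converter itself.
class QuantizationSketch {

    static final int QMIN = -128;
    static final int QMAX = 127;

    // Scale and zero point that map float values back from int8.
    static final class Params {
        final float scale;
        final int zeroPoint;

        Params(float scale, int zeroPoint) {
            this.scale = scale;
            this.zeroPoint = zeroPoint;
        }
    }

    // Maps the observed range [min, max] onto [-128, 127]. The range is
    // widened to include 0 so that 0.0f stays exactly representable.
    static Params params(float[] x) {
        float min = 0f, max = 0f;
        for (float v : x) {
            min = Math.min(min, v);
            max = Math.max(max, v);
        }
        float scale = (max - min) / (QMAX - QMIN);
        if (scale == 0f) {
            scale = 1f; // all-zero tensor: any positive scale works
        }
        int zeroPoint = QMIN - Math.round(min / scale);
        return new Params(scale, zeroPoint);
    }

    // float32 -> int8: round to the nearest step, shift, then clamp.
    static byte[] quantize(float[] x, Params p) {
        byte[] q = new byte[x.length];
        for (int i = 0; i < x.length; i++) {
            int v = Math.round(x[i] / p.scale) + p.zeroPoint;
            q[i] = (byte) Math.max(QMIN, Math.min(QMAX, v));
        }
        return q;
    }

    // int8 -> float32: the values come back close to, but not exactly, the originals.
    static float[] dequantize(byte[] q, Params p) {
        float[] x = new float[q.length];
        for (int i = 0; i < q.length; i++) {
            x[i] = (q[i] - p.zeroPoint) * p.scale;
        }
        return x;
    }

    public static void main(String[] args) {
        float[] weights = {-1.0f, -0.5f, 0.0f, 0.5f, 1.0f};
        Params p = params(weights);
        byte[] q = quantize(weights, p);
        System.out.println("int8 values: " + Arrays.toString(q));
        System.out.println("restored:    " + Arrays.toString(dequantize(q, p)));
        // 20 bytes for float32 vs 5 bytes for int8: a 4x reduction
        System.out.println("float32 bytes: " + weights.length * Float.BYTES
                + ", int8 bytes: " + q.length * Byte.BYTES);
    }
}
```

The restored values differ from the originals by at most about one quantization step, which is the accuracy cost mentioned above.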

Now, after that bit of theory, let’s take a look at the app.
