Disclaimer: I am the developer behind Model Zoo, a model deployment platform focused on ease-of-use.

Try our tool at

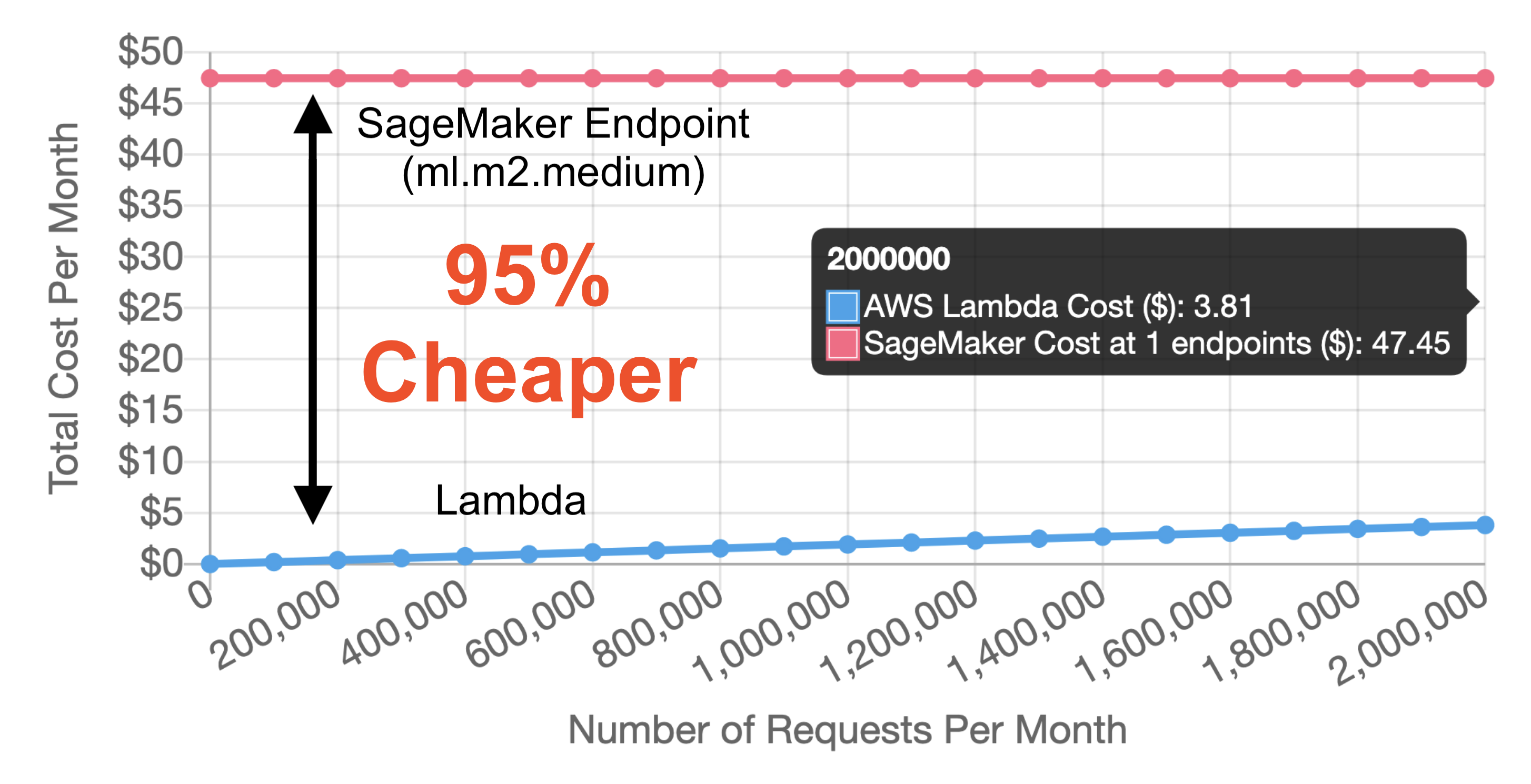

If you’re an AWS customer that needs to deploy machine learning models for real-time inference, you might have considered using AWS SageMaker Inference Endpoints. However, there is another option for model deployment that is sometimes overlooked: deploying directly on AWS Lambda. Although it comes with some caveats, the simplicity and cost-efficiency of Lambda make it worthwhile to consider over SageMaker endpoints for model deployment, especially when using scikit-learn, xgboost, or spaCy. In this article, we’ll go over some of the benefits and caveats of using AWS Lambda for ML inference and dive into some relevant benchmarks. We show that in scenarios of low usage (<2M predictions per month), you can save up to **95% on infrastructure costs** when moving models from SageMaker to Lambda. We’ll also present scikit-learn-lambda, our open-source toolkit for easily deploying scikit-learn on AWS Lambda.

What are AWS SageMaker Inference Endpoints?

AWS infrastructure diagram for realtime ML inference via SageMaker endpoints

SageMaker inference endpoints are one of many pieces of an impressive end-to-end machine learning toolkit offered by AWS, which spans everything from data labeling (AWS SageMaker Ground Truth) to model monitoring (AWS SageMaker Model Monitor). The endpoints offer GPU acceleration, autoscaling, A/B testing, integration with training pipelines, and integration with offline scoring (AWS Batch Transform). These features come at a steep cost: the cheapest possible inference endpoint (ml.t2.medium) costs $50/month when run 24/7. The next best endpoint (ml.t2.xlarge) is $189.65/month.

What is AWS Lambda?

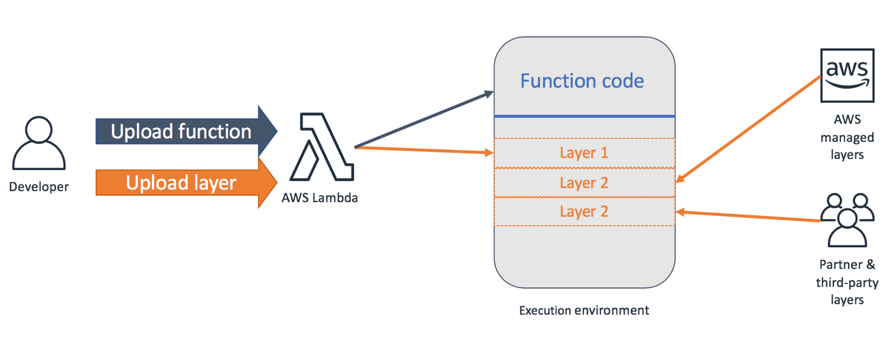

AWS Lambda is a generic serverless computing platform

AWS Lambda is a pioneer of the serverless computing movement, letting you run arbitrary functions without provisioning or managing servers. It executes your code only when needed and scales automatically, from a few requests per day to hundreds per second. Lambda is a generic function execution engine without any machine-learning-specific features. It has inspired a growing community of tooling, some from AWS itself (Serverless Application Model) and some from third parties (Serverless framework).
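To make "run arbitrary functions" concrete, here is a minimal sketch of what a Lambda inference function can look like when it sits behind an API Gateway proxy integration. It is an illustration, not the scikit-learn-lambda implementation: the `_StubModel` class, the `load_model` helper, and the `"input"` request key are all hypothetical names chosen for this example, and the stub stands in for a real trained estimator so the snippet runs without any dependencies.

```python
import json


class _StubModel:
    """Hypothetical stand-in for a trained scikit-learn estimator."""

    def predict(self, rows):
        # Placeholder logic: "predict" the sum of each feature row.
        return [sum(row) for row in rows]


def load_model():
    # Assumption: a real deployment would likely deserialize a model file
    # bundled with the function (for example with joblib) instead of this stub.
    return _StubModel()


# Loaded once at import time, so warm invocations reuse the same model object.
MODEL = load_model()


def handler(event, context):
    """Lambda entry point; expects an API Gateway proxy event with a JSON body."""
    try:
        payload = json.loads(event.get("body") or "{}")
        rows = payload["input"]  # hypothetical request schema
    except (KeyError, TypeError, ValueError) as exc:
        return {"statusCode": 400, "body": json.dumps({"error": str(exc)})}

    predictions = MODEL.predict(rows)
    # With a real estimator the result is usually a NumPy array,
    # which would need .tolist() before JSON serialization.
    return {"statusCode": 200, "body": json.dumps({"prediction": list(predictions)})}
```

Note that the function itself contains no server, threading, or scaling logic; Lambda decides how many copies of it to run.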

Benefits of AWS Lambda for realtime machine learning inference

- Lambda has a pay-per-request model that scales with your growth. Whether this is a pro or a con depends on your usage level, but it is especially cost-efficient when serving under 2M predictions per month. SageMaker, as of this writing, does not support auto-scaling to zero during periods of low activity.

- Lambda offers a simpler computational model, in which concurrency and autoscaling are handled transparently outside of your logic.

- Lambda requires lower maintenance effort, without any underlying servers or logic to manage.

- Lambda has a rich developer ecosystem (both open-source and SaaS) for monitoring, logging, and testing serverless applications, such as Serverless. With SageMaker, you’re relying on AWS-specific resources such as the SageMaker-compatible containers and SageMaker Python SDK for tooling.
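The pay-per-request point can be checked with some back-of-the-envelope arithmetic. The sketch below assumes Lambda's public list prices at the time of writing ($0.20 per million requests and $0.0000166667 per GB-second) and compares against the $50/month ml.t2.medium endpoint mentioned earlier. The default duration and memory size are illustrative assumptions rather than benchmark results, and the estimate ignores the Lambda free tier and any API Gateway charges, so treat the output as a rough guide only.

```python
# Assumed Lambda list prices; check the current AWS pricing page before relying on them.
LAMBDA_PRICE_PER_REQUEST = 0.20 / 1_000_000   # USD per invocation
LAMBDA_PRICE_PER_GB_SECOND = 0.0000166667     # USD per GB-second of compute

# From the article: cheapest SageMaker endpoint (ml.t2.medium) running 24/7.
SAGEMAKER_MONTHLY_USD = 50.0


def lambda_monthly_cost(predictions, avg_duration_ms=100, memory_mb=1024):
    """Estimate monthly Lambda cost in USD (no free tier, no API Gateway)."""
    gb_seconds = predictions * (avg_duration_ms / 1000) * (memory_mb / 1024)
    request_cost = predictions * LAMBDA_PRICE_PER_REQUEST
    compute_cost = gb_seconds * LAMBDA_PRICE_PER_GB_SECOND
    return request_cost + compute_cost


def savings_vs_sagemaker(predictions, **kwargs):
    """Fraction of the SageMaker bill saved on Lambda (negative if Lambda costs more)."""
    return 1 - lambda_monthly_cost(predictions, **kwargs) / SAGEMAKER_MONTHLY_USD


if __name__ == "__main__":
    for n in (100_000, 1_000_000, 2_000_000, 50_000_000):
        cost = lambda_monthly_cost(n)
        print(f"{n:>11,} predictions/month: ${cost:8.2f} "
              f"({savings_vs_sagemaker(n):+.0%} vs. SageMaker)")
```

Because the Lambda bill grows linearly with traffic while the endpoint price is flat, there is a break-even volume beyond which the always-on endpoint becomes cheaper; where that point falls depends heavily on the per-request duration and memory you assume.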
