What is Model Stacking?

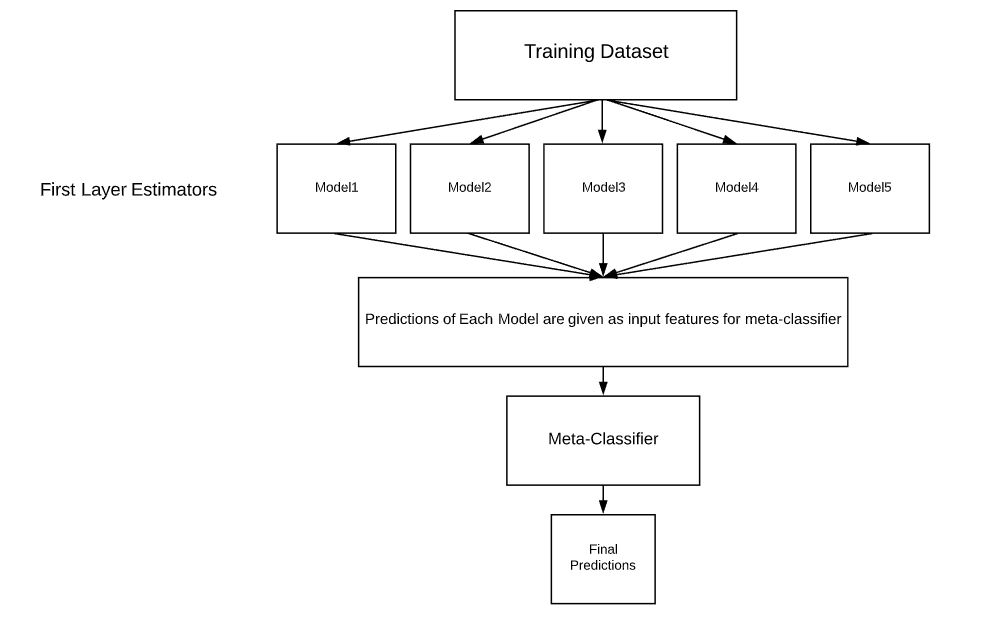

Model stacking is a way to improve predictions by combining the outputs of multiple models and running them through another machine learning model called a meta-learner. It is a popular strategy for winning Kaggle competitions, but despite its usefulness it is rarely talked about in data science articles, which I hope to change.

Essentially, a stacked model works by running the outputs of multiple base models through a “meta-learner” (usually a linear regressor or classifier, though other models such as decision trees also work). The meta-learner tries to minimize the weaknesses and maximize the strengths of each individual model by learning how much to trust each one's predictions. The result is usually a very robust model that generalizes well to unseen data.
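To make the idea concrete, here is a minimal sketch of stacking in plain Python, using only the standard library. Everything in it is an illustrative assumption rather than a recipe from this article: the toy dataset, the two base models (a least-squares line and a k-nearest-neighbours averager), the 5-fold split, and helper names such as `fit_meta` and `stacked_predict`. The meta-learner is a linear regressor without an intercept, trained on out-of-fold predictions so that it never sees a base model's prediction for a point that model was fitted on.

```python
import math
import random

def fit_linear(xs, ys):
    """Base model 1: least-squares line y = a*x + b."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    a = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx
    b = my - a * mx
    return lambda x: a * x + b

def fit_knn(xs, ys, k=3):
    """Base model 2: average the targets of the k nearest training points."""
    pairs = list(zip(xs, ys))
    def predict(x):
        nearest = sorted(pairs, key=lambda p: abs(p[0] - x))[:k]
        return sum(y for _, y in nearest) / len(nearest)
    return predict

def fit_meta(p1, p2, ys):
    """Meta-learner: least-squares weights for w1*p1 + w2*p2 (no intercept)."""
    a11 = sum(u * u for u in p1)
    a12 = sum(u * v for u, v in zip(p1, p2))
    a22 = sum(v * v for v in p2)
    b1 = sum(u * y for u, y in zip(p1, ys))
    b2 = sum(v * y for v, y in zip(p2, ys))
    det = a11 * a22 - a12 * a12  # assumes the two prediction columns are not collinear
    return (b1 * a22 - b2 * a12) / det, (a11 * b2 - a12 * b1) / det

def mse(preds, ys):
    return sum((p - y) ** 2 for p, y in zip(preds, ys)) / len(ys)

# Toy data (an assumption for illustration): a noisy nonlinear curve.
rng = random.Random(0)
xs = [rng.uniform(0, 6) for _ in range(120)]
ys = [math.sin(x) + 0.5 * x + rng.gauss(0, 0.2) for x in xs]

base_fitters = [fit_linear, fit_knn]
n_folds = 5

# Step 1: collect out-of-fold predictions from every base model.
oof = [[0.0] * len(xs) for _ in base_fitters]
for fold in range(n_folds):
    train_idx = [i for i in range(len(xs)) if i % n_folds != fold]
    held_out_idx = [i for i in range(len(xs)) if i % n_folds == fold]
    train_x = [xs[i] for i in train_idx]
    train_y = [ys[i] for i in train_idx]
    for m, fitter in enumerate(base_fitters):
        model = fitter(train_x, train_y)
        for i in held_out_idx:
            oof[m][i] = model(xs[i])

# Step 2: train the meta-learner on those out-of-fold predictions.
w1, w2 = fit_meta(oof[0], oof[1], ys)

# Step 3: refit the base models on all data for use at prediction time.
base_models = [fitter(xs, ys) for fitter in base_fitters]

def stacked_predict(x):
    return w1 * base_models[0](x) + w2 * base_models[1](x)

oof_mse_base = [mse(column, ys) for column in oof]
oof_mse_stacked = mse([w1 * a + w2 * b for a, b in zip(oof[0], oof[1])], ys)
print("base out-of-fold MSE:", oof_mse_base)
print("stacked out-of-fold MSE:", oof_mse_stacked)
print("meta-learner weights:", (w1, w2))
```

Because the meta-learner picks the least-squares weights, its error on the out-of-fold predictions cannot be worse than either base model alone; whether that carries over to truly unseen data depends on the problem. In practice you would rarely write this by hand: scikit-learn, for example, packages the same pattern as `StackingRegressor` and `StackingClassifier`.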

The architecture for a stacked model can be illustrated by the image below:
