Learn how to use Kubeflow to set up machine learning orchestration on Kubernetes for your AI projects.

MLOps: From Proof of Concept to Industrialization

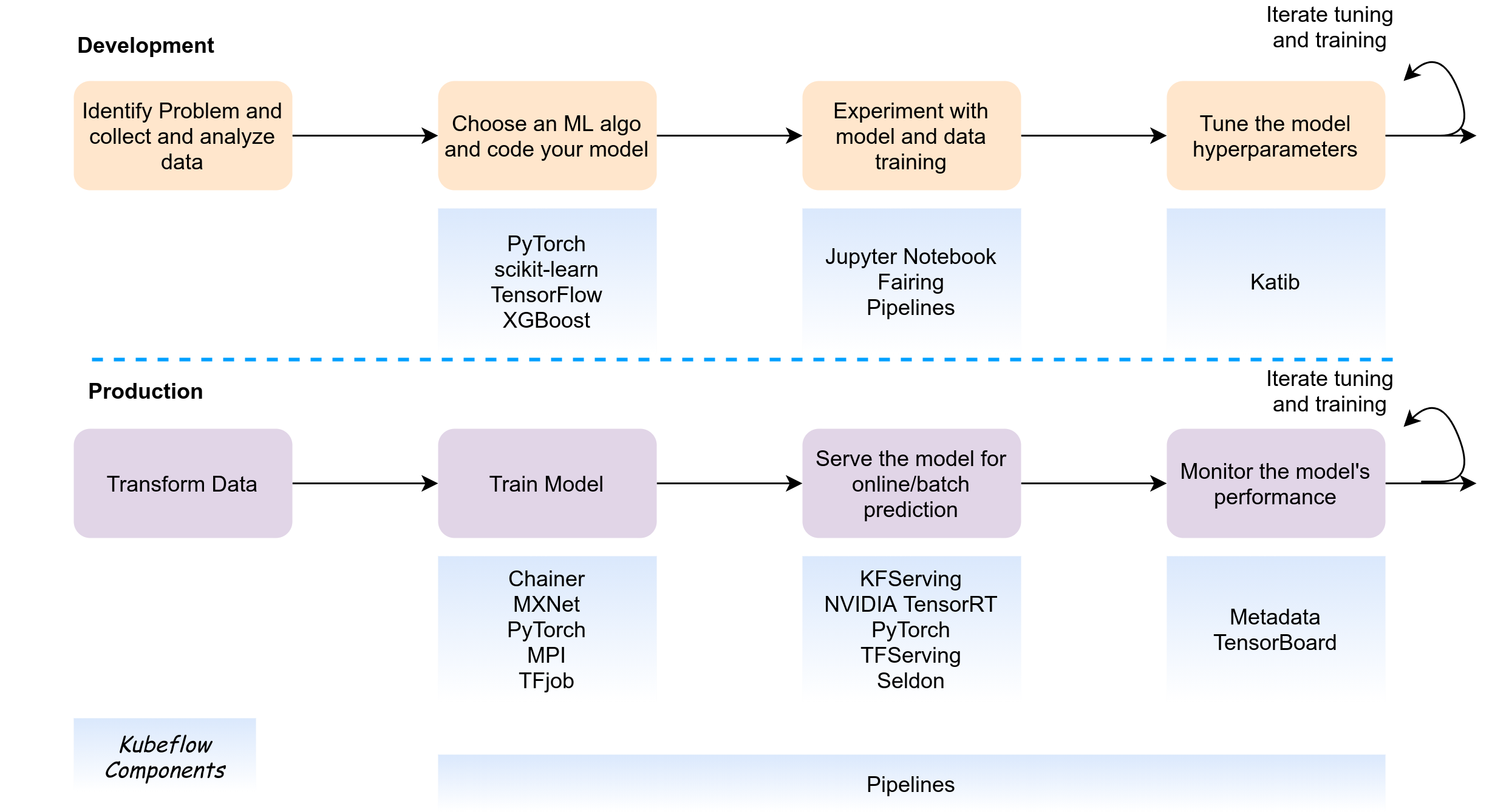

In recent years, AI and machine learning have seen tremendous growth across industries in a range of innovative use cases, and many business leaders now treat them as a top strategic priority. When we adopt a new technology, the first step is usually small-scale experimentation on very basic use cases; the next step is to scale up operations. Sophisticated ML models help companies efficiently discover patterns, uncover anomalies, make predictions and decisions, and generate insights, and they are increasingly becoming a key differentiator in the marketplace.

Companies recognise the need to move from proofs of concept to engineered solutions, and to move ML models from development to production. In practice, however, tooling is inconsistent and the development and deployment process is inefficient. As these technologies mature, we need operational discipline and sophisticated workflows to take advantage of them and operate at scale. This is popularly known as MLOps, ML CI/CD, or ML DevOps. In this article, we explore how this can be achieved with the Kubeflow project, which aims to make deploying machine learning workflows on Kubernetes simple, portable, and scalable.

MLOps in Cloud Native World

Enterprise ML platforms such as Amazon SageMaker, Azure ML, Google Cloud AI, and IBM Watson Studio are available in public cloud environments. For on-premises and hybrid environments, the most notable open-source platform is Kubeflow.

What is Kubeflow?

Kubeflow is a curated collection of machine learning frameworks and tools. It is a platform for data scientists and ML engineers who want to experiment with their models and design an efficient workflow to develop, test, and deploy at scale. It is a portable, scalable, open-source platform built on top of Kubernetes that abstracts away the underlying Kubernetes concepts.

Kubeflow Architecture

Kubeflow uses various cloud native technologies such as Istio, Knative, Argo, and Tekton, and leverages Kubernetes primitives such as deployments, services, and custom resources. Istio and Knative help provide capabilities like blue/green deployments, traffic splitting, canary releases, and auto-scaling. Kubeflow abstracts the Kubernetes components by providing a UI, a CLI, and easy workflows that people unfamiliar with Kubernetes can use.
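
To make the idea of a custom resource more concrete, here is a minimal sketch of how a training job could be described as one. It assumes the `TFJob` resource from Kubeflow's training operator with the `kubeflow.org/v1` layout; the job name and image are hypothetical placeholders, and the exact fields should be checked against the version installed on your cluster.

```python
import json


def build_tfjob_manifest(name: str, image: str, workers: int) -> dict:
    """Return a TFJob custom resource as a plain dictionary.

    Assumption: field layout follows the kubeflow.org/v1 TFJob schema.
    """
    return {
        "apiVersion": "kubeflow.org/v1",
        "kind": "TFJob",
        "metadata": {"name": name},
        "spec": {
            "tfReplicaSpecs": {
                "Worker": {
                    "replicas": workers,
                    "restartPolicy": "OnFailure",
                    "template": {
                        "spec": {
                            "containers": [
                                # The training container is conventionally named "tensorflow".
                                {"name": "tensorflow", "image": image}
                            ]
                        }
                    },
                }
            }
        },
    }


# Hypothetical job name and image, used only for illustration.
manifest = build_tfjob_manifest(
    name="mnist-train",
    image="example.com/mnist-train:latest",
    workers=2,
)
print(json.dumps(manifest, indent=2))
```

The printed JSON is an ordinary Kubernetes manifest, so on a cluster where the training operator is installed it could be saved to a file and submitted with `kubectl apply -f`. The point is that a distributed training job becomes one declarative object rather than a hand-managed set of pods.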

For its ML capabilities, Kubeflow integrates leading frameworks and tools such as TensorFlow, MXNet, Jupyter Notebooks, PyTorch, and Seldon Core. This integration provides data preparation, training, and serving capabilities.

Let’s look at the Kubeflow components:

- Central Dashboard: The user interface for managing Kubeflow pipelines and interacting with the various components.

- Jupyter Notebooks: Let you develop models and collaborate with other team members.

- Metadata: Helps organize workflows by tracking and managing the metadata of their artifacts. In this context, metadata means information about executions (runs), models, datasets, and other artifacts. Artifacts are the files and objects that form the inputs and outputs of the components in your ML workflow.

- Fairing: Lets you run training jobs remotely from a notebook or local Python code, and deploy prediction endpoints.

- Feature Store (Feast): Helps with feature sharing and reuse, serving features at scale, consistency between training and serving, point-in-time correctness, and maintaining data quality and validation.

- ML Frameworks: A collection of supported frameworks, including Chainer (deprecated), MPI, MXNet, PyTorch, and TensorFlow.

- Katib: Implements automated machine learning through hyperparameter tuning (hyperparameters are the variables that control the model training process) and Neural Architecture Search (NAS), both aimed at improving the predictive accuracy and performance of the model. It also includes a web UI for interacting with Katib.

- Pipelines: Provides end-to-end orchestration and easy-to-reuse solutions that simplify experimentation.

- Tools for Serving: Two model serving systems allow multi-framework model serving: KFServing and Seldon Core. You can read more about tools for serving here.
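
The hyperparameter tuning that Katib automates can be illustrated with a small random search. This is a plain-Python sketch of the general technique, not Katib's API: the `validation_loss` function is a hypothetical stand-in for a real training run, and the search space is invented for illustration.

```python
import random


def validation_loss(learning_rate: float, batch_size: int) -> float:
    """Hypothetical stand-in for training a model and measuring its loss.

    Lower is better; the minimum is at learning_rate=0.01, batch_size=64.
    """
    return (learning_rate - 0.01) ** 2 * 1e4 + abs(batch_size - 64) / 64


def random_search(trials: int, seed: int = 0) -> dict:
    """Sample hyperparameters at random and keep the best trial."""
    rng = random.Random(seed)  # fixed seed so runs are reproducible
    best = None
    for _ in range(trials):
        params = {
            # Sample the learning rate on a log scale between 1e-4 and 1e-1.
            "learning_rate": 10 ** rng.uniform(-4, -1),
            "batch_size": rng.choice([16, 32, 64, 128]),
        }
        loss = validation_loss(**params)
        if best is None or loss < best["loss"]:
            best = {"params": params, "loss": loss}
    return best


best = random_search(trials=50)
print(best)
```

In a real setup each trial would be a full training job on the cluster, which is why running and tracking the trials is worth delegating to a dedicated tool.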
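
The orchestration idea behind Pipelines, together with the artifact tracking described under Metadata, can be sketched as a small dependency graph of steps. This is a conceptual, plain-Python illustration and not the Kubeflow Pipelines SDK: the step names, the toy "model", and the `run_pipeline` helper are all hypothetical.

```python
from graphlib import TopologicalSorter  # Python 3.9+


def prepare_data(inputs: dict) -> list:
    # Toy dataset standing in for a real data preparation step.
    return [1.0, 2.0, 3.0, 4.0]


def train(inputs: dict) -> dict:
    data = inputs["prepare_data"]
    # The "model" is just the mean of the data.
    return {"mean": sum(data) / len(data)}


def evaluate(inputs: dict) -> float:
    model = inputs["train"]
    data = inputs["prepare_data"]
    # Report the largest absolute error of the mean predictor.
    return max(abs(x - model["mean"]) for x in data)


STEPS = {"prepare_data": prepare_data, "train": train, "evaluate": evaluate}

# Each step maps to the set of steps whose outputs it consumes.
DEPENDS_ON = {
    "prepare_data": set(),
    "train": {"prepare_data"},
    "evaluate": {"prepare_data", "train"},
}


def run_pipeline(steps: dict, depends_on: dict) -> tuple:
    """Run steps in dependency order and record which artifacts each one used."""
    artifacts = {}
    run_log = []
    for name in TopologicalSorter(depends_on).static_order():
        inputs = {dep: artifacts[dep] for dep in depends_on[name]}
        artifacts[name] = steps[name](inputs)
        run_log.append({"step": name, "inputs": sorted(inputs)})
    return artifacts, run_log


artifacts, run_log = run_pipeline(STEPS, DEPENDS_ON)
print(artifacts["evaluate"])
print(run_log)
```

Declaring dependencies separately from the step code is what makes steps reusable: the same `train` function could be wired into a different graph without modification. A real orchestrator would additionally run each step in its own container and persist the artifacts.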
