In vanilla federated learning [1], the centralized server will send a global model to each participant before training takes place. After every round of federated training, the participants send back its local gradient to the global model and the server updated it with the average of all the local gradients. Hence, the participants involved in the federated learning process only obtained a generalized global model with no respect for any personalization of their data. One of the challenges in federated learning is data and device heterogeneity, this can pose a problem when a user has rich data but is unable to customize the global model to take advantage of its own personalization (Since gradients are averaged, the averaging effects can get drown out by other gradients other than it’s own).

With statistical or data heterogeneity, different participants have different data. In order to have a personalized model such as different models created for different participants, statistical heterogeneity needs to be addressed first, which implicitly leads to model heterogeneity. To tackle statistical heterogeneity is to have an individual model for each participant, however, we also need to ensure that the individual model converges to a true global model which is not possible with simple averaging due to client drift.

In this paper [3], the author focus on a different type of heterogeneity, which is the differences in local models. The author explored and applied two techniques such as transfer learning and knowledge distillation [2] into federated learning. This allows the global model to be universal and also allows every participant to have a customized model with personalization.

One trivial example without personalization is, assuming we are training a federated learning model for a food recommendation with two participants A and B as shown in Fig 1. Participant A only has data for fruits, participant B only has data for beverages. Clearly both participants have data drawn from different distributions. Hence, using simple averaging of the gradients doesn’t make any of the models unique, as participant A only wants fruit personalization, and participant B only wants drinks personalization and not a mixture of both.

FedMD framework

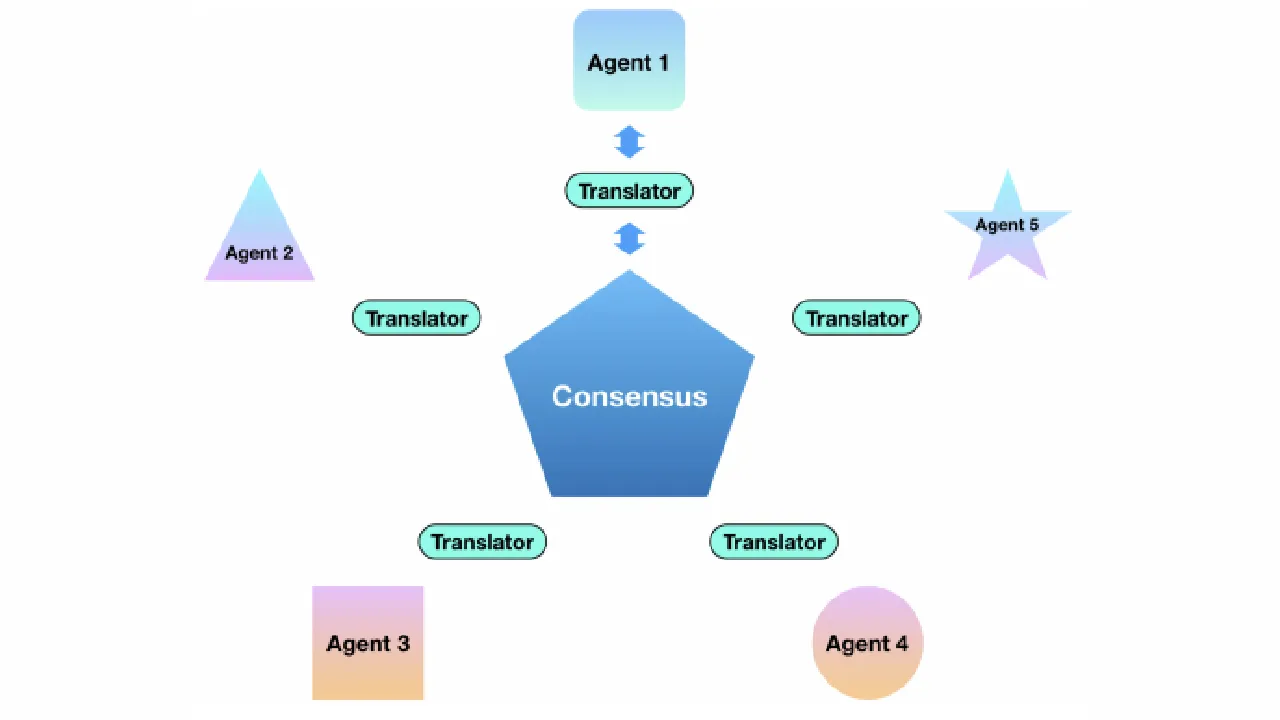

The author proposes a framework called “FedMD” which allows transfer learning and knowledge distillation to be incorporated into federated learning Fig 2. below.

Transfer learning

The reason for using transfer learning is the scarcity of private data since private datasets can be small and if we can leverage transfer learning on a large public dataset it would be extremely beneficial to the model.

Knowledge distillation

With knowledge distillation [2], the learned knowledge is communicated based on class scores or probability scores. These newly computed class scores will be used as the new target for the dataset, and with these approaches, we can train any agnostic model to leverage the knowledge learned from one model into another model.

#deep-learning #federated-learning #machine-learning