In other words, we want to find an embedding for each word in some vector space, and we want that embedding to exhibit certain desired properties.

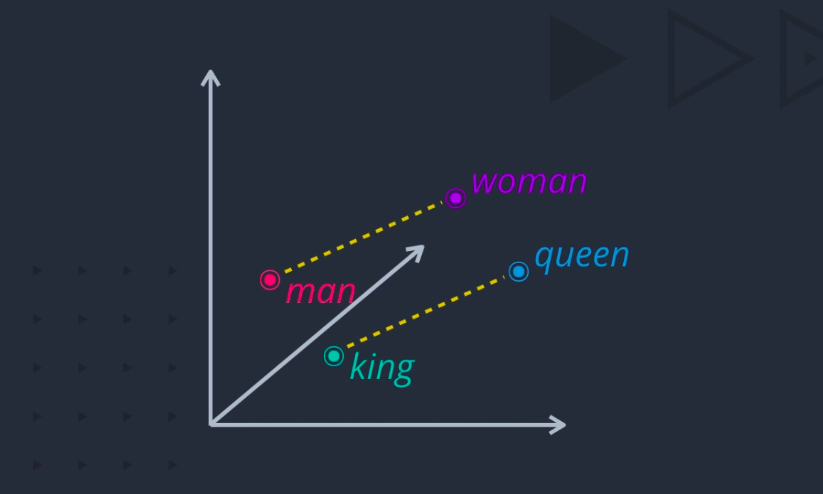

Representation of different words in vector space (Image by author)

For example, if two words are similar in meaning, they should be closer to each other than words that are not. And if two pairs of words have a similar difference in their meanings, they should be approximately equally separated in the embedded space.
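To make these two properties concrete, here is a minimal sketch using hand-picked 2-D vectors. The words, the coordinates, and the helper names (`toy_vectors`, `distance`, `difference`) are all assumptions made up for illustration; they are not learned embeddings and do not come from any real model.

```python
import math

# Hand-picked 2-D toy vectors, purely illustrative (NOT learned embeddings).
toy_vectors = {
    "king":  (3.0, 4.0),
    "queen": (3.0, 2.0),
    "man":   (1.0, 3.0),
    "woman": (1.0, 1.0),
    "apple": (-3.0, 0.5),
}

def distance(a, b):
    """Euclidean distance between two vectors."""
    return math.dist(a, b)

def difference(a, b):
    """Component-wise difference a - b."""
    return tuple(x - y for x, y in zip(a, b))

# Property 1: words with similar meanings sit closer together.
d_similar = distance(toy_vectors["king"], toy_vectors["queen"])
d_unrelated = distance(toy_vectors["king"], toy_vectors["apple"])

# Property 2: pairs with a similar difference in meaning
# are separated by (approximately) the same offset.
offset_royal = difference(toy_vectors["king"], toy_vectors["queen"])
offset_common = difference(toy_vectors["man"], toy_vectors["woman"])

print(d_similar < d_unrelated)        # is "king" nearer to "queen" than to "apple"?
print(offset_royal, offset_common)    # the two offsets, for comparison
```

In this toy setup the offsets match exactly because the coordinates were chosen that way. With embeddings learned from real text, we would only expect them to be approximately equal.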

We could use such a representation for a variety of purposes like finding synonyms and analogies, identifying concepts around which words are clustered, classifying words as positive, negative, neutral, etc. By combining word vectors, we can come up with another way of representing documents as well.
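As a rough sketch of two of these uses, the snippet below looks up the nearest word by cosine similarity and builds a document vector by averaging word vectors. Averaging is just one simple way of combining vectors, chosen here as an assumption. The 3-D vectors and the helper names (`cosine_similarity`, `most_similar`, `document_vector`) are hypothetical and only meant to illustrate the idea.

```python
import math

# Hypothetical 3-D toy embeddings, invented for this example.
toy_vectors = {
    "good":  (0.9, 0.1, 0.0),
    "great": (0.8, 0.2, 0.1),
    "bad":   (-0.9, 0.1, 0.0),
    "movie": (0.0, 0.9, 0.3),
}

def cosine_similarity(a, b):
    """Cosine of the angle between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def most_similar(word, vectors):
    """Return the other word whose vector is most similar to `word`."""
    candidates = (w for w in vectors if w != word)
    return max(candidates, key=lambda w: cosine_similarity(vectors[word], vectors[w]))

def document_vector(tokens, vectors):
    """Average the vectors of all tokens that have an embedding."""
    known = [vectors[t] for t in tokens if t in vectors]
    if not known:
        raise ValueError("no token in the document has an embedding")
    dims = len(known[0])
    return tuple(sum(v[i] for v in known) / len(known) for i in range(dims))

# Finding a synonym-like neighbour.
nearest = most_similar("good", toy_vectors)

# Representing two tiny "documents" by combining their word vectors.
doc_positive = document_vector(["great", "movie"], toy_vectors)
doc_negative = document_vector(["bad", "movie"], toy_vectors)

print(nearest)
print(cosine_similarity(doc_positive, toy_vectors["good"]))
print(cosine_similarity(doc_negative, toy_vectors["good"]))
```

Comparing each document vector with the vector for "good" hints at how the same representation could feed a simple positive/negative classifier.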

Word2Vec — The General Idea

Word2Vec is perhaps one of the most popular examples of word embeddings used in practice. As the name Word2Vec indicates, it transforms words to vectors. But what the name doesn’t give away is how that transformation is performed.
