After training a machine learning classifier, the next step is to evaluate its performance using one or more relevant metrics. The confusion matrix is one such metric.

A confusion matrix is a table that summarizes the performance of a classifier by comparing its predictions against known ground-truth labels (that is, in a supervised learning setting).

Calculating a confusion matrix for object detection and instance segmentation tasks, however, is less intuitive. First, it is necessary to understand a supporting metric that plays a key role in evaluating these tasks: Intersection over Union (IoU).

Intersection over Union (IoU)

IoU, also called the **Jaccard index**, is a metric that evaluates the overlap between the ground-truth mask (gt) and the predicted mask (pd). In object detection, we can use IoU to determine whether a given detection is valid.

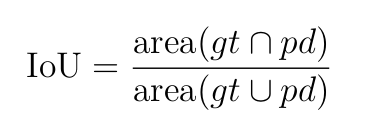

IoU is calculated as the area of overlap (intersection) between gt and pd divided by the area of their union, that is,

$$\text{IoU} = \frac{\text{area}(gt \cap pd)}{\text{area}(gt \cup pd)}$$

Diagrammatically, IoU is defined as shown below:

Fig 1 (Source: Author)

Note: The IoU metric ranges from 0 to 1, with 0 signifying no overlap and 1 implying a perfect overlap between gt and pd.
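To make the definition concrete, here is a minimal sketch of IoU for two axis-aligned bounding boxes. The function name `box_iou` and the `(x_min, y_min, x_max, y_max)` box format are assumptions chosen for illustration. For segmentation masks the same ratio would be computed over pixels rather than rectangle areas.

```python
def box_iou(gt, pd):
    """IoU of two axis-aligned boxes.

    Assumed (hypothetical) box format: (x_min, y_min, x_max, y_max).
    """
    # Corners of the intersection rectangle
    ix_min = max(gt[0], pd[0])
    iy_min = max(gt[1], pd[1])
    ix_max = min(gt[2], pd[2])
    iy_max = min(gt[3], pd[3])

    # Width and height are clamped to zero when the boxes do not overlap
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)

    area_gt = (gt[2] - gt[0]) * (gt[3] - gt[1])
    area_pd = (pd[2] - pd[0]) * (pd[3] - pd[1])

    # Union = sum of both areas minus the doubly counted intersection
    union = area_gt + area_pd - inter
    return inter / union if union > 0 else 0.0


# Two 2x2 boxes overlapping in a 1x1 square: intersection 1, union 7
print(box_iou((0, 0, 2, 2), (1, 1, 3, 3)))
```

Identical boxes give 1.0 and disjoint boxes give 0.0, matching the range described in the note above.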

A confusion matrix is made up of 4 components, namely, True Positive (TP), True Negative (TN), False Positive (FP) and False Negative (FN). To define these components, we need to choose a threshold (say α) on IoU.
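As a rough illustration of how such a threshold might be applied, the sketch below labels each detection from its IoU with a matched ground-truth object. It assumes a common convention, which is not necessarily the exact rule used in this article: a detection counts as TP when IoU ≥ α and as FP otherwise. The function name `label_detections` and the default α = 0.5 are hypothetical choices for the example.

```python
def label_detections(ious, alpha=0.5):
    """Label detections as TP or FP from their IoU values.

    Assumption (common convention): IoU >= alpha is a true positive,
    anything below alpha is a false positive.
    """
    return ["TP" if iou >= alpha else "FP" for iou in ious]


# IoU of three detections with their matched ground-truth objects
print(label_detections([0.9, 0.5, 0.3]))              # alpha = 0.5
print(label_detections([0.9, 0.5, 0.3], alpha=0.75))  # stricter threshold
```

Raising α makes the criterion stricter, so the same detections can move from TP to FP. Under this convention, a ground-truth object that no detection matches would be counted as FN, which this sketch does not cover.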
